Introduction to Azure IoT (an MVA course)

One of my university professors once said that “Software is the most complex creation of man.”

I think I’m drawn to software development and to technology in general precisely because it’s complex. It’s a field whose limits I know I’ll never reach. I will never run up against boundaries on how creative I can be with technology, and I’ll never run out of new concepts to learn.

So that’s what I love to do - to be involved with learning and teaching technology. That’s why I usually say yes to opportunities to present online learning courses.

In April 2017, I presented a course on Microsoft Virtual Academy called Introduction to Azure IoT.

The course served to introduce curious viewers to IoT in general as well as to the broad offerings of Azure in the area of IoT, and it also served to introduce viewers to the more in-depth course on the same subject available on the edX platform. Jump over to Developing IoT Solutions Using Azure IoT (DEV225) on edX now.

You can download this PowerPoint deck to get a deeper sense of what was covered as well as to get a reference to the various external links that I used.

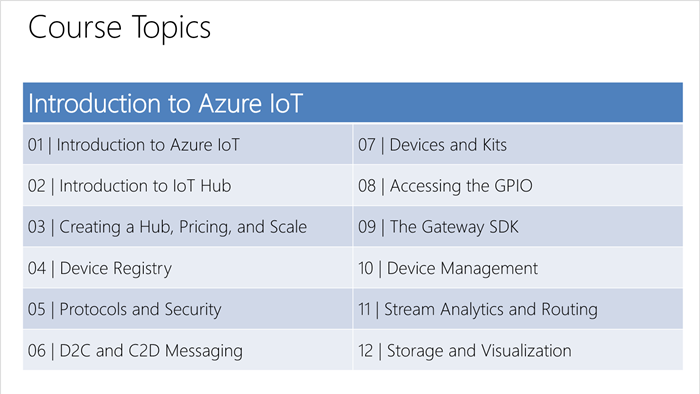

Here are the topics…

Hope this helps you ramp up on IoT!

Dynamic Bot Dialogs

Please note that this article’s age is showing as it’s talking about Bot Framework v3. The subsequent version of Bot Framework works entirely differently.

I’m having a lot of fun developing against botbuilder - the Node.js SDK for the bot framework.

When you’re learning to make bots, you study and build a lot of simple bots that do very little. In this case, it makes good sense to simply define the bot’s dialogs in the same file where you do everything else - the file you may call server.js or app.js or index.js. But if you are working on a bot with enough complexity or bulk to the dialogs, you’ll want to settle on a pattern.

Encapsulated Dialogs

The first pattern I embraced I learned from @pveller’s excellent ecommerce-chatbot. In fact, I learned a lot of good patterns from this bot.

In the ecommerce-chatbot bot, Pavel breaks each dialog out into a separate JavaScript file and wraps each one in its own module. Then from the main file, he calls out to those modules, passing in the bot, and “wires up” the dialog to the bot within that separate module.

Notice in the following code that the main app.js file configures the dialog by requiring it and then calling the returned function passing in the bot object. That allows the dialog to use the bot internally (even though it’s a separate module) to call bot.dialog() and define the dialog functions.

//simplified from https://github.com/pveller/ecommerce-chatbot

The result is a much more concise app.js file and a bit of welcome encapsulation. The dialogs handle themselves and nothing more.
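The snippet above is abbreviated, so here is a minimal sketch of the same idea. It is not Pavel's actual code. The stub bot, the module names, and the dialog routes are all made up for illustration, and the two "modules" are inlined as functions so the example runs as a single file without the botbuilder SDK.

```javascript
// Stand-in for the bot object so this sketch runs without the SDK.
// It only records what gets registered; the real bot.dialog() does far more.
function createStubBot() {
  const dialogs = {};
  return {
    dialogs,
    dialog(id, steps) {
      dialogs[id] = steps;
      return this;
    },
  };
}

// dialogs/greeting.js (hypothetical): in a real project this function would be
// assigned to module.exports. It receives the bot and wires up its own dialog.
const greetingModule = function (bot) {
  bot.dialog('/greeting', [
    function (session) {
      session.send('Hello there!');
      session.endDialog();
    },
  ]);
};

// dialogs/checkout.js (hypothetical)
const checkoutModule = function (bot) {
  bot.dialog('/checkout', [
    function (session) {
      session.send('Ready to check out?');
      session.endDialog();
    },
  ]);
};

// app.js: with real files these two lines would read
// require('./dialogs/greeting')(bot) and require('./dialogs/checkout')(bot).
const bot = createStubBot();
greetingModule(bot);
checkoutModule(bot);

console.log(Object.keys(bot.dialogs));
```

The main file never sees the dialog steps. It only hands the bot to each module, which is where the encapsulation comes from.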

Dynamically Loaded Dialogs

Later, while I was working with Johnson and Johnson on a bot, I developed a pattern for dynamically loading dialogs based simply on a) their presence in the dialogs project folder and b) their conformance to a simple pattern.

To create a new dialog, then, here’s all I need to do…

module.exports = function (name, bot) {

The convention I need to follow is to define a module with a function that accepts both a name and a bot object.

That function then calls the dialog() method on the bot just like before, but it uses the name that’s passed in as a) the dialog route and b) the trigger action. This means that if the dialog is called greeting, then it will be triggered whenever an action called greeting fires.

So far, this is a small advantage, but look at how I load this and the other dialogs…

getFileNames('./app/dialogs')

The getFileNames function is my own, but it simply reads the path you pass in recursively and returns all .js files.

The .map() call requires each found file and stores the resulting export (in our case, each module exports a function) on an object in the array as a property called fx.

Finally, we call .forEach() on this and actually execute the function. This configures the dialog for our bot.

The overall result then is the ability to add dialogs to the bot without any wiring. You just create a new dialog, give it a filename that makes good sense in your application, and it should be loaded and ready to be targeted.

You may not get enough context from these snippets to implement this if that’s what you want to do, so check out a fuller sample in the botstarter repo that @danielegan is working on. The botstarter repo is designed to be a good starting point for creating bots.

A Tale of Two Gateways

Two Types of Gateways

There are two types of gateways in the IoT (Internet of Things) world.

The first is a field gateway. It’s called such because it resides in the “field” - that is, it’s on location and not in the cloud. It’s in the factory or on the robot, for instance. Microsoft has an open source codebase for field gateways called the Azure IoT Gateway SDK that you can start with.

The second is a cloud gateway, and obviously that one is in the cloud. Microsoft has a codebase for one common cloud gateway function, protocol adaptation, available as Azure IoT Protocol Gateway.

Both of these entities exist as a point of communication through which you direct your IoT message traffic for various reasons.

You’ll also hear the term edge to refer to devices and gateways in the field. The edge is the part of an IoT solution that’s touching the actual things. In the internet of cows, it’s the device hanging on the cow’s collar. In an airliner, it’s all the stuff on the plane itself (which I realize is a confusing scenario since technically those devices may also be in a cloud).

Reasons to Use a Gateway

Some possible reasons gateways exist are…

you need to filter the data. It may be that qualifying data deserves the trip to the cloud, but the rest just needs to be archived to local mass storage or even completely ignored.

you need to aggregate the data. Your messages may be too granular, and what you really want to send to the cloud is a moving average, a batch of each 1000 messages, a batch of messages every hour, or something else.

you need to react to your data quickly. It doesn’t usually take that long to get to the cloud and back, but then again “long” is relative. If you’re trying to apply the brakes in a vehicle, every millisecond counts.

you need to control costs. You can use filtering or aggregation to massage your messages before going to the cloud to reduce your costs, but there may be some other business logic you can apply to the same end.

you have some cross cutting concerns such as message logging, authorization, or security that a gateway can facilitate or enforce.

you need some additional capabilities. Devices that are not IP capable or not able to encrypt messages depend on a field gateway to get any messages to the cloud. Devices that are able to speak securely to the cloud but are for some reason not capable of using one of the standard IoT protocols (HTTP, AMQP, or MQTT) require either a field gateway or a cloud gateway (such as Azure IoT Protocol Gateway).
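To make the filtering and aggregation reasons concrete, here is a small sketch of what that logic might look like on a field gateway. It does not use the Azure IoT Gateway SDK. The function names, the reading shape, and the batch size are all hypothetical.

```javascript
// Hypothetical gateway stage: drop readings that do not qualify, then
// forward one summary message per batch instead of every single reading.
function createAggregator({ batchSize, isQualifying, forward }) {
  let batch = [];
  return function onReading(reading) {
    if (!isQualifying(reading)) return; // filter: this one never leaves the field
    batch.push(reading);
    if (batch.length < batchSize) return; // keep collecting
    const sum = batch.reduce((total, r) => total + r.value, 0);
    forward({ count: batch.length, average: sum / batch.length });
    batch = [];
  };
}

const sentToCloud = [];
const onReading = createAggregator({
  batchSize: 3,
  isQualifying: (r) => r.value >= 0, // e.g. treat negative values as sensor errors
  forward: (summary) => sentToCloud.push(summary), // stand-in for a cloud send
});

// Five device readings arrive; one is filtered out and three form a batch.
[10, -1, 20, 30, 40].forEach((value) => onReading({ value }));
console.log(sentToCloud);
```

Five device messages turn into a single cloud message here, which is also where the cost savings would come from.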

Gateway Hardware

What kind of hardware might you end up using for a gateway? Well, the possibilities are very broad. It could be anything from a Raspberry Pi to a very expensive, dedicated gateway system.

Intel has a helpful article about field gateways and the hardware they offer. Dell has a product called the Edge Gateway 5000 that looks to me to be a pretty solid solution too.

Also, Azure maintains a big catalog of certified hardware including gateways that might be the most helpful resource.

Closing

There’s certainly a lot more about gateways to know, but I’ll leave this here now in case it helps you out.

TIL Something About Bot Middleware

PREAMBLE: I am trying to blog about the little things now. The idea is partly the reason why so many technical blogs exist - it’s a place for me to record things I’ll need to recall later. But modern search engines are good enough that you just might make it to this blog post to answer a question that’s burning a hole in your brain right now, and that’s awesome. I know I love it when I get a simple, concise, and sensible explanation of something I’m trying to figure out.

MORE PRE-RAMBLE: So, I’ve sort of drifted into bot territory. That is, I didn’t initially get extremely excited about the concept of chat bots. It seemed silly. I have since been convinced of their big business value and have really enjoyed learning how to embrace the Node.js SDK for Microsoft’s Bot Framework.

Recently, I realized that the very best way to learn about the SDK is not to search online for docs or posts, but to go straight to the source, and when you get there, look specifically for the /core/lib/botbuilder.d.ts file.

That file is a treasure trove of useful comments directly decorating the methods, interfaces, and properties of your bot. It’s great that the SDK is written in TypeScript, because that means this source code contains a lot of documenting types that not only made it easier for the team to develop this, but now make it easier for us to read it as well.

Tonight I was specifically wondering about something. I had seen middleware components for bots using property values of botbuilder and send, but then I saw receive and wondered what every possible property was and specifically what they did.

I discovered that in fact botbuilder, send, and receive are the only possible property values there. Let me drop that snippet of the source code here, so you can see how well documented those are…

/**

The IMiddlewareMap is an interface, which is a TypeScript concept. That’s not in raw JavaScript. TypeScript does interfaces right, because they’re not actually enforced at runtime on objects that implement them (we are, after all, talking about JavaScript where pretty much nothing is enforced). Rather, they’re an indication of intent - as in “I intend for my object to conform to the IMiddlewareMap interface.”

That means that at design time (when you’re typing the code in your IDE), you get good information back about whether what you’re typing lines up with what you said this object is expected to be.
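As an illustration, here is a plain JavaScript object shaped like a middleware map, using the three property names mentioned above. In the v3 SDK you would pass an object like this to bot.use(). The simulateTurn function below is not part of the SDK. It is a made-up stand-in that calls each hook once so the sketch can run without botbuilder installed, and the event and session shapes are simplified assumptions.

```javascript
const log = [];

// A middleware map using the three allowed property names.
const loggingMiddleware = {
  // receive: sees an incoming event
  receive(event, next) {
    log.push('receive:' + event.type);
    next();
  },
  // botbuilder: sees the session once the message is bound to one
  botbuilder(session, next) {
    log.push('botbuilder:' + session.message.text);
    next();
  },
  // send: sees an outgoing event before it leaves the bot
  send(event, next) {
    log.push('send:' + event.text);
    next();
  },
};

// Hypothetical stand-in for the SDK: exercise each hook once, in the order
// a single message would plausibly flow through them.
function simulateTurn(middleware, text) {
  const steps = [
    (next) => middleware.receive({ type: 'message', text }, next),
    (next) => middleware.botbuilder({ message: { text } }, next),
    (next) => middleware.send({ type: 'message', text: 'echo: ' + text }, next),
  ];
  const run = (i) => {
    if (i < steps.length) steps[i](() => run(i + 1));
  };
  run(0);
}

simulateTurn(loggingMiddleware, 'hi');
console.log(log);
```

Each hook must call next() or the chain stops there, which is how a middleware component can swallow a message on purpose.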

So that’s just one little thing I learned tonight wrapped up with all kinds of preamble, pre-ramble, and other words. Hope it helps. Happy hacking.

Learning Blockchain

I’ve been reading and studying a bit about blockchain recently and am extremely intrigued.

And now, I’ll attempt to explain what blockchain is in brief. Not because you might need to know, but because I usually try to explain something when I’m trying to understand it myself.

Blockchain is a structure and algorithm for storing and accessing data such that data records (transactions or blocks) are hashed and strung together like a linked list data structure. The linking is achieved by each block in the chain including as a part of its definition (and thus as a part of its hash) the hash of the previous block in the chain.

So I guess it’s like carrying a little piece (granted a uniquely identifying piece) of history - the hash - along in every transaction.

It’s not unwieldy because each block only has to worry about one extra hash - a tiny piece of data actually.

The benefit, though, is that if a bad guy goes back and modifies one of the records in an attempt to give himself an advantage of some kind in the data, he invalidates every subsequent block.
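Here is a toy sketch of that linking and of why tampering is detectable. It uses Node's built-in crypto module and leaves out everything else a real blockchain involves (consensus, proof of work, networking). The block shape and function names are made up for illustration.

```javascript
const crypto = require('crypto');

// A block's hash covers its own data plus the previous block's hash.
function hashBlock(data, previousHash) {
  return crypto
    .createHash('sha256')
    .update(previousHash + JSON.stringify(data))
    .digest('hex');
}

function addBlock(chain, data) {
  const previousHash = chain.length ? chain[chain.length - 1].hash : '';
  chain.push({ data, previousHash, hash: hashBlock(data, previousHash) });
  return chain;
}

// Returns the index of the first block that fails validation, or -1 if all pass.
function firstInvalidIndex(chain) {
  for (let i = 0; i < chain.length; i++) {
    const block = chain[i];
    const expectedPrevious = i === 0 ? '' : chain[i - 1].hash;
    const linkBroken = block.previousHash !== expectedPrevious;
    const hashWrong = block.hash !== hashBlock(block.data, block.previousHash);
    if (linkBroken || hashWrong) return i;
  }
  return -1;
}

const chain = [];
addBlock(chain, { from: 'A', to: 'B', amount: 30 });
addBlock(chain, { from: 'B', to: 'C', amount: 10 });
addBlock(chain, { from: 'C', to: 'A', amount: 5 });
const beforeTampering = firstInvalidIndex(chain);

// The bad guy edits block 1: its stored hash no longer matches its data.
chain[1].data.amount = 999;
const afterEdit = firstInvalidIndex(chain);

// He recomputes block 1's hash to cover his tracks: now block 2's link is broken.
chain[1].hash = hashBlock(chain[1].data, chain[1].previousHash);
const afterRehash = firstInvalidIndex(chain);

console.log(beforeTampering, afterEdit, afterRehash);
```

Fixing up one block just pushes the problem to the next one, so hiding a change means rewriting every block after it.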

Because this chain of data is entirely valid or entirely invalid, it is easy for a big group of people to share the entire thing (or even just the last record since it is known to be valid) and all agree on every single change to it.

I watched a TED Talk on the subject and hearing Don Tapscott provide some potential applications of a blockchain really helped to solidify my understanding.

One of his examples was companies like Uber and Airbnb, which are supposedly highly decentralized and peer-to-peer. They’re not really though, because you still end up with a single company in the middle acting not only as the app developer and facilitator, but more importantly acting as the central source of not only all business logic, but also all data and all trust.

In my current understanding, were blockchain to be implemented today in peer-to-peer businesses like these, it would not spell the end of a company like Airbnb. Rather it would mean that their role would be reduced to that of a service provider and not a trust bank. Each stay would be a transaction between a traveler and a host as it is today, but the exchange of dollars and (arguably as important) the exchange of reputation would be direct transactions between two parties.

In addition to a basic blockchain where static values are the content of each block, you have Ethereum. This project has devised a construct that, instead of building up a sort of database of blocks, apparently builds up a virtual computer. Their website describes it well, calling it a decentralized platform that runs smart contracts: applications that run exactly as programmed without any possibility of downtime, censorship, fraud, or third party interference. As I understand it currently, it’s as if each block is not just static data, but rather logic. It can be used to create rules such as “the 3rd day of each month I transfer $30 to party B”. Check out this reddit post for some well worded explanations of Ethereum.

If you have an Azure account, you can already play with Ethereum and some other blockchain providers. That’s exactly what I’m doing :)

Comment below if you’re playing with this and want to help me and others come to understand it better.

Cabin Escape

The Idea

I worked together with a few fine folks from my team on a very fun hackathon project, and I want to tell you about it.

The team was myself (@codefoster), Jennifer Marsman (@jennifermarsman), Hao Luo (@howlowck), Kwadwo Nyarko (@kjnyarko), and Doris Chen (@doristchen).

Here’s our team…

The hackathon was themed on some relatively new products - namely Cognitive Services and the Bot Framework. Additionally, some members of the team were looking for some opportunity to fine tune their Azure Functions skills, so we went looking for an idea that included them all.

I’ve been mulling over the idea of using some of these technologies to implement an escape room, which as you may know are very popular nowadays. If you haven’t played an escape room, perhaps you want to find one nearby and give it a try. An escape room is essentially a physical room that you and a few friends enter and are tasked with exiting in a set amount of time.

Exiting, however, is a puzzle.

Our escape room project is called Cabin Escape and the setting is an airplane cabin.

Game Play

Players start the game standing in a dark room with a loud, annoying siren and a flashing light. The setting makes it obvious that the plane has just crash landed and the players’ job is to get out.

Players look around in haste, motivated by the siren, and discover a computer terminal. The terminal has some basic information on the screen that introduces itself as CAI (pronounced like kite without the t) - the Central Airplane Intelligence system.

A couple of queries to CAI about her capabilities reveal that the setting is in the future and that she is capable of understanding natural language. And as it turns out, the first task of silencing the alarm is simply a matter of asking CAI to silence the alarm.

What the players don’t know is that the ultimate goal is to discover that the door will not open until the passenger manifest is “validated,” and CAI will not validate the manifest until all passengers are in their assigned seats. The only problem is that passengers don’t know what their assigned seats are.

The task then becomes a matter of finding all of the hidden boarding passes that associate passengers with their seats. Once the last boarding pass is located and the last passenger takes his seat, cameras installed in the seat backs finish reporting to the system, the passenger manifest is validated, and the exit door opens.

Architecture

Architectures of old were almost invariably n-tiered. We software developers are all intimately familiar with that pattern. Times they are a changing! An n-tier monolithic architecture may accomplish your feat, but it won’t scale in a modern cloud very well.

The architecture for Cabin Escape uses a smattering of platform offerings. What does that mean? It means that we’re not going to ask Azure to give us one or more computers and then install our code on them. Instead, we’re going to compose our application out of a number of higher level services that are each independent of one another.

Let’s take a look at an overall architecture diagram.

In Azure, we’re using stateless and serverless Azure Functions for business logic. This is quite a paradigm shift from classic web services, which are often implemented as an API.

APIs map to nodes (servers) and whether you see it or not, when your application is running, you are effectively renting that node.

Functions, on the other hand do not conceptually map to servers. Obviously, they are still running on servers, but you don’t have to be in the business of declaring how Functions’ nodes scale up and down. They handle that implicitly. You also don’t pay for nodes when your function is not actually executing.

The difficult part in my opinion is the conceptual change where, with a serverless architecture, your business logic is split out into multiple functions. At first it’s jarring. Eventually, though, you start to understand why it’s advantageous.

If any given chunk of business logic ends up being triggered a lot for some reason and some other chunk doesn’t, then dividing those chunks of logic in different functions allows one to scale while the other doesn’t.

It also starts to feel good from an encapsulation standpoint.

Besides Functions, our diagram contains a DocumentDB database for state, a bot using the Bot Framework, LUIS for natural language recognition, and some IoT devices installed in the plane - some of which use cameras.

Cameras and Cognitive Services

The camera module is developed with Microsoft Cognitive Services, Azure Functions, Node.js, and TypeScript. The module performs face training, face detection, and identification, and it notifies the Azure Functions service. It determines whether the right person is seated, sends that notification back to the Azure Functions service, and the controller then decides on further action.

The following diagram describes the interaction between the Azure Functions services, Microsoft Cognitive Services, Node server processing, and the client.

Cloud Intelligence and Storage

We use Azure Functions to update and retrieve the state of the game. Our Azure Functions are stateless; however, we keep the state of every game stored in DocumentDB. In our design, every Cabin Escape room has its own document in the state collection. For the purpose of this project, we have one escape room that has id ‘officialGameState’.

We started by creating a ‘GameState’ DocumentDB database, and then creating a ‘state’ collection. This is pretty straight forward to do in the Azure portal. Keep in mind you’ll need a DocumentDB account created before you create the database and collection.

After setting up the database, we wrote our Azure Functions. We have five functions used to update the game state, communicate with the interactive console (Central Airplane Intelligence - Cai for short), and control the various systems in the plane. Azure Functions can be triggered in various ways, ranging from timed triggers to blob triggers. Our functions were triggered either by HTTP or by a timer. Along with triggers, Azure Functions can accept various inputs and outputs configured as bindings. Below are the functions in our cabinescape function application.

- GamePulse:

* Retrieves the state of the plane alarm, exit door, smoke, and overhead bins and sends commands to a Raspberry Pi

* Triggered by a timer

* Inputs from DocumentDB

- Environment:

* Updates the state of oxygen and pressure

* Triggered by a timer

* Inputs from DocumentDB

* Outputs to DocumentDB

- SeatPlayer:

* Checks to see if every player is in their seat

* Triggered by HTTP request

* Inputs from DocumentDB

* Outputs to DocumentDB and HTTP response

- StartGame:

* Initializes the state of a new game

* Triggered by HTTP request

* Outputs to DocumentDB and HTTP response

- State:

* Updates the state of the game

* Triggered by HTTP request

* Inputs from DocumentDB

* Outputs to DocumentDB
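To give a feel for how one of these might look, here is a rough sketch of a SeatPlayer-style function in the callback style of the Node.js programming model for Azure Functions. This is not the team's actual code. The binding names (gameStateIn, gameStateOut), the shape of the state document, and the request body are all assumptions, and the context object is stubbed so the sketch runs locally.

```javascript
// Hypothetical SeatPlayer logic. In a function app this would be the
// module.exports of the function's index.js, with bindings set in function.json.
function seatPlayer(context, req) {
  const state = context.bindings.gameStateIn; // DocumentDB input (assumed name)
  const { seat, player } = req.body;

  if (!state.seats[seat]) {
    context.res = { status: 400, body: { error: 'Unknown seat ' + seat } };
    context.done();
    return;
  }

  // Record who sat down, then check every seat against its assignment.
  state.seats[seat].occupiedBy = player;
  const everyoneSeated = Object.values(state.seats).every(
    (s) => s.occupiedBy === s.assignedTo
  );
  state.manifestValidated = everyoneSeated;

  context.bindings.gameStateOut = state; // DocumentDB output (assumed name)
  context.res = { status: 200, body: { everyoneSeated } };
  context.done();
}

// Local stand-in for the runtime's context object and an incoming request.
const context = {
  bindings: {
    gameStateIn: {
      id: 'officialGameState',
      seats: {
        '1A': { assignedTo: 'jennifer', occupiedBy: 'jennifer' },
        '1B': { assignedTo: 'hao', occupiedBy: null },
      },
    },
  },
  res: null,
  done() {},
};
seatPlayer(context, { body: { seat: '1B', player: 'hao' } });
console.log(context.res);
```

The function itself holds no state between calls. Everything it knows arrives through the input binding and leaves through the output binding.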

A limitation we encountered with timer based triggers is the inability to turn them on or off at will. Our timer based functions are on by default, and are triggered based on an interval (defined with a cron expression).

In reality, a game is not being played 24/7. Ideally, we want the timer based functions to turn on when a game starts and continue on a time interval until the game end condition is met.

The Controller

An escape room is really just a ton of digital flags all over the room that either inquire or assert the binary value of some feature.

- Is the lavatory door open? (inquire)

- Turn the smoke machine on (assert)

- Is the HVAC switch in the cockpit on? (inquire)

- Turn the HVAC on (assert)

It’s quite simply a set of inputs and outputs, and their coordination is a logic problem.

All of these logic bits, however, have to exist in real life - what I like to call meat space, and that’s the job of the controller. It’s one thing to change a digital flag saying that the door should be open, but it’s quite another to actually open a door.

The controller in our solution is a Raspberry Pi 3 with a Node.js app that does two things: 1) it reads and writes the logical values of the GPIO pins and 2) it dynamically creates an API for all of those flags so they can be manipulated from our solution in the cloud.
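One way to picture the "dynamically creates an API" part is a route table generated from a list of flags. The sketch below is only an illustration. The flag names, the route format, and the in-memory pins object (standing in for real GPIO reads and writes) are all assumptions, and no HTTP server is started.

```javascript
// In-memory stand-in for the GPIO pins. 'in' flags can only be inquired,
// 'out' flags can also be asserted.
const pins = {
  alarm: { direction: 'out', value: 1 },
  smokeMachine: { direction: 'out', value: 0 },
  lavatoryDoor: { direction: 'in', value: 0 },
};

// Build one GET route per flag, plus a PUT route for each output flag.
function buildRoutes(pinMap) {
  const routes = {};
  Object.keys(pinMap).forEach((name) => {
    routes['GET /' + name] = () => ({
      status: 200,
      body: { name, value: pinMap[name].value },
    });
    if (pinMap[name].direction === 'out') {
      routes['PUT /' + name] = (body) => {
        pinMap[name].value = body.value ? 1 : 0; // a real controller would write the pin here
        return { status: 200, body: { name, value: pinMap[name].value } };
      };
    }
  });
  return routes;
}

// Look up the handler for a request; unknown routes get a 404.
function dispatch(routes, method, path, body) {
  const handler = routes[method + ' ' + path];
  return handler ? handler(body) : { status: 404, body: { error: 'No such flag' } };
}

const routes = buildRoutes(pins);
const silenced = dispatch(routes, 'PUT', '/alarm', { value: 0 });
const doorState = dispatch(routes, 'GET', '/lavatoryDoor');
const rejected = dispatch(routes, 'PUT', '/lavatoryDoor', { value: 1 });
console.log(silenced, doorState, rejected);
```

Adding a new flag to the pins object is enough to get its routes, which is the same "no wiring" idea as the dynamically loaded bot dialogs.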

To scope this project to a 3-day hackathon, the various outputs are going to be represented with LEDs instead of real motors and stuff. It’s meat space, but just barely. It does give everyone a visual on what’s going on in the fictional airplane.

The Central Airplane Intelligence (Cai)

This is a unique “Escape the Room” concept in that it requires a mixture of physical clues in the real world and virtual interaction with a bot. For example, when the team first enters the room, the plane has just “crashed” so there is an alarm beeping. This is pretty annoying, so people are highly motivated to figure out how to turn it off quickly. A lone console is at the front of the airplane, and the players can interact with it.

One of the biggest issues with bots is discoverability: how to figure out what the bot can do. Therefore, good bot design is to greet the user with some examples of what the bot can accomplish. In our case, the bot is able to respond to many different types of questions, which are mapped to our LUIS intents:

- What is the plane’s current status overall?

- How do I fix the HVAC system?

- What is the flight attendant code?

- How do I get out of here?

- How do I unlock the cockpit door?

- How do I open the overhead bins?

- How do we clear all this smoke?

- What is the oxygen level?

- How do I disable the alarm?

The bot (named “CAI” for Central Airplane Intelligence) was implemented in C# using LUIS. The code repository can be found at https://github.com/cabinescape/EscapeTheAirplaneBot.

A Developer Community in Boise, Idaho

I’m a sailor. I don’t sail much right now, because I don’t have time, but I still identify as a sailor.

One of the best things about sailing is the marina community. Folks on the docks are amazingly helpful and friendly. You rarely come in to dock without other boaters offering to grab a line and help you land.

There’s good community in software development too. Sure, there are some bad eggs, but overall, the development community is strong. When I meet new developers trying to learn or new graduates trying to land a job, I always recommend they find a few local meetups to visit. Then pick one or two and attend every month.

Before long, they’re rubbing shoulders with other developers, learning things, figuring out what the web front-end flavor of the week is, and even finding new job roles.

Boise, Idaho has a strong developer community in the Boise .NET Developers User Group (NETDUG).

I fly out to Boise now and then to teach and learn from these fine folks who are fortunate enough to live and work in a beautiful area of the country while still working software development jobs.

Last night I talked to the group about the Microsoft Bot Framework in a session I called Bring on the Bots.

As I told the group, I was none too excited about bots when they were first mentioned at Build 2016, but I’ve since seen the future and realized that digitizing conversation is going to be a big part of it.

So, here’s to all the software developers in Boise and specifically those in NETDUG. And here’s to NETDUG leaders like @TheScottNichols and @brianlagunas.

If you’re anywhere near Boise, Idaho in March, do know that the Boise Code Camp is a real blast and pretty much a requisite for coders of all breeds.

There’s also a Visual Studio 2017 Launch Party the morning of March 9 at the Microsoft office that you’re all welcome to. Hurry because space is limited.