
Azure Options for Stream Processing

Overview

If you’re not already familiar with the concept of data streaming and the resources available in Azure on the topic, then please visit my article Data Streaming at Scale in Azure and review that first.

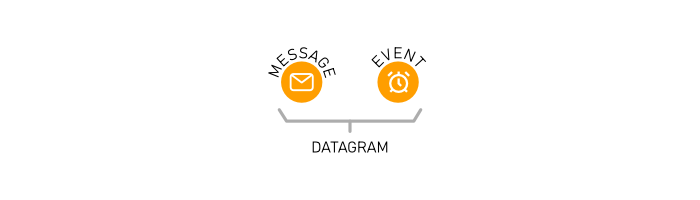

In short, data streaming is the movement of datagrams (events and messages) as a component of a software solution, and Azure offers Storage Queues, Service Bus Queues, Service Bus Topics, Event Hubs, and Event Grid as complementary components of a robust solution.

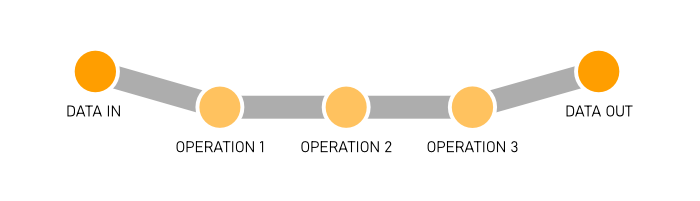

Data streaming is a pipeline, and very generally a pipeline looks like this…

Data comes into our solution, we perform operations on it, and then it reaches some terminal state - storage, a BI dashboard, or whatever.

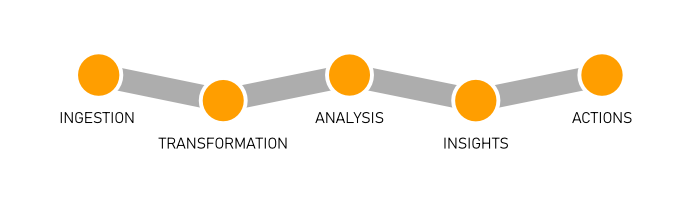

More specifically, however, in many enterprise scenarios that pipeline looks more like this…

Ingestion

Ingestion is simply getting data into the system. Often, this means moving bits from the edge (your location) to the cloud.

A natural fit for the ingestion step is Event Hubs. It’s excellent at that. Depending on the nature of the datagrams, however, you could use Service Bus Queues or Topics. You could use Storage Queues. You could even just use a Function with an HTTP trigger or a traditional web service if HTTP works in your case. There are a lot of ways to get data into the cloud, but there are only so many protocols.

Transformation

Transformation is merely changing the data shape to conform to the needs of the solution. It’s extremely common since systems that originate data tend to be as verbose as possible to make sure all information is captured.
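Here’s a minimal sketch of that kind of reshaping in Python. The verbose input layout and the field names (`device`, `readings`, `temperatureC`, and so on) are made up for illustration; the point is only that the transformation projects a wordy datagram down to the few fields the rest of the solution needs.

```python
def transform(raw: dict) -> dict:
    """Project a verbose datagram down to the fields the solution needs.

    The input layout is a hypothetical example, not a real device schema.
    """
    return {
        "deviceId": raw["device"]["id"],
        "temperatureC": raw["readings"]["temperature"]["value"],
        "timestamp": raw["timestamp"],
    }


verbose = {
    "device": {"id": "sensor-7", "firmware": "1.4.2", "location": "dock 3"},
    "readings": {"temperature": {"value": 21.5, "unit": "C", "quality": "good"}},
    "timestamp": "2020-01-01T00:00:00Z",
    "diagnostics": {"uptimeSeconds": 86400},
}
compact = transform(verbose)
```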

One of the first tools a pipeline might employ is Azure Stream Analytics (ASA). ASA is good at transforming data and performing limited analysis. Stream Analytics projects one stream into another that is filtered, averaged, grouped, windowed, or more and it outputs the resulting stream one or more times.

ASA is very good at streams, but you still have to think about whether or not it’s the right tool for data transformation. Often, pumping data streams through a Function does the job, meets your solution’s performance requirements, and may make for a more friendly development environment and an easier management and operations story.

Analysis, Insights, and Actions

Now I’m going to lump the remaining three into one category. They’re often (I’m generalizing) solved with processing of some kind.

Analysis is simply studying the data in various ways, insights are what we learn about what the data means for the future of the business, and actions are the concrete steps we take (guided by the insights) to steer the business and effect positive change.

These steps require either some kind of managed integration (like Logic Apps or Flow) or a custom point of compute that gets triggered every time a datagram is picked up.

This custom point of compute could be your own process hosted either on a virtual machine or in a container or it could be an Azure Function.

The bulk of data analysis in years past has been retrospective - data is recorded and some weeks, months, or even years later, data scientists get a chance to look at it in creative ways to see what they can learn. That’s not good enough for modern business, which moves faster and demands more. We need to do analysis on the fly.

Functions

An Azure Function is simply a function written in your language of choice, deployed to the cloud, possibly wired up to a variety of standard inputs and outputs, and triggered somehow so that the function runs when it’s supposed to.

Any given Function might be a serial component of your data pipeline so that every datagram that comes through passes through it. Or it might be a parallel branch that fires without affecting your main pipeline.

Statelessness

Functions are stateless.

Imagine meeting with a person that has no memory, and then meeting with them again the next day. You’d have to bring with you to that meeting absolutely all of the context of whatever you were working on - including introductions!

If you were in the habit of meeting with people like that, you would be wise to make a practice of agreeing with them on a storage location where you could record everything you worked on. Then you would have to actually bring all of the paperwork with you each time.

Functions are like that. Each time you call them it’s a fresh call.

If you write a Function that doesn’t need any state - say to add a timestamp to any message it receives - then you’re as good as done. If you do need state, however, you have a couple of options.
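To make the stateless case concrete, here’s a small sketch in plain Python (not the Azure Functions programming model). The function name `add_timestamp` and the `processedAt` field are my own placeholders. Everything the function needs arrives in its arguments, and nothing is remembered between calls.

```python
from datetime import datetime, timezone
from typing import Optional


def add_timestamp(message: dict, now: Optional[datetime] = None) -> dict:
    """Return a copy of the message with a processing timestamp added.

    No state is kept between calls: the result depends only on the inputs
    (and the clock, which can be injected for testing).
    """
    stamp = (now or datetime.now(timezone.utc)).isoformat()
    return {**message, "processedAt": stamp}
```

Because the input message is copied rather than modified, calling the function twice with the same message never leaks anything from the first call into the second.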

State

(storage)

(durable functions)

(durable entities)

Scaling Strategy

The whole point of Functions is scale, so you’d better get a deep understanding of Functions’ behavior when it comes to scaling. The Azure Functions scale and hosting article on Microsoft docs is an excellent resource.

If you’re using a trigger to integrate with one of the data streaming services we’re talking about, you’re using a non-HTTP trigger, so pay attention to the note under Understanding scaling behaviors. In that case, new instances are allocated at most every 30 seconds.

If you’re expecting your application to start out with a huge ingress of messages and require a dozen instances right off the bat, keep in mind that it won’t actually reach that scale level for 6 minutes (12 x 30s).
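That arithmetic is easy to play with. The sketch below uses the same simple model as the paragraph above (one new instance per allocation interval, counting from zero), which is an assumption on my part; real scale-out depends on your hosting plan and on what the scale controller decides.

```python
def seconds_to_reach(instances: int, allocation_interval_s: int = 30) -> int:
    """Estimate the time to reach a given instance count.

    Assumes at most one new instance per allocation interval, starting
    from zero instances. This is a rough model, not a guarantee.
    """
    return instances * allocation_interval_s


minutes_for_a_dozen = seconds_to_reach(12) / 60
```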

Triggers

Most of the work of wiring your data stream up to Functions happens in the trigger. The trigger is the code that determines just how Functions gets new messages. It contains a few configuration options that attempt to fine tune that behavior, but at the end of the day if that behavior is not what you want, you’ll have to rely on another trigger, since custom triggers are not supported.

You might trigger your Function with a clock so that it fires every 15 minutes, or you might trigger it whenever a database record is created, or as in the topic at hand, whenever a datagram is created in your messaging pipeline.

Let’s focus in here on Functions that are reacting to our data streaming services: Storage Queues, Service Bus Queues, Service Bus Topics, and Event Hubs.

Storage Queue Trigger

The Storage Queue Trigger is as simple as they come. You just wire it up to your Storage Queue by giving it your Queue’s name and connection string. The trigger gives you a few metadata properties, though, that you can use to inspect incoming messages. Here they are…

- QueueTrigger gives you the payload of the message (if it’s a string)

- DequeueCount tells you how many times a message has been pulled out of the queue

- ExpirationTime tells you when the message expires

- Id gives you a unique message ID

- InsertionTime tells you exactly when the message made it into the queue

- NextVisibleTime is the next time the message will be visible

- PopReceipt gives you the message’s “pop receipt” (a pop receipt is like a handle on a message so you can pop it later)

There are certain behaviors baked into all Function triggers. You have to study your trigger before you use it, so you know exactly how it’s going to behave and whether you can configure it otherwise.

One of the behaviors of the Queue trigger is retrying. If your Function pulls a message off of the queue and fails for some reason, it keeps trying, up to 5 tries total. After that, it moves the message to a separate queue whose name is the original queue’s name with “-poison” appended, so you can log it or use some other logic. Messages are retried with what’s called an exponential back-off. That means the first time it retries a failed message it might wait 10 seconds, the next time it waits 30, then 1 minute, then 10. I don’t know what the actual values are, but that’s the idea.
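Here’s an in-memory sketch of that retry-then-poison behavior. It is not the trigger’s actual implementation: the names `drain` and `new_message` are made up, the queues are plain Python deques, and the back-off delay between retries is left out entirely.

```python
from collections import deque

MAX_DEQUEUE_COUNT = 5  # the "5 tries total" described above


def new_message(body):
    """Wrap a payload with the dequeue counter the sketch needs."""
    return {"body": body, "dequeue_count": 0}


def drain(queue, poison_queue, handler, max_dequeue_count=MAX_DEQUEUE_COUNT):
    """Process messages until the queue is empty.

    A message whose handler raises is put back for another try. Once it
    has been dequeued max_dequeue_count times it goes to the poison queue.
    """
    while queue:
        message = queue.popleft()
        message["dequeue_count"] += 1
        try:
            handler(message["body"])
        except Exception:
            if message["dequeue_count"] >= max_dequeue_count:
                poison_queue.append(message)
            else:
                queue.append(message)  # a real trigger would wait before retrying
```

A handler that always fails for a particular message will see that message exactly five times before it lands in the poison queue, while healthy messages pass through once.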

The Queue trigger also has some particular behavior with regard to batching and concurrency. If the trigger finds a few messages in the queue, it will grab them all in a batch and then use multiple Function instances to process them. It’s very important to understand what the trigger is doing here, because there’s a decent chance your solution will benefit from some optimization. You can configure things like the batch size and threshold in the trigger configuration (in the host.json).
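As a rough way to reason about those two settings: my understanding of the documentation is that a single instance can be working on up to the batch size plus the new-batch threshold at once, with defaults of 16 and half the batch size. Treat those defaults as assumptions and check the host.json reference for your runtime version. The function name below is hypothetical.

```python
from typing import Optional


def max_concurrent_messages(batch_size: int = 16,
                            new_batch_threshold: Optional[int] = None) -> int:
    """Upper bound on messages one instance processes concurrently.

    Assumes the trigger fetches a new batch once in-flight messages drop
    to new_batch_threshold, so the peak is batch_size + new_batch_threshold.
    """
    if new_batch_threshold is None:
        new_batch_threshold = batch_size // 2  # assumed default
    return batch_size + new_batch_threshold
```

Setting the batch size to 1 and the threshold to 0 in this model gives strictly one message at a time per instance, which is the usual starting point when ordering or downstream rate limits matter.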

The same Queue trigger can also be configured as an output binding in Functions so you can write queue messages as a result of your Function code.

I covered the Storage Queue trigger here in pretty good detail, but the remaining triggers have a lot in common with it conceptually.

Service Bus Trigger

The Service Bus Trigger takes care of triggering a Function when a new message lands in your Service Bus Queue or Topic. There are plenty of nuances that we’ve uncovered in this trigger, though, so it’s worth spending some time looking at the trigger’s behavior in various configurations.

Take a look at the metadata properties that you get inside a Function that’s been triggered by the Service Bus Trigger…

- DeliveryCount

- DeadLetterSource

- ExpiresAtUtc

- EnqueuedTimeUtc

- MessageId

- ContentType

- ReplyTo

- SequenceNumber

- To

- Label

- CorrelationId

You can read about all of those properties in the docs, but I’ll highlight a couple.

(highlight a couple)

(session enabled queues and subscriptions including the not-necessarily-intuitive scaling behavior around it)

(reference this)

Event Hub Trigger

(properties)

(differentiate from service bus behavior)

Custom Process

(containers)

(reactive-x)

Measuring Latency

link to the other blog post about measuring latency

Data Streaming at Scale in Azure

Overview

Many modern enterprise applications start with data generated by real world events like human interactions or regular sensor readings, and it’s often the modern developer’s job to design a system that will capture that data, move it quickly through some data pipeline, and then turn it into real insights that help the enterprise make decisions to improve business.

I’m going to generalize and call this concept data streaming. I’ll focus on streaming and will not cover things like data persistence or data analysis. I’m talking about the services that facilitate getting and keeping your data moving and performing operations on it while it’s in transit.

Data streaming is an extremely common solution component, and there’s a myriad of software services that exist to fulfill it. In fact, there are so many tools, that it’s often difficult to know where to start. But fear not. The whole point of this article is to introduce you to Azure’s data streaming services.

Datagrams

When you’re streaming data, the payload is up to you. It might be very small like a heartbeat or very large like an entire blob. Often, streaming data is made up of messages or events. In this article, I’ll use the term datagram (lifted from Clemens Vasters’ article) to refer abstractly to any payload whether you would consider it a message or an event.

What exactly is the difference between an event and a message? The official Azure documentation contains an article called Choose between Azure messaging services - Event Grid, Event Hubs, and Service Bus that does a great job of explaining this, so read that first, and then here’s my take.

An event is a bit like a system level notification. You and I get notifications on our mobile devices because we want to know what’s going on, right?

The battery level just dropped to 15%. I want to know that so I can plug it in.

I just got a text message from Terry. I’ve been waiting to hear from her, so that’s significant.

Somebody outbid me on that auction item. I need to consider raising my bid.

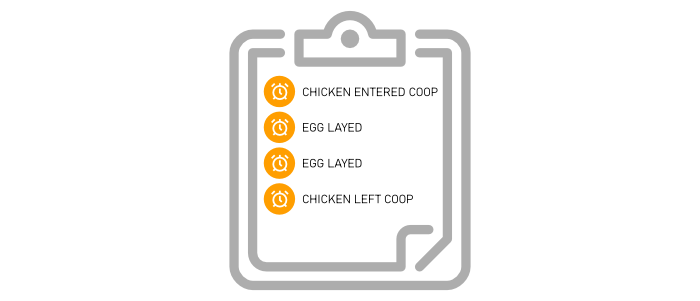

I might want events to be raised even if I don’t plan to respond to them right away too, because I might want to go back and study all of those events over time to make sense of them.

A set of events over time looks a bit like a history book, doesn’t it? You could react in real time as events occur, or you could get a list of all of last week’s events and you could essentially replay the entire week. Or you could do both!

The battery level event example I brought up is a pretty simple event, right? Sometimes, however, events are more complicated and domain-specific, like “Your battery level is 5% less than your average level this time of day.” That kind of event takes some state tracking and data analysis. It’s really helpful though.

This is what I call cascading events. The first-level events are somewhat raw - mostly just sensor readings or low-level events that have fired. The next level is some fusion or aggregation of those events that’s higher level and often more meaningful to an end user or business user - what we might call domain events. And, of course, events can cascade past 2 levels, and at the last level, it’s common to raise a notification - on a mobile device, a dashboard, or an email inbox.
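A tiny sketch of one cascade step, using the battery example: raw readings go in, and a higher-level domain event comes out only when a reading is well below what’s typical. The function name, the event shape, and the idea of passing the typical level in as a parameter are all simplifications I’m assuming here; a real version would track that average as state.

```python
def derive_domain_events(readings, typical_level, threshold=5):
    """Turn raw battery readings into domain events.

    Emits an event for each reading that is at least `threshold`
    percentage points below the typical level.
    """
    events = []
    for level in readings:
        shortfall = typical_level - level
        if shortfall >= threshold:
            events.append({"type": "BatteryBelowTypical",
                           "level": level,
                           "shortfall": shortfall})
    return events


domain_events = derive_domain_events([62, 58, 54], typical_level=60)
```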

Messages are different though.

Messages are more self-contained packages that relay some unit of information or a command complete with the details of that command.

I used the analogy of a history book to describe all of last week’s events. I suppose all of last week’s messages would be more like a playbook or a transaction log. Notice that my sample messages are more present tense and directive. That’s fairly typical for messages.

One of the defining characteristics of a message is that there is a coupling between the message producer and the consumer - that is, the producer has some expectation or even reliance on what the consumer will do with it. Clemens rightly states that with events “the coupling is very loose, and removing these consumers doesn’t impact the source application’s functional integrity”. So, you can use that as a test. If you stop subscribing to the produced datagrams, will something break? If so, you’re dealing with messages as opposed to events.

Data Streaming Services in Azure

Let’s start with Azure’s data streaming services.

Storage Queues

Not to be confused with Service Bus Queues, Azure’s Storage Queues are perhaps the most primitive and certainly the oldest queue structure available.

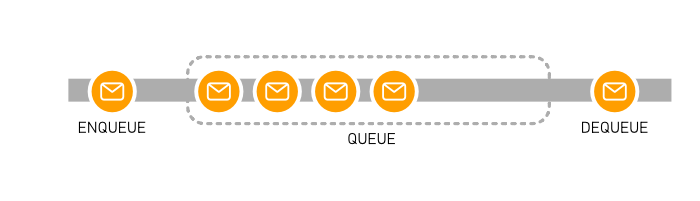

In case you’re not familiar with the core concept of a queue, it would be good to explain that. A queue is a data structure that follows a first-in first-out (FIFO) access pattern - meaning that if you put Thing A, Thing B, and then Thing C in (in that order), and then you ask the queue for its next item, it will give you Thing A - first in… first out.
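Here’s the same Thing A, Thing B, Thing C example using Python’s standard `collections.deque` as a stand-in for a queue. This only illustrates the FIFO access pattern, not anything specific to Storage Queues.

```python
from collections import deque

queue = deque()

# Put the items in, in order.
for item in ("Thing A", "Thing B", "Thing C"):
    queue.append(item)

# Ask the queue for its next item: first in, first out.
first = queue.popleft()
```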

If you studied computer science and you weren’t sleeping during your Data Structures class, then you already know about queues.

Queues are extremely handy in cloud-first applications where it’s common to use one working process to set aside tasks for one or more other working processes to pick up and fulfill. This decoupling of task production from task consumption is a pivotal concept in fulfilling the elasticity that cloud applications promise. Simply scaling your consumers up and down with your load allows you to pay exactly what you should and no more.

Storage Queues are not as robust as some of the other data streaming services in Azure, but sometimes they are simply all you need. Also, they’re delightfully inexpensive.

Service Bus Queues

Service Bus Queues are newer and far more robust than Storage Queues. There are some significant advantages including the ability to guarantee message ordering (there are some edge cases where Storage Queues can get messages out of order), role-based access, the use of the AMQP protocol instead of just HTTP, and extensibility.

To study the finer differences between Storage Queues and Service Bus Queues, read Storage queues and Service Bus queues - compared and contrasted.

Service Bus Topics

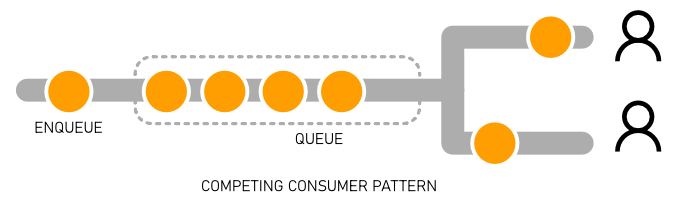

Queues are the solution when you want one and only one worker to take a message out of the queue and process it. Think about incoming pizza orders where multiple workers are tasked with adding toppings. If one worker puts the anchovies on a pizza then it’s done, and the other workers should leave it alone.

Topics are quite different. Topics are the solution when you have messages that are occurring and one or more parties are interested. Think about a magazine subscription where it’s produced one time, but multiple people want to receive a copy. You often hear this referred to as a “publish/subscribe” pattern or simply “pubsub”. In this case, messages are pushed into a topic and zero to many parties are registered as being interested in that topic. The moment a new message lands, the interested parties are notified and can do their processing.
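The magazine analogy can be sketched with a small in-memory class. This is not the Service Bus SDK: the `Topic` class and its `subscribe` and `publish` methods are hypothetical names, and each subscription is just a deque that receives its own copy of every published message.

```python
from collections import deque


class Topic:
    """A toy publish/subscribe topic with zero to many subscriptions."""

    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, name):
        """Register an interested party and return its private inbox."""
        inbox = deque()
        self._subscriptions[name] = inbox
        return inbox

    def publish(self, message):
        """Deliver one copy of the message to every subscription."""
        for inbox in self._subscriptions.values():
            inbox.append(message)
```

Contrast that with a queue, where taking a message out means nobody else gets it. Here, one subscriber reading its inbox has no effect on what the others see.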

When dealing with Service Bus Queues and Topics, you’ll hear some unique semantics. The terms At Most Once, At Least Once, and Exactly Once describe how many times a consumer is guaranteed to see a message and the nuances in how the service handles edge cases such as when it goes down while a message is in processing. Peeking at messages allows you to see what’s in the queue without actually receiving them. When a consumer receives a message in peek-lock mode, a lock is put in place to make sure other consumers leave it alone until it’s done. Finally, deadlettering is supported by Queues and Topics and allows messages to be set aside when, for whatever reason, consumers have been unable to process them.

Event Hubs

Now let’s take another view on processing data and look at events instead of messages.

Event Hubs is Azure’s solution for facilitating massive scale event processing. Its ability to get data into Azure is staggering. The feature page states “millions of events per second”.

My understanding of the differences between Service Bus and Event Hubs has come in waves. Here’s how I understand it now.

Service Bus (both Queues and Topics) is a bit more of a managed solution. That is, the service is applying some opinion about your solution. Opinions, as you may know, can make a service dramatically easier to implement, but they can also constrain us as solution developers by tying us to specific assumed scenarios.

There are a lot of things that Service Bus does for us that Event Hubs does not attempt to do. Event Hubs caches datagrams and keeps them for the configured time, but it doesn’t decorate them with any status, remember which have been processed, keep track of how many times a datagram has been accessed (dequeued in Service Bus parlance), lock those that have been looked at by any given consumer, or anything like that. It simply stores datagrams in a buffer and if the time runs out on a datagram in the buffer it drops it.

This puts the onus on an Event Hubs consumer to not only read events, but to figure out which ones have been read already.

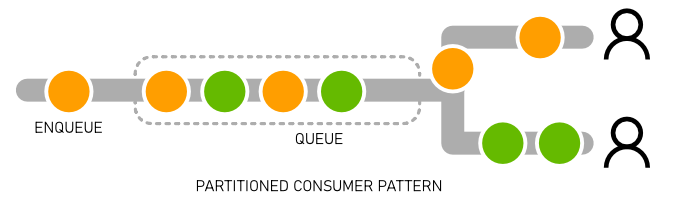

This lack of management switches us from a pattern called competing consumer to one called partitioned consumer. With competing consumers, the service has to spend a bit of time making sure the competition is fair and goes well. With partitioned consumers, there’s just a clean rule regarding who gets what. So, if you can assume that there is no competition in your processing then you don’t need all the cumbersome status checking, dequeue counting, locking, etc. That’s why Event Hubs is super fast.
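Here’s a toy model of the partitioned consumer idea. It is not the Event Hubs SDK, and the `PartitionedLog` and `PartitionConsumer` classes are hypothetical. The CRC32 hash is just a stand-in for whatever the real service uses to map a partition key to a partition. What matters is that the log never tracks who has read what; each consumer owns a partition and remembers its own offset.

```python
import zlib


class PartitionedLog:
    """An append-only buffer split into partitions."""

    def __init__(self, partition_count):
        self.partitions = [[] for _ in range(partition_count)]

    def send(self, partition_key, event):
        """Append the event to the partition chosen by the key's hash."""
        index = zlib.crc32(partition_key.encode("utf-8")) % len(self.partitions)
        self.partitions[index].append(event)
        return index


class PartitionConsumer:
    """Reads one partition and keeps track of its own position."""

    def __init__(self, log, partition_index):
        self.log = log
        self.partition_index = partition_index
        self.offset = 0  # the consumer's responsibility, not the service's

    def read_new(self):
        """Return events past the stored offset, then advance the offset."""
        events = self.log.partitions[self.partition_index][self.offset:]
        self.offset += len(events)
        return events
```

Reading does not remove anything from the log, so a second consumer could start at offset zero and replay the same partition from the beginning.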

Event Grid

Event Grid is newer than both Service Bus and Event Hubs, and it may not be readily apparent what its unique value offering is.

Start by reading Clemens Vasters’ excellent article Events, Data Points, and Messages - Choosing the right Azure messaging service for your data.

Clemens calls Event Grid “event distribution” as opposed to “event streaming”. I like that.

Event Grid is a managed Azure platform with deep integration into the whole suite of Azure resources that creates a broad distribution of event publishers and subscribers between Azure services and even into non-Azure services.

Event Grid is not as much of a streaming solution as Event Hubs is. It’s highly performant, but it’s not tuned for the throughput you would expect to see in an Event Hubs solution.

It’s also a bit of a hybrid because it’s designed for events, but it has some of the management and features you find in Service Bus like deadlettering and At Least Once delivery.

Stream Analytics

Together Service Bus, Event Hubs, and Event Grid make up the three primary datagram streaming platform services, but not far behind as far as central streaming services is Stream Analytics. Heck, it even has Stream in the name.

Stream Analytics uses SQL query syntax (modified to add certain streaming semantics like windowing) to analyze data as it’s moving.

Think about being tasked with counting the number of red Volkswagen vehicles that pass by some point on the highway. One crude approach would be to ask vehicles to pull over until a large parking lot is full, then perform the analysis, and then let those cars go while bringing new ones in. The obvious impact to traffic is analogous to the performance impact on a digital project - horrendous.

The better way is to let the cars go and just count them up as they move unfettered. This is what Stream Analytics attempts to do.
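To show the counting-as-they-pass idea outside of Stream Analytics, here’s a plain Python sketch of a tumbling window count. It is not ASA query syntax. The event shape (a timestamp in seconds paired with a dict describing the vehicle) and the function name are assumptions for illustration. Each event is looked at once, as it arrives, and dropped into the bucket for its fixed-size time window.

```python
from collections import Counter


def count_per_window(events, window_seconds, predicate):
    """Count matching events in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, payload) pairs. The
    result maps each window's start time to the count within that window.
    """
    counts = Counter()
    for timestamp, payload in events:
        if predicate(payload):
            window_start = int(timestamp // window_seconds) * window_seconds
            counts[window_start] += 1
    return dict(counts)


def is_red_vw(car):
    return car["make"] == "Volkswagen" and car["color"] == "red"


traffic = [
    (1, {"make": "Volkswagen", "color": "red"}),
    (5, {"make": "Volkswagen", "color": "blue"}),
    (61, {"make": "Volkswagen", "color": "red"}),
    (62, {"make": "Volkswagen", "color": "red"}),
    (70, {"make": "Ford", "color": "red"}),
]
red_vw_counts = count_per_window(traffic, 60, is_red_vw)
```

Because `events` can be any iterable, including a generator fed by a live source, nothing has to be parked in a lot before the counting starts.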

Measuring Latency

There are many points in the streaming process where delays may be introduced such as while…

- processing something in the producer before a message is sent

- composing a message

- getting the message to the streaming service

- getting the message enqueued and ready for pickup

- getting the message to the consumer

- processing the message
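One way to see where the time goes is to stamp the datagram at each of those points and subtract neighbors. The stage names below are labels I made up to match the list above, and the timestamps are in milliseconds. Keep in mind that stamps taken on different machines are only comparable if their clocks are synchronized.

```python
STAGES = ["produced", "composed", "sent", "enqueued", "received", "processed"]


def stage_latencies(timestamps):
    """Return the elapsed time between each pair of consecutive stages.

    `timestamps` maps every stage name in STAGES to a time in milliseconds.
    """
    return {
        f"{earlier}->{later}": timestamps[later] - timestamps[earlier]
        for earlier, later in zip(STAGES, STAGES[1:])
    }


sample = {"produced": 0, "composed": 2, "sent": 3,
          "enqueued": 40, "received": 55, "processed": 60}
latencies = stage_latencies(sample)
```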

Other Mentionable Azure Data Services

There are a few other services in Azure that have more distant relationships to the concept of data streaming.

Data services and the often-nuanced differences between one another get truly dizzying. I have so much respect for a data expert’s ability to simply choose the right product for a given solution.

I have to mention IoT Edge and IoT Hub. In the wise words of Bret Stateham “IoT is a data problem waiting to happen”. IoT Hub has an Event Hub endpoint that allows it to integrate with other data services in Azure.

Data Lake is a great place to pump all of your structured or unstructured data to figure out what you’ll do with it next. A data lake is what you find at the end of a data stream - just like IRL!

Databricks gives you the easiest Apache Spark cluster ever. With it you get a convenient notebook interface so you can collaborate and iterate on your data analysis strategies. Databricks is certainly a streaming solution, so if you’re wondering why I didn’t compare it more directly next to Service Bus and Event Hubs, it’s simply because it’s a very different beast and frankly I’m not overly familiar with it.

Power BI is a business intelligence platform for visualizing data back to humans so that key decisions can be made. It’s worth a mention because it’s capable of receiving data streams and even updating visuals in near real-time.

When your data has been ingested, stored, analyzed, and trained, you drop it into SQL Data Warehouse - a column store database for big data analysis. Not much streaming at play at this stage of the solution, but it’s worth a mention.

Conclusion

I did not bring the conclusion and closure to this research into streaming that I would have liked, but I wanted to get all of this work published in case it’s helpful.

If this is your debut into the concept of data streaming, then welcome to a rather interesting and fun corner of computer science.