Posts tagged with "productivity"

Semantic Selections and Why They Matter

I’m a big fan of at least two things in life: writing code and being productive. On matters involving both I get downright giddy, and one such matter is semantic selection. VS Code calls them smart selections, but whatever.

Semantic selection is overlooked by a lot of developers, but it’s a crying shame to overlook something so helpful. It’s like what comedian Brian Regan says about getting to the eye doctor.

Semantic selection is a way to select the text of your code using the keyboard. Instead of treating every character the same, however, semantic selection uses insights regarding the symbols and other code constructs to (usually) select what you intended to select with far fewer keystrokes.

I used to rely heavily on this feature provided by ReSharper way back when I used heavy IDEs and all ;)

Some may ask why you would depend on the keyboard for making selections when you could just grab the mouse and drag over your characters from start to finish? Well, it’s my strong opinion that going for the mouse in an IDE is pretty much always a compromise - of your values at least but usually of time as well. That journey from keyboard to mouse and back is like a trans-Pacific flight.

I can’t count the number of times I’ve watched over the shoulder as a developer carefully highlighted an entire line of text using the mouse and then jumped back to the keyboard to hit BACKSPACE. Learning the keyboard shortcut for deleting a line of text (CTRL+SHIFT+K in VS Code) can shave minutes off your day.

Without features like semantic selection, however, selecting text using the keyboard alone can be arduous. For a long time, we’ve had SHIFT to make selections and CTRL to jump by word, but it’s still time consuming to get your cursor to the start and then to the end of your intended selection.

To fully convey the value of semantic selection, imagine your cursor is just after the last n of the currentPerson symbol in this code…

function getPersonData() { |

Now imagine you want to change currentPerson to currentContact. With smart selection in VS Code, you would…

- Tap the left arrow one time to get your cursor into the currentPerson symbol (instead of on the trailing comma)

- Hit SHIFT+ALT+RIGHT ARROW to expand the selection

Now, notice what got highlighted - just the “Person” part of currentPerson. Smart selection is smart enough to know how camel case variable names work and just grab that. Now simply typing the word Contact will give you what you wanted.

What if you wanted to replace the entire variable name, changing currentPerson to, say, nextContact? For that you would simply hit SHIFT+ALT+RIGHT ARROW one more time to expand the selection by one more step. Now the entire variable is highlighted.
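To make those first two expansion steps concrete, here is a minimal sketch of how a camelCase-aware selection could be computed. It is only an illustration of the idea, not VS Code's implementation: the function names (`identifierAt`, `subwordAt`, `expansions`) are hypothetical, and the sample line of code is made up.

```javascript
// Hypothetical sketch: expand a selection from a cursor position, first to the
// camelCase sub-word under the cursor, then to the whole identifier.
// This is NOT how VS Code implements smart selection; it only shows the idea.

// Return [start, end) of the identifier containing index `pos`, or null.
function identifierAt(text, pos) {
  const isIdChar = (ch) => ch !== undefined && /[A-Za-z0-9_$]/.test(ch);
  if (!isIdChar(text[pos])) return null;
  let start = pos;
  let end = pos + 1;
  while (start > 0 && isIdChar(text[start - 1])) start--;
  while (end < text.length && isIdChar(text[end])) end++;
  return [start, end];
}

// Return [start, end) of the camelCase sub-word containing index `pos`, or null.
function subwordAt(text, pos) {
  const id = identifierAt(text, pos);
  if (!id) return null;
  const [idStart, idEnd] = id;
  // A sub-word starts at the identifier's first character or at an uppercase letter.
  let start = pos;
  while (start > idStart && !/[A-Z]/.test(text[start])) start--;
  let end = pos + 1;
  while (end < idEnd && !/[A-Z]/.test(text[end])) end++;
  return [start, end];
}

// Each entry represents one more press of the "expand selection" shortcut.
function expansions(text, pos) {
  return [subwordAt(text, pos), identifierAt(text, pos)]
    .filter(Boolean)
    .map(([s, e]) => text.slice(s, e));
}

const line = "load(currentPerson, options)"; // made-up sample line
const cursor = line.indexOf(",") - 1;         // on the final "n" of currentPerson
console.log(expansions(line, cursor));        // [ 'Person', 'currentPerson' ]
```

A real editor keeps going outward from there using the language's syntax tree, which is how it reaches the call, the statement, and the enclosing function.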

One more time to get the entire function signature.

Once more to get the function call.

Once more to get the entire statement on that line and again to get the whole line (which is quite common).

Once more to get the function body.

Once more to get the entire function.

That’s awesome, right?

Integrate this into your routine and shave minutes instead of yaks.

Happy coding!

The World's Quickest API

Sometimes you just need a quick API. Am I right?

I was working on a project recently and needed just that. I needed an API, and I didn’t want to spend a lot of time on it.

One of my strategies for doing this in days of old was to write up some code-first C# entities, reverse engineer the code to create an Entity Framework model, and serve it using OData. It was great and all that stuff is still around… still supported… still getting improved and released, so you could go that way, but that’s not how I made my last “instant API”.

My last one was even easier.

I found a node package called json-server that takes a JSON file and turns it into an API. Done. Period. End of story. A few minutes composing a JSON file if you don’t have one already and then a few lines of code to turn it into an API.

I also often use a node package called localtunnel that opens a local port up to the internet. Now I spend a few minutes writing a JSON file and 20 seconds opening a port and I have myself an API that I can share with the world.

For example, let’s say I want to write an app for dog walkers.

Here’s some dog data…

{ |

Now let’s turn that into an API stat! I’m going to be thorough with my instructions in case you are new to things like this.

I’ll assume you have Node.js installed.

Create yourself a new folder, navigate to it, and run npm init -y. That creates a package.json file for you. Then run touch index.js to create a file to start writing code in.

Now install json-server by running npm i json-server

The i is short for install. As of npm version 5, the --save argument is not necessary to add this new dependency to the package.json file. That happens by default.

Finally, launch that project in your IDE of choice. Mine is VS Code, so I would launch this new project by running code .

Edit the index.js file and add the following code…

const jsonServer = require('json-server') |

Let me describe what’s going on in those few lines of code.

The first line brings in our json-server package.

The second line creates a new server much like you would do if you were using Express.

Lines 3 and 4 inject some middleware, and the rest spins up the server on port 1337.

Note that line 4 points to data.json. This is where your data goes. You can make this simpler by simply specifying a JavaScript object there like this…

server.use(jsonServer.router({dogs: {name: "Rover"}}))

But I discovered that if you use this method, then the data is simply kept in memory and changes are not persisted to a file. If you specify a JSON file, then that file is actually updated with changes and persisted for subsequent runs of the process.

So that’s pretty much all there is to it. You run that using node . and you get a note that the API is running on 1337. Then you can use CURL or Postman or simply your browser to start requesting data with REST calls.

Use http://localhost:1337/dogs to get a list of all dogs.

Use http://localhost:1337/dogs/1 to fetch just the first dog.

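Or to create a new dog, use CURL with something like curl localhost:1337/dogs -X POST -H "Content-Type: application/json" -d '{ "id":4, "name":"Bob", ...}' (the Content-Type header tells the server to parse the body as JSON).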
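If you're curious what those three requests boil down to, here is a minimal in-memory sketch of the routing behavior. Treat it as an illustration under assumptions, not json-server's code: `handle` is a hypothetical function, the sample dogs are made up, and it skips persistence, filtering, and everything else json-server does for you.

```javascript
// Hypothetical sketch of REST-style routing over a plain object.
// Not json-server's implementation; the sample data below is made up.
const db = {
  dogs: [
    { id: 1, name: "Rover" },
    { id: 2, name: "Spot" },
  ],
};

// Resolve a request against the in-memory db and return { status, body }.
function handle(db, method, path, payload) {
  const [collectionName, id] = path.split("/").filter(Boolean);
  const collection = db[collectionName];
  if (!Array.isArray(collection)) return { status: 404, body: {} };

  if (method === "GET" && id === undefined) {
    return { status: 200, body: collection }; // GET /dogs
  }
  if (method === "GET") {
    // GET /dogs/1
    const item = collection.find((d) => String(d.id) === id);
    return item ? { status: 200, body: item } : { status: 404, body: {} };
  }
  if (method === "POST" && id === undefined) {
    collection.push(payload); // POST /dogs (kept in memory only)
    return { status: 201, body: payload };
  }
  return { status: 405, body: {} };
}

console.log(handle(db, "GET", "/dogs/1").body); // { id: 1, name: 'Rover' }
console.log(handle(db, "POST", "/dogs", { id: 4, name: "Bob" }).status); // 201
console.log(handle(db, "GET", "/dogs").body.length); // 3
```

Because the new dog only lands in the `db` object, it disappears when the process exits, which is the same trade-off described above for passing an object instead of a JSON file.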

Now you have a new API running on localhost, but what if you want to tell the world about it? Or what if you are working on a project with a few developer friends and you want them to have access? You could push your project to the cloud and then point them there, but even easier is to just point them to your machine using a tunneler like ngrok or Local Tunnel. I usually use the latter just because it’s free and easy.

To install Local Tunnel, run npm i -g localtunnel.

To open up port 1337 to the world use lt -p 1337 -s dogsapi and then point your developer friend that’s working on the UI to fetch dogs using http://dogsapi.localtunnel.me/dogs.

Be kind though. You set your API up in about 4 minutes and your UI dev probably hasn’t gotten Xcode running yet. :)

NPM Link

My buddy Jason Young (@ytechie) asked a question the other day that reminded me of a Node trick I learned some time ago and remember getting pretty excited about.

First, let’s define the problem.

If you are working on a Node project and you want to include an npm package as a dependency, you just install it, require it, and then do a fist pump.

If, however, you are in one of the following scenarios…

You find a great package on npm, but it’s not exactly what you want, so you fork it on GitHub and then modify it locally.

You are working on a new awesome sauce npm package, but it’s not done yet. But you want to include it in a node project to test it while you work on it.

…then you’re in a pickle.

The pickle is that if, in your consuming app, you’ve done an npm install my-awesome-package, then what you have is the version from the public registry.

The question is, how do you use a local version?

There are (at least) two ways to do it.

The first is to check your project (the dependency npm package that you’ve forked or you’re working on) in to GitHub and then install it in your consuming project using npm install owner/repo where owner is your GitHub account. BTW, you might want to npm remove my-awesome-package first to get rid of the one installed from the public registry.

This is a decent strategy and totally appropriate at times. I think it’s appropriate where I’ve forked a package and then want to tell my friend to try my fork even though I’m not ready to publish it to npm yet.

I don’t want to expound on that strategy right now though. I want to talk about npm’s link command (documentation).

The concept is this. 1) You symlink the dependency npm package into your global npm package store, and 2) you symlink that into your consuming project.

It sounds hard, but it’s dead simple. Here’s how…

- At your command line, browse to your dependency package’s directory.

- Run npm link

- Browse to your consuming project’s directory.

- Uninstall the existing package if necessary using npm remove my-awesome-package

- Finally, run npm link my-awesome-package

You’ll notice that the link isn’t instant and that will cause you to suspect that it’s doing more than just creating a symlink for you, and you’re right. It’s doing a full package install (and a build if necessary) of the project.

The cool part is that since the project directory is symlinked, you can open my-awesome-package in a new IDE instance and work away on it and when you run the consuming project, you’ll always have the latest changes.
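If you want to see why edits show up immediately, here is a small sketch that imitates just the linking step with a plain directory symlink in a temp folder. It's an approximation under assumptions: it skips npm's global package store and the install step entirely, and the folder names are made up for the demo.

```javascript
// Sketch: simulate the effect of `npm link` with a plain directory symlink.
// Assumption: this mimics only the linking step, not npm's global store or install.
const fs = require("fs");
const os = require("os");
const path = require("path");

// realpathSync avoids surprises when the temp dir itself sits behind a symlink.
const root = fs.realpathSync(fs.mkdtempSync(path.join(os.tmpdir(), "link-demo-")));
const pkgDir = path.join(root, "my-awesome-package"); // the dependency being developed
const appModules = path.join(root, "consumer", "node_modules");

fs.mkdirSync(pkgDir, { recursive: true });
fs.mkdirSync(appModules, { recursive: true });
fs.writeFileSync(path.join(pkgDir, "index.js"), "module.exports = 'v1';\n");

// "junction" only matters on Windows; other platforms ignore the type argument.
const linkPath = path.join(appModules, "my-awesome-package");
fs.symlinkSync(pkgDir, linkPath, "junction");

// Edit the dependency in its own directory...
fs.writeFileSync(path.join(pkgDir, "index.js"), "module.exports = 'v2';\n");

// ...and the consumer sees the change through the link, with no reinstall.
const seenByConsumer = fs.readFileSync(path.join(linkPath, "index.js"), "utf8");
console.log(fs.lstatSync(linkPath).isSymbolicLink()); // true
console.log(seenByConsumer.trim());                   // module.exports = 'v2';
```

The entry in node_modules is just a pointer back to your working copy, so there's never a second copy to fall out of date.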

And that’s that. I use this trick all the time now that I know it. Before I knew it, you’d see version counts like 1.0.87 in my published packages because I would roll the version and republish after every change. Oh, the futility!

The inverse is just as easy. When the latest my-awesome-package has been published to npm and you’re ready to use it, just visit your consuming package and run npm unlink my-awesome-package and then npm install my-awesome-package. Then go to your dependency package and simply run npm unlink. Done.

Growth Mindset

If you’re tuned in to technical topics, then you’ve likely heard my CEO Satya Nadella use the phrase Growth Mindset a few times.

I’ve been thinking about this phrase recently and realized that the first time I hear a phrase like this, my brain attempts to formulate a definition or understanding of it and then I have a tendency to stick to that definition every subsequent time I hear it even if it’s not entirely accurate or entirely what the speaker intended.

I wonder then, what does “growth mindset” actually mean, or what does Satya intend it to mean when he uses it to describe Microsoft?

After some pondering and reading, I’ve concluded that it means (to me at least) that a person…

- is always ready to learn something new

- assumes that their understanding of any topic can use some refinement regardless of how well-formulated it is already

- defines their success as having learned something new as opposed to having shown off what they already know

- constantly measures results against efforts and is ready to adjust efforts to maximize results

Hopefully that’s not too esoteric.

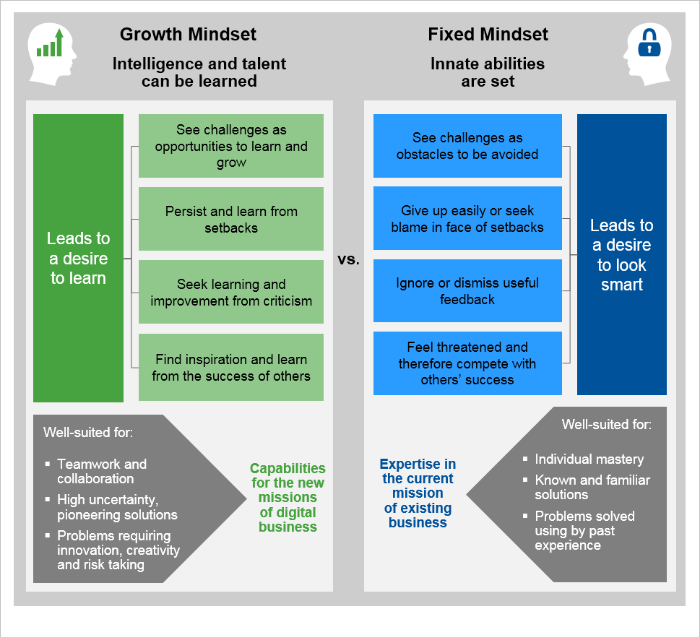

Gartner recently published an article on the topic where they used Microsoft as a positive example. In their article, they show this chart…

This graphic appears to indicate (and I would agree) that the defining characteristic of someone with a growth mindset is a desire to learn over a desire to look smart.

Most people would claim to value learning, but that’s the easy part. The hard part is that doing so often necessitates sacrificing looking smart… and that’s not so easy.

I have an example from my own life.

I used to work for Gateway Computers. It was a long time ago in 1998 when Gateway was just about the most likely choice for a home computer. I worked in a call center in Colorado Springs, CO.

At one point, I worked four 12-hour days (Thursday through Sunday) per week, and I remember being intellectually exhausted after about 8 hours of visualizing and solving users’ computer woes.

Side Story: At one point in my tenure at Gateway, I joined a group formed to experiment with what was called (if I remember right) Customer Chat Support (CCS). CCS was a strategy to increase our call center’s ability to handle support calls by having a moderator classify calls and send them to various rooms with up to 5 others and a single Gateway technician. Sometimes I was the moderator, but usually I was the tech and it was my job to solve 5 problems at once!

At another point in time, I was on the Executive Response Committee (ERC) and I responded to folks who had been courageous enough to write directly to Ted Waitt - the then CEO of the company. I talked to people with all kinds of troubles.

Behind the headsets in a tech support call center live together, as you might imagine, a lot of geeks. When the geeks were on break, we would chat and I quickly realized that there were two types: those who were attempting to establish that they were very knowledgeable, and those who were learning.

I didn’t realize it at the time, but I was learning about growth mindset and deciding that I would attempt personally to eschew the status of “one who knows,” and attempt instead to ask questions, discover, learn, and grow. There’s so little the guru status actually provides you anyway that is not an illusion.

John Wooden said, “Be more concerned with your character than your reputation, because your character is what you really are, while your reputation is merely what others think you are.” Similarly, Dwight L. Moody said “If I take care of my character, my reputation will take care of itself.”

Like someone who’s seeking to advance his character and giving up his reputation, one who genuinely seeks growth of knowledge will end up further along.

I’ve heard it said that - “Humility is not a lowly view of yourself. It’s a right view of yourself.”

We need to be ready to admit when we are knowledgeable about something, but just as ready to admit when we are not. It turns out that just being honest (something we hope we learned in kindergarten) about what we know or what we are capable of is the best tack.

I hope that’s encouraging and if necessary I hope it’s challenging too.

5 More Posts

Blogging is a chore. Writing in general is a chore. It’s not easy to formulate thoughts and put them into words. I have a ton of respect for prolific authors who generate volumes in their lifetime, because that’s a phenomenal feat.

I just looked at my blog statistics and I’m woefully low for the year. I have only blogged 14 times in 2016 so far. That’s barely over once per month, whereas I should be creating a post at least every week. I started off in 2012 with 87+ posts, and have tapered down to last year (2015) at only 19. I’m ashamed.

My desire is to write though. I write for you, my reader, but I certainly write for me too. It’s a great discipline and as any technical blog author knows, the main reason we post is to remind our future selves how to do something we at one point knew. Tell me you haven’t searched online for a technical answer only to find your own post from 2 years ago!

So here’s the deal. It’s currently December 8. There are about 23 days left in the year. My goal is to post at least 5 more times. Wait, this post counts, so 4 more times. Hoorah! That’s completely doable and will mean that I have not regressed from 2015.

Furthermore, it’s my commitment to increase 2017 significantly. I won’t put any official commitments on a number out there, but there’s no reason I can’t maintain a weekly cadence and produce >52 posts for 2017.

Here we go!

A Month of Soylent

I’ve been one month now on an (almost) exclusively Soylent diet.

Soylent is a drink engineered to be everything the human body needs for food. They don’t explicitly recommend that a person eats nothing besides Soylent, but they certainly don’t say it can’t be done, and there are plenty of folks that do it.

Soylent is billed as a “healthy, convenient, and affordable food”. In my experience, it’s quite easily all three. I’m pretty excited about this stuff.

Let me start by saying that I’m not a diet guy. I’ve made various attempts in the past to count my caloric intake, but I can’t remember the last time I imposed any kind of constraints on what I ate. In my opinion, counting calories sucks. It’s quite a chore stopping to think about what I’m eating, trying to find its rough equivalent in a table somewhere, recording it, and trying to time the intake so that I end the day at the right number.

@kennyspade first introduced me to Soylent a full year ago. He brought the topic up in response to my mention of Mark Zuckerberg wearing the same attire each day to alleviate decision fatigue. Soylent is similar in its ability to eliminate the decisions we make about what we eat.

Kenny handed me a bottle of Soylent’s premixed product that they dub version 2.0 to try. I don’t remember being particularly taken by the flavor or the texture, but I wasn’t turned off either.

Finally, at the beginning of this September, I decided to try Soylent longer term, and I set my sights pretty high. I decided to reduce my diet to almost exclusively Soylent and water.

Soylent is available as a powder or a drink with the former being cheaper and the latter being more convenient. I opted for convenience for now.

I expected to be in for a bit of a personal battle. You see, I’m not that disciplined when it comes to eating. I’m basically just not accustomed to thinking about what I eat. I like sugar, so when candy is offered or available, I take it. If a bowl or a bag is offered, I take a few. I work hard and stay up late, so I drink coffee - perhaps 3 cups a day. And I like rich food in large portions. I was never overly excessive, but I haven’t been thrilled with my fitness either.

Well, it’s October now, so let me fill you in on my September…

Soylent is Tastier than I Thought

I started out not minding the taste, texture, and overall experience of Soylent 2.0 at all, but I ended up, somehow, absolutely loving it. Soylent tastes a bit like the milk left in your bowl after you’ve eaten Frosted Flakes, but a bit less sweet. I have a bottle every 4 hours, and after about 2 I’m really looking forward to the next one… not because I’m starving - I’m not - but because I enjoy the drink. The mouth feel is excellent too. It’s a little bit grainy in a good way, and I much prefer that to the overly thin texture I find in the other (usually milk-based) protein or meal replacement drinks I’ve tasted.

Soylent is Quite Filling

I decided to eat four 400 kcal Soylent drinks each day at 7am, 11am, 3pm, and 7pm. That’s 4 hours between meals and 1600 calories a day. The product is engineered to be 20% of the food a person needs for a day - 20% of the calories and 20% of the vitamins and nutrients - but I wanted to reduce my intake a bit and trim some fat.

After 4 hours of fasting, my stomach is ready for some substance, and whether I take my time drinking a bottle or throw it back in a hurry (in the TSA security line!) it does satisfy. I don’t feel full per se as I pitch the bottle into the recycle bin, but I don’t feel hungry either. I feel perfectly content for at least 2 hours and then start slowly getting hungry as I approach the 4 hour mark.

If I decided on a standard 2000 kcal diet, that would be 5 Soylents a day and a meal every 3 hours. That would be even easier.
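For the fellow nerds, the schedule math can be sketched in a few lines. This assumes the 400 kcal bottle and the 7am to 7pm eating window from my schedule above; `mealPlan` is just a name I made up for the sketch.

```javascript
// Sketch of the meal-schedule arithmetic. The 400 kcal bottle and the
// 7am-7pm eating window come from the schedule described in the post;
// `mealPlan` is a hypothetical helper name.
function mealPlan(targetKcal, kcalPerBottle = 400, firstHour = 7, lastHour = 19) {
  const bottles = targetKcal / kcalPerBottle;
  // Bottles are spread evenly, with one at each end of the eating window.
  const hoursBetween = (lastHour - firstHour) / (bottles - 1);
  return { bottles, hoursBetween };
}

console.log(mealPlan(1600)); // { bottles: 4, hoursBetween: 4 }
console.log(mealPlan(2000)); // { bottles: 5, hoursBetween: 3 }
```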

Soylent is Easier than I Thought

The entire effort was much easier than I thought. I could hardly call it a battle, actually, as I initially thought it would be. The reason for this I attribute to the simplicity of my rule set…

- I will eat only Soylent and drink only water

- As a single exception to rule 1, if I get too hungry at any point, I’ll allow myself to eat broccoli. You can hardly go wrong with steamed, plain broccoli. It’s very healthy and doesn’t exactly pose a temptation.

- If I have culinary social opportunities (team dinners, family outings, etc.) I’ll go and I’ll engage, but I just won’t eat. Believe it or not, you can still enjoy a conversation across the table from someone without eating. It grinds against habit perhaps, but it works. Admittedly, it does take a little bit away from a romantic dinner, but actually, that’s a bit strange isn’t it? For some reason, it’s not as fun eating with someone when you’re doing all the eating. My wife was kind and accommodating though.

In previous attempts to eat well, I had to spend extra time considering each morsel that passed in front of me.

“Should I eat that cracker?”

“Should I eat a cookie?”

“Should I eat another cookie?”

“Just one more pretzel?”

“My own carton of fries or share with my wife?”

But this time it was different. The answer was always the same - “no”.

No soda. No coffee. No cereal. No cookie. No nothing.

No chicken, no carrots, no milk. No to seconds. No to firsts! No nothing. The answer is always no.

That greatly simplifies things and leaves my mind free to really concentrate on the project at hand or on what my 4 year old is trying to say to me.

Eating Includes a Lot of Ceremony

There’s a whole lot of ceremony around food that I wasn’t aware of until I got on the outside of it all.

Several times per day we think about food - we consider our hunger, we decide to eat, we decide what, we decide when, we decide where, we transport, we stand in line or wait on a server, we acquire, we consume, we ask to be excused while we chew and try to talk to others, and finally we clean up the mess on our table and on our hands. And after hardly any time at all, we start thinking about our next meal!

I also neglected to notice just how often we come up with the idea to find coffee. Is there a machine nearby? Is there a Starbucks nearby? And then again with the relatively long process of acquisition and consumption.

Food acquisition is still a cinch compared to ancient times when our food was running around with teeth. But the reality is that times are different and there may just be room for some efficiency improvements.

Recreational Eating Rocks

I wonder if you’re thinking, “Yeah, but I like eating, and I don’t have any desire to give it up.”

I like eating too. September was a bit exceptional, but as a normal course I think it’s a good idea to use Soylent for all of the boring meals - the ones where you just need to put food in the belly - and then truly enjoy all the other meals - the recreational meals. It’s interesting too that my enjoyment of them is inversely proportional to their frequency. A weekly turkey sandwich tastes way better than a daily one.

Soylent is Convenient

There are a number of scenarios that I ran across during my experiment that accentuated the convenience of my newfound food.

- Sometimes you don’t have time for breakfast. A Soylent is about as convenient as a bar.

- Same thing when you’re heads-down at work and acquiring lunch feels like a real chore.

- Reducing your diet to a very narrow menu of Soylent and water allows you to experiment with possible food allergies.

- Lunch meetings at work afford ample opportunity to show off bad habits or spill food on your lap. Starting your own lunch at 11:00 and finishing at 11:01 frees you up to focus on colleagues, partners, or the topics at hand.

- When you’re running around town running errands or having fun, stopping at a restaurant doesn’t have to be another thing on your list. Unless, that is, the rest of your family is on something other than a Soylent diet :)

Overall, it’s just nice to be in control of your own daily diet.

The Cost of Engineered Eating

Shelling out the money for a month’s worth of Soylents ($323 for 1600 kcal/day) feels like a lot. Consider, however, that this includes the convenience of the premixed option (a month of powder @1500 kcal/day is ~$180/mo), and may or may not be kinder on your budget than whatever else you were going to eat that month.

Some Other Things I Learned in Soylentember

Although weight loss was not my primary goal in this endeavor, I have lost 5 pounds over 4 weeks. That’s a healthy weight loss rate, and I always have the option to maintain my 1600 calorie diet or bump it up to 2000. Soylent is not a weight loss product - it’s just food, but it makes calorie counting much easier.

Air travel is not easy if you’re doing the liquid version of Soylent, because you can’t take liquid through security. I’m forced to check a bag and make sure that there’s not too much time between when I go through security and when I visit baggage claim on the other end, which is often more than 4 hours. If you like to get to the airport 2 hours early like me, then you are constrained to flights that are roughly 2 hours or less. This is a good reason to get some of the powder version (1.6), which is exactly what I intend to do.

The bottles are recyclable. I recommend giving them a quick rinse before finding your nearest recycling bin.

When I get my hands on some powder I intend to mix up a day at a time and then use some of my 2.0 bottles for mixing.

I chilled my Soylent bottles in the fridge when I started, because I read that it’s better cold. It is good when it’s cold, but I later discovered that I like it at least as much when it’s room temperature. I think it comes back to the texture. For some reason, when it’s cold it feels smoother in the mouth, but I like my Soylent to feel like food in my mouth. It’s more convenient leaving them at room temperature too, because you can just throw the day’s worth of food in your backpack and take off.

Soylent also offers bars that are 250 kcal and I’m told are quite delicious. I like having the option of finer control of caloric intake by adding in this smaller increment.

Going Forward

I’m not 100% certain about how I want to proceed now. Like I mentioned, I expected this to be a chore, and expected to be rather excited about getting back to normal eating when September was over. On the contrary, I quite enjoy my new freedom from food ceremony, and I’m sure I’ll continue supplementing some portion of my day’s kcal intake at least. I like how easy it is to come up with a daily plan of something like…

- 2 Soylent 2.0 drinks (800 kcal) + 1 bar (250 kcal) + 1 light dinner

- 3 Soylent 2.0 drinks (1200 kcal) + 1 big dinner

I’m pretty sure I’ll either do one of those or perhaps just keep on with a fully Soylent diet! We’ll have to see.

VS Code Goes Open

Visual Studio Code is now open source.

Me: What do you think of Visual Studio Code?

Some Dude: It’s awesome. I just wish it were open source.

Me: You need to fork it? Tweak it?

Some Dude: No.

Me: Okay.

I get it. I like open source stuff too.

Realistically, there are few products I have time to fork and fewer still that I have need to fork.

But even when I have no need to fork a project and no intention to submit a pull request any time soon, still I want it to be open source. Why? Because… freedom.

I like closed source products too, actually. Closed source products can be sold. Selling products earns a company money. Companies with money can create big research and development departments that can tinker with stuff and make new, cool stuff. And ultimately, I like new cool stuff.

The best scenario for me, a consumer, though, is when a big company with a big research and development department can afford to make something cool and free and open, because they make money on other products.

Some products (think Adobe Photoshop) are obviously a massive mess of proprietary code that feels right belonging to its parent company. They need the first-party control.

Others, like Code, feel more like they belong to the community. That’s how I feel anyway.

**And now I can. Visual Studio Code is officially OSS!**

In case you missed it, Microsoft announced at Connect() 2015 that Code was graduating from preview to beta status and that it would be open sourced.

To see Code’s code comfortably settled into its new home, just head over to github.com/microsoft/vscode. From there, you can clone it, fork it, submit an issue, submit a PR… or look at what the team is working on and who else is involved. You know… you can do all of the GitHub stuff with it.

So there it is. It’s not only free as in “free beer” now, but also as in “free speech”.

The actual announcement is buried in the keynote, so the best way to get the skinny on this announcement, the details, and the implications is to watch the Visual Studio Code session hosted on Connect() Day 2 by @chrisrisner. The panel shows off Code in serious depth. It’s a must-see session if you’re into this stuff.

One of the more exciting things they showed off is actually the second gigantic announcement regarding Code… the addition of extensions to the product, but that’s a big topic for another day and another blog post.

What exactly does the open sourcing of Code mean for you? As I mentioned, you may or may not be interested in ever even viewing the source code for Code. The real gold in this announcement is the fact that Code now belongs to the community. It’s ours. It’s something that we’re all working on together. That’s no trivial matter. Microsoft may have kicked it off and may be a huge contributor to it here forward, but so are you and I.

So whether you’re going to modify the code base, study the code base, or just take advantage of the warm feeling that open source software gives us, you know now that the best light-weight code editor for Windows, Linux, and Mac, is ready for you.

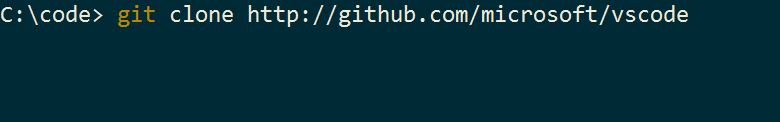

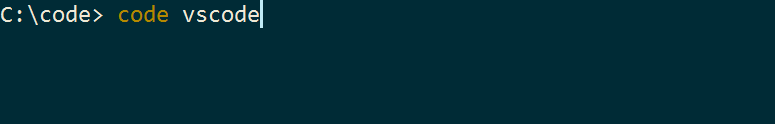

Let’s have a quick look at the code for Code using Code. The official repo is at http://github.com/Microsoft/vscode. So start by cloning that into your local projects folder. My local projects folder is c:\code, so I do this…

Then, you launch that project in Code using…

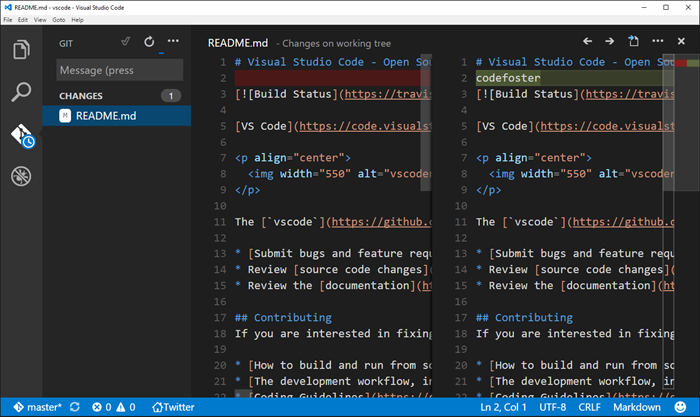

You’ve got it now. So I just added “codefoster” to a readme.md file to simulate a change and then hit CTRL + SHIFT + G to switch to the Git source control section of VS Code, and here’s what I see…

Notice that the changed file is listed on the left and when highlighted the lines that were changed are compared in split panes on the right. Checking this change in would simply involve typing the commit message (above the file list) and then hitting the checkmark.

This interface abstracts away some of the git concepts that tend to intimidate newcomers - things like pushing, pulling, and fetching - with a simpler concept of synchronizing which is accomplished via the circle arrow icon.

It’s important to note that I wouldn’t be able to check this change in here because I don’t have direct access to the VS Code repo. Neither do you most likely. The git workflow for submitting changes to a repo that you don’t have direct access to is called a pull request. I’ll leave the expansion of this topic to other articles online, but in short it’s done by forking the repo, cloning your fork, changing your files, committing and pushing to your fork, and then using github.com to submit a pull request. This is you saying to the original repo owner, “Hey, I made some changes that I think benefit this project. They are in my online repository which I forked from yours. I hereby request you _pull_ these changes into the main repository.”

It’s quite an easy process for the repo owner and I don’t think a repo owner on earth is opposed to people doing work for them by submitting PRs. :)

Again, getting involved simply means interacting and collaborating on GitHub. Here’s how…

- Check out the list of issues (there are already over 200 of them as I type this) on the microsoft/vscode repo.

- Chime in on the issues by submitting comments.

- Create your own issue. See how.

- Clone the code base using your favorite git tooling or using git clone https://github.com/microsoft/vscode.git on your command line. That will allow you to git pull anytime you need to get the latest. Having the code means you can browse it whenever you’re wondering how something works. See how.

- Fork the code using GitHub if you want to create a copy of the code base in your own GitHub repo. Then you can modify that code base and submit it via a pull request whenever you’re certain you’ve added some value to the project. See how.

And you can chatter about Code as well on Twitter using @Code. As to how they got such an awesome handle on Twitter I have no idea.

Also check out my mini-series I’m calling Tidbits of Code and Node on the Raw Tech blog on Channel 9 where I’ve been talking a lot about Code (and Node) and plan to do even more now that the dial for its awesome factor was turned up a couple of notches.

Happy coding in Code!

Moving Desktop and Downloads to OneDrive

The fierce competition between online storage providers has led to where we are today. Today a consumer can store their entire life in the cloud. Okay, “their entire life” might be an exaggeration, but you know what I mean.

Do you have multiple terabytes of family photos, videos, and email archives? Yeah, me too. How would you feel if you lost them? Pretty rotten. Me too.

Maybe you already know the concepts behind basic system backups, but I’ll share some things here for context.

You can protect against a hard drive crash by backing up your valuables to a separate drive. That will dramatically reduce your chance of failure from a faulty drive, a lost laptop, an accidental format, or someone hacking into your system and deleting everything. It won’t, however, protect you from a fire, natural disaster, or perhaps a burglary. In that case, you need an offsite backup.

An offsite backup can be a little hard drive that you drop everything on and hand to a friend. And not that sketchy friend… someone you trust.

Unless your friend lives in another state, however, even this measure isn’t going to protect you from what I like to call the meteorite scenario. The meteorite in my scenario could be replaced by an earthquake, tornado, flood, or whatever else mom nature likes to bring your way wherever you live. Not that nature likes to bring meteorites my way here in Seattle. In fact, I’ve never seen a meteorite enter the atmosphere over the Emerald City, but I digress. To protect against the meteorite scenario, you need cloud backup.

Cloud backup is a bit more than just a fancy offsite backup. When you get your files to the cloud, you’re counting on a professional and commoditized backup strategy. You’re counting on the fact that your cloud provider assumes an extremely high potential value on the documents in question and creates multiple backup copies themselves to assure they don’t end up with a huge number of extremely dissatisfied customers.

It’s not without risk. It’s entirely possible that any of the major cloud providers could completely goof up and lose your files. It’s a remote possibility, but the risk is there.

So in my opinion, the ultimate setup is as follows.

You keep all of your documents in your cloud storage provider of choice. I like OneDrive.

You sync some of your documents on each of your devices, but you sync all of your documents on one device with a hard drive that can handle it. You be sure that one master device - perhaps a desktop PC at home in your closet - is always on and thus syncing changes down from the cloud as you modify things from your various devices.

With that configuration, you have all of your documents locally and in the cloud. It’s all live too and doesn’t require an intermittent backup strategy.

Now more to the point.

If you’re running Windows, you have the concept of the system folders. These are the folders like Documents, Pictures, Videos, Desktop, and Downloads. They’re special folders in that they are not just a file container, but they’re also a concept. You want to store files in your Documents folder, but you may want to configure what that folder is called or where it is, and you want applications to not have to worry about where it is.

It’s similar to what happened to the paradigm of printers. A long time ago in this very galaxy, applications used to be responsible for printing. If you were an application developer, you had to create printer drivers. Now you just sort of toss your document over the wall to the operating system and say “I want to print to that one” and the common printer drivers are invoked.

It’s like that with the Documents system folder. An application might default to saving in your Documents folder regardless of where you the user have deemed that folder to live.

Perhaps you know already how to configure your Documents folder location. If not, here’s how…

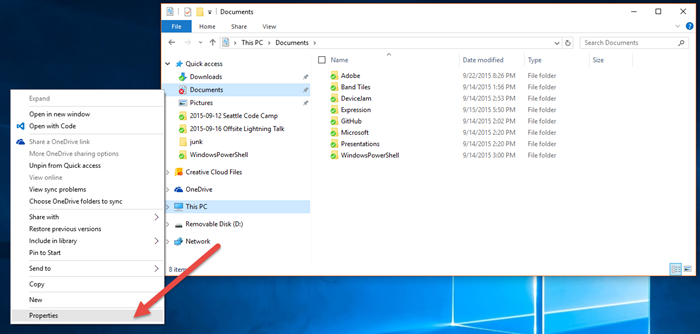

So you just right click on the Documents folder in Windows Explorer (here I’m finding it in my Quick Access list on the left) and choose Properties.

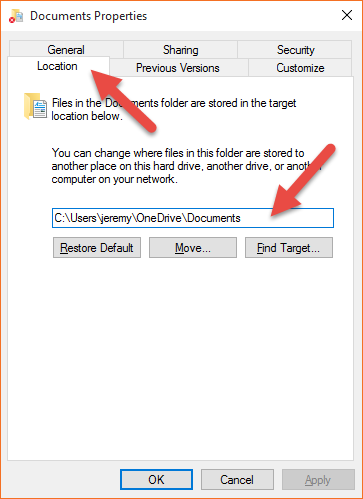

Then you go to the Location tab and change the path. To move your documents to the cloud you simply configure a path that is somewhere in your OneDrive folder (by default in c:\users\\{user}\OneDrive).

The result of this change is that now when most any application on your computer saves a document - say a letter to grandma, your tax returns, or whatever - it puts it in the Documents folder (the concept) which you have configured to be in your OneDrive. So that tax return is going to be synced to the cloud and all of your other devices.

Now even more to the point.

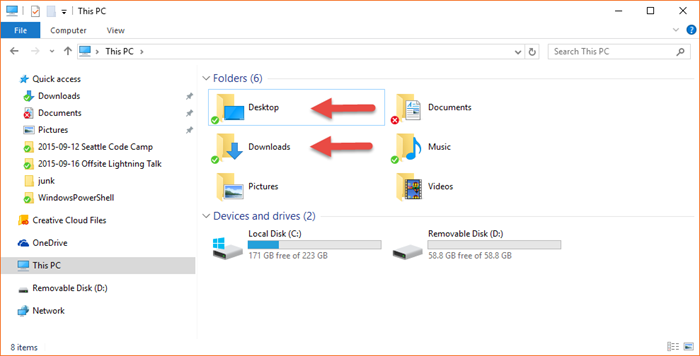

Many people don’t know that you can do the same thing with all of your system folders, including your Desktop and your Downloads folders.

It goes like this.

You may not yet have a folder in your OneDrive for Desktop and Downloads, so create them. Don’t put them inside your Documents folder. Just put them right on the root of your OneDrive file store. I did this on onedrive.com in my browser. Whenever I want to explicitly do something to my OneDrive files on the server I do it in the browser and essentially bypass the sync tool built into Windows.

Now, just like you did for your Documents folder, you go to Properties and then the Location tab and you change the path to your newly created folders in OneDrive. Mine are…

- c:\Users\jeremy\OneDrive\Desktop

- c:\Users\jeremy\OneDrive\Downloads

And that will do it.

Now when you download a file from the internet using Microsoft Edge and it defaults to the Downloads folder, and then you have to reload your machine, you don’t have to think about what’s in your Downloads folder and whether you want to back it up. It’s already done. And if you’re the type to save the really important tasks that you want to be front-of-mind to your desktop, it’s the same thing. By the way, you know that guy at work with a thousand icons on his desktop? Don’t be that guy. :)

The whole goal here is to cloud enable our devices - making them as stateless as possible. You know you’re cloud enabled when a complete OS reload takes 30 minutes and you’re done.

There you go. Happy downloading.

Fast Refresh... Nugget!

Hey, Metro developers, if you’re using HTML/JS to build your apps, I have a little golden nugget for you. Actually, it’s possible you already know and I’m the one that’s late to the game. Anyway, have you ever made a little tiny tweak to your HTML structure, a slight mod to your CSS style, or a little enhancement to your JavaScript and wanted to see the change take effect immediately instead of relaunching your app each time? It’s a valid desire. My last job was website development and so I’m used to the whole save, switch, refresh workflow.

If you’re working without the debugger attached, then you know it’s not that difficult. You just hit CTRL + F5 again.

If you are working with the debugger attached, however, you might think you have to stop (SHIFT + F5) and then start again (F5). Actually, though, all you have to do is hit F4 (Fast Refresh is what we call that)! Now that’s handy!

Metro on an Alternate Monitor?!

You know those sonic noise guns that you can shoot at people and they supposedly just stop in their tracks because it’s physically disorienting? I felt like I got hit with one when I ran across this little nugget.

Want to move your Metro experience from your primary monitor to another screen - say your second (or fourth) monitor or even to the projector for a show? I’ve been told that the start menu appears on the primary monitor (without resorting to the simulator). Period. But that’s not the case! _look up_ Thank God.

If you press WIN + PGUP/PGDN, you can change which monitor the start menu and all Metro apps appear on. Now that’s awesome! Until now, I was resorting to the simulator for getting my icons on my second monitor or projector to show people. News must spread. Tell everyone.