Posts tagged with "javascript"

Beautiful Cascading Node Config

FYI, this is a repost. I lost this post’s source markdown and just recreated it for posterity.

I just learned something, which instantly makes it a good day.

I’ve been on the lookout for a really good pattern in Node.js projects to allow a user to…

Define configuration variables in a JSON config file (not flat environment variables!)

Allow them to override the configuration variables with command line arguments

Make the command line arguments work like any good, modern CLI with double dash full names (i.e.

--foo bar) or single dash aliases (i.e.-f bar)Let the dev know if their code isn’t working because a certain configuration variable hasn’t been set

Here’s what I have now.

let config = require('./arguments.json'); |

So, the arguments.json file that we bring in is a place we can define arguments permanently so they don’t have to be included on the command line.

Then we pull in a dependency on the command-line-args package. I was delighted to discover that this package works exactly like I expected it too. For each argument, we can give it a long name (i.e. argumentA) and a short name (i.e. a). Finally, I added the required property myself, which I’ll show you in a second.

Next, I coerce these two sources of configuration values using a spread operator. I talked a lot more about spread operators in my Level Up Your JavaScript Game! - Other ES6 Language Features post. The order of these two spread objects is such that it will take the values in my file first, but then override them with command line arguments if they exist.

Finally, I added another little trick that wasn’t built in to the command-line-args package (although I think it should be). I added the ability to make certain arguments required, and if not to throw an error so the user knows exactly why things don’t work.

That’s all!

Level Up Your JavaScript Game! - Other ES6 Language Features

See Level Up Your JavaScript Game! for related content.

Sometimes it takes a while to learn new language features, because many are semantic improvements that aren’t absolutely necessary to get work done. Learning new features right away though is a great way to get ahead. Putting off learning new features leaves you lagging the crowd and constantly feeling like you’re catching up. I’ve noticed that junior developers often know more modern language features than senior developers.

There are quite a few language features that were introduced in ES5 and ES6, and you’d be well off to learn them all! Certainly, though, look into at least the ones I’m going to talk about here. I recommend you learn…

…to effectively use the object and array spread operators.

From MDN: “Spread syntax allows an iterable such as an array expression or string to be expanded in places where zero or more arguments (for function calls) or elements (for array literals) are expected, or an object expression to be expanded in places where zero or more key-value pairs (for object literals) are expected.”

The spread operator is an ellipsis (

...), but don’t confuse it with the pre-existing rest operator (also an ellipsis). The rest operator is used in the argument list of a function definition. The spread operator on the other hand is used… well, I’ll show you.

Think of the spread operator’s function as breaking the elements of an array (or the properties of an object) out into a comma delimited list. So [1,2,3] becomes 1,2,3. The array spread operator is most helpful for either passing elements to a function call as arguments or constructing a new array. The object spread operator is most helpful for constructing or merging objects properties.

If you have an array of values, you can pass them to a function call as separate arguments like this…

myFunction(...[1,2,3]); |

If you have two objects - A and B - and you want C to be a superset of the properties on A and B you do this…

let C = {...A, ...B}; |

Therefore…

{...{"name":"Sally"},...{"age":10}} |

…to get into the habit of using destructuring where appropriate.

Destructuring looks like magic when you first see it. It’s not just a gimmick, though. It’s quite useful.

Destructuring allows you to assign variables (on the left hand side of the assignment operator (=)) using an object or array pattern. The assignment will use the pattern you provide to extract values out of an object or array and put them where you want them.

let {name,age} = {name:"Sally",age:10}; |

That’s a lot better than the alternative…

let person = {name:"Sally",age:10}; |

It works with nested properties too…

let {name,address.zip:zip} = {name:"Sally",age:10,address:{city:"Seattle",zip:12345}}; |

It works with arrays too…

let [first,,third] = ["apple","orange","banana","kiwi"] |

Destructuring is handy when you’ve fetched an object or array and need to use a subset of it’s properties or elements. If your webservice call returns a huge object, destructuring will help you pull out just the parts you actually care about.

Destructuring is also handy when creating mixins - objects that you wish to sprinkle functionality into by adding certain properties or functions.

Destructuring is also handy when you’re manipulating array elements.

…to use template literals in most of your string compositions.

I recommend you get in the habit of defining string literals with the backtick (`) operator. These strings are called template literals and they do some great things for us.

First, they allow us to line wrap our string literal without using any extra operators. So as opposed to the existing method…

let pet = "{" + |

…we can use…

let pet = `{ |

Elegant!

…to understand the nuances of lambda (=>) functions (aka fat-arrow functions).

And it looks like I’ve saved one of the best for last, because lambdas have so dramatically increased code concision. Not to overstate it, but lambda functions delight me.

I was introduced to lambda functions in C#. I distinctly remember one day in particular asking a fellow developer to explain what they are and when you would use one. I distinctly remember not getting it. Man, I’ve written a lot of lambda functions since then!

The main offering of the lambda is, in my opinion, the concision. Concise code is readible code, grokkable code, maintainable code.

They don’t replace standard functions or class methods, but they mostly replace anonymous functions in case you’re familiar with those. I very rarely use anonymous functions anymore. They’re great for those functions you end up passing around in JavaScript, because… well, JavaScript. You use them in scenarios like passing a callback to an asynchronous function.

Allow me to demonstrate how much more concise a lambda function is.

Here’s a call to that readFile function we were using in an earlier post. This code uses a pattern where functions are explicitly defined before being passed as callbacks. This is the most verbose pattern.

fs.readFile('myfile.txt', readFileCallback); |

Now let’s convert that function an anonymous function to save some lines of code. This is recommended unless of course you’re paid by the line of code.

fs.readFile('myfile.txt', function(contents) { |

Notice that the function name went away. I for one strongly dislike the first pattern. When a callback function is only used once, I feel like it belongs inline with the function call. If of course, you’re reusing a function for a callback then that’s a different story.

Now let’s go big! Or small, rather. Let’s turn our anonymous function into a lambda.

fs.readFile('myfile.txt', txt => { |

I love it! Notice, we were able to do away with the function keyword altogether and we specified it’s argument list (in this case only a single argument) on its own. Notice too that I called that argument txt. I could have, of course, kept the name contents, but I tend to use short (often only a single letter) arguments in lambda functions to amplify the brevity. Lambda functions are very rarely complex, so this works out well.

The loss of the function name and keyword saved some characters, but lambda functions get even shorter. If a lambda contains only a single expression, the curly braces can be dropped. The expression in this case becomes the return value of the lambda.

To illustrate, let me use a new example - this one from my post on arrays in this series…

let numbers = [1,2,3,4,5,6]; |

In this example, n => n <= 3 is a complete lambda function. I know, concise right?! This example illustrates the value of the single letter arguments and also introduces you to the expression syntax. The body of the lambda is n <= 3. That’s an expression. It’s not a statement such as…

let n = 3; |

And it’s not a block of statements such as…

{ |

…and like I said, when the body of your lambda is a simple express, you can drop the curly braces and the expression becomes your return value.

So in the example, the .filter() function wants a function which evaluates to true or false. Our expression n <= 3 does just that, and returns the result.

There are two caveats that I’ll draw out.

First, if you have 1 argument in your lambda function, you do not need parenthesis around the argument list. In our previous example, n => n <= 3 is a good example of that. If you have 0 arguments or more than 1 argument, however, you do. These are all valid…

() => console.log('go!') //0 arguments |

If you use TypeScript, you may notice that the presence of a type on a single argument lambda function requires you to wrap it with parenthesis as well, such as

(x:number) => x * x.

The second caveat is when your lambda returns an expression, but that expression is an object literal wrapped in curly braces ({}). In this case, the compiler confuses your intention to return an object with an intention to create a statement block.

This, then, is not valid…

let generatePerson = (first,last) => {name:`${first} ${last}`} |

To direct the compiler just do what you always did in complex mathematical statements in high school - add some more parenthesis! We could correct this as so…

let generatePerson = (first,last) => ({name:`${first} ${last}`}) |

And there’s one more thing about lambdas that you should know. Lambdas have a feature to remediate a common problem in JavaScript anonymous functions - the dreaded this assignment.

Anonymous functions (and named functions) in JavaScript are Objects, and as such they have a this operator that references them. Lambda functions do not. If you use this in a lambda function, chances are the sun will keep shining and the object you intended to reference will be referenced. No more _this = this or that = this or whatever else you used to use everywhere.

That’ll do it for arrays, and in fact that’ll do it for this series. If you jumped here from a search, headback to Level Up Your JavaScript Game! to see the rest of the content.

Thanks for reading and happy hacking!

Level Up Your JavaScript Game! - ES6 Modules

This post is not yet finished

See Level Up Your JavaScript Game! for related content.

Unfortunately, the whole concept of modules in JavaScript has undergone a ton of evolution and competing standards, and for a while it seems like no two JavaScript environments used modules the same way. Spending a little time figuring out exactly what’s happening goes a long way toward demystifying things.

To level up in JavaScript modules, I recommend you learn…

…to transition from Node.js’s CommonJS modules to ES6 modules.

Node has not yet fully adopted ES6 modules, but it’s coming soon. We developers can today though using a transpiler, and I recommend it. We may as well get into tomorrow’s habits today. Instead of…

const myLib = require('myLib'); |

…use…

import { myLib } from 'myLib'; |

The former strategy - CommonJS - is a well-established habit for most of us, but it’s not inherantly as capable as the latter - ES6 modules. I’m going to assume you’ve used the CommonJS pattern plenty and skip explaining its nuances, and talk only about the newer, better, faster, stronger ES6 modules.

To play with some the concepts on this page, install TypeScript.

npm i -g typescript |

…to define an ES6 module and export all or part of it.

CommonJS modules are defined largely by putting some JavaScript in a separate file and then requiring it. ES6 modules are too. The differences come in how a module describes what it exports - that is what it makes available to anyone who decides to depend on it.

In ES6 modules, you put export on anything you want to export. Period. That’s easy :)

//mymodule.ts |

In the above example, only y would be available to whoever takes a dependency on mymodule.

You can put export on variable declarations (like the let above), classes, functions, interfaces (in TypeScript), and more. Read on to see how these various exports get imported.

…to import an entire module.

To import everything a given module has to offer - all of the exports…

import * as mymodule from './mymodule'; |

The * indicates that we want everything and the as mymodule aliases (or namespaces) everything as mymodule. After this import, we would be free to use mymodule.y in our calling code.

…to import parts of a module.

Let’s say our module looked like this…

//mymodule.ts |

If we decide in our calling code that we need x and we need the sum function, then we can use…

import { x, sum } from './mymodule' |

Notice that we don’t need to prefix the x and sum functions. They’re in our namespace.

…to alias modules on import.

Sometimes, you want to change the name of something you import - for instance, to avoid a naming conflict…

import { x, sum as add } from './mymodule' |

That’ll do it for ES6 module imports. Now head back to Level Up Your JavaScript Game! or move on to my final topic on ES6 features.

Level Up Your JavaScript Game! - Regular Expressions

See Level Up Your JavaScript Game! for related content.

I’m sorry, but there’s no way around it. You have to master regular expressions.

Regular expressions (regex for short) have a reputation of being very difficult, but if you happen to be an entry-level developer, I really don’t want you to be intimidated by them. They’re actually not so difficult. They only look difficult once you’ve created one. In a sense, they’re an easy way to at least look like a ninja.

JavaScript’s implementation of regular expressions was tough for me at first because there are a few different ways to go about it. Spend some time writing and calling a couple of patterns though, and you’ll quickly master it.

To level up in JavaScript regular expressions, I recommend you learn…

…to write your regular expression.

That’s right, first you have to learn how to write a good regex pattern. I’m not going to go into detail, but if you want some help you’re a quick web search away. I highly recommend regexr.com. It’s good not only for learning the patterns, but testing them too.

In learning patterns, you should learn about capture groups too. Defining capture groups is simple - you just put parenthesis around certain parts of your pattern. Those parts of the pattern will then be available in your matchs as independent values.

Let’s say you wanted to pull the area code out of a phone number pattern. You could use a pattern like (\d{3})-\d{3}-\d{4}. That’s obviously a very simplistic pattern that would only match US-style, 10-digit phone numbers with dashes between the groups, but notice the parenthesis around the first group. That means that that part - the area code - is going to be made available as a value for you after you execute the regex.

…to quickly tell if a pattern is detected in some text.

If you don’t need the actual matchs of the regex execution, but just want to see if there’s a match, you use <pattern>.test(<text>). For example…

/\d{3}-\d{3}-\d{4}/.test('555-123-4567') //true |

In JavaScript, you put regular expressions between slashes (

/) just like you put strings between quotes.

…would return true.

…to use .exec() for single pattern matches with capture groups.

If you need not only to know that the pattern matched, but also to get values from the match such as the match itself and all of the capture group values, then you use .exec()…

let match = /(\d{3})-\d{3}-\d{4}/.exec('555-123-4567'); |

…and because I added parenthesis around the first number group there, that value should be returned as part of the match. The match itself is always the first match ([0]), and each subsequent capture group in the order you defined them from left to right follow ([1], [2], …, [n]).

…to use .match() to find multiple matches in a string.

The .match() function is on String.prototype, so it’s available on any string. Besides flipping the calling pattern from .exec() (.exec() uses <pattern>.exec(<text>) while .match() uses <text>.match(<pattern>)), this function has a couple of other peculiarities.

First, it does not capture from your capture groups, so if that’s what you’re looking to do, then use .exec().

Second, it is capable of capturing multiple matches returned as an array. So if you do something like…

"14 - 8 = 6".match(/\d+/g) //[14,8,6] |

The g stands for global and is a regex option that tells it to look in the entire string. Look at all of the other options that are valid there too. They can be helpful.

If you need to capture multiple matches (like you get with .match()), but you also want the capture groups (like you get with .exec()), then you need to call .exec() in a loop like this…

let text = "The quick brown fox jumps over the lazy dog."; |

Note that I included an i and a g option on the regex (/the/). The i makes the search case insensitive and the g directs it to find every match in the text. Notice that match[0] equals the full match each iteration and match[1] is the contents of the capture group I defined (the first letter of the word “the” for whatever reason).

That’ll do it for regular expressions. Now head back to Level Up Your JavaScript Game! or move on to the next topic on ES6 module imports.

Level Up Your JavaScript Game! - Arrays

See Level Up Your JavaScript Game! for related content.

Working with JavaScript arrays is practically an everyday task.

Arrays are simply collections of things, and we often find need to perform some function to each of their items or perhaps to subsets of their items.

Years ago, ES5 introduced a bunch of new array functions that you should be or become familiar with. The three I’ll highlight are filter, map, and reduce.

To level up in JavaScript arrays, I recommend you learn…

…to use the .filter() function to reduce an array down to a subset.

This is not a difficult topic, but it’s an important one. If you have a set of numbers [1,2,3,4,5,6] and you’d like to limit it to numbers less than or equal to 3, you would do…

let numbers = [1,2,3,4,5,6]; |

Take note of what the fact that .filter() hangs off of an array. It is in fact a function on Array.prototype and is thus available from every array. So [].filter is valid.

.filter() asks for a function with a single argument that represents a single item in the array. The .filter() function is going to execute the function you give it on each and every item in the array. If your function returns true, then it’s going to include that item in the resulting set. Otherwise it won’t. In the end, you’ll have a subset of the array you called .filter() on.

This brings up something I see a lot in folks that have been programming a while.

Imagine this common pattern…

let people = [ |

What’s wrong with that code? Well, it works, so there’s nothing functionally wrong with it. It’s too verbose though. If we use some array functions, we could drastically increase the readibility and maintainability. Let’s try…

people.forEach(p => { |

Here, we replaced the for loop with a forEach array function that we hang right on our array. This allows us to refer, inside our loop, to simply p instead of people[i]. I love this. I find for loops difficult and unnatural to write.

Some argue against using single-letter variables like

pand would prefer to call that something likeperson. Do what makes you happy and works well with your team, but I like single-letter variables inside of fat-arrow functions where concision is king.

Lets do another round…

people |

Here, we pulled the if statement out of our loop and added it as a .filter() function before our .forEach() function in a chain of array functions. This effectively separates the logic we use for filtering with the logic we which to take effect on our subset of people - a very good idea.

I might even take the separation of .filter() a step further and do…

people |

To me, that’s more clear.

…to use the .map() function to transform elements in an array.

Think of arrays, for a second, like you do database tables. An array entry is analogous to a database table’s row, and an array property is analogous to a database table’s column.

In this analogy, the .filter() function reduces the rows, and the .map() function which I’d like to talk about now reduces (potentially) the columns - more generally, it transforms the element.

That transformation is entirely up to you and it can be severe. You might do something simple like pull a person’s name property out because it’s the only one you’re concerned with. You might just as well do something more complex like transform each person to a web service call and the resulting promise. Let’s try that with our previous code…

let orderPromises = people |

Notice that now, each of the females under 40 is fetched from a webservice. The fetch() function returns a promise, so each array item is transformed from a person object to a promise. After the run, orderPromises is an array of promises. By the way, you could then execute code after all orders have been retrieved, using…

let ordersByPerson = await Promise.all(orderPromises); |

…to use reduce to turn an array into some scalar value.

If you really want to be a JavaScript ninja, don’t miss the .reduce() array function and it’s zillion practical uses!

As opposed to .map() which acts on each element in an array and results in a new array, .reduce() acts on each element in an array and results in a scalar object by accumulating a result with each step.

For example, if you have an array of orders and you want to calculate sales tax on each order based on total and location, you would use .map() to turn arrayOfOrders into arrayOfOrdersWithSalesTax (start with an array and end with an array).

let arrayOfOrdersWithSalesTax = arrayOfOrders |

The

.map()function in the preceding example uses an object spread operator (…) to tack another property onto each order item. You can read more about the spread operator in my Level Up Your JavaScript Game! - ES6 Features post.

If, however, you wanted to calculate the total sales tax for all orders, you would use .reduce() to turn arrayOfOrders into totalSalesTax (start with an array and end with a scalar).

let totalSalesTax = arrayOfOrdersWithSalesTax |

It’s not immediately apparent how that reduce function works, so let me walk you through it.

The .reduce() function asks for a function with 2 arguments - an accumulator which I’m calling a and a current which I’m calling o because I know that my current item on each loop is actually an order. This makes it clear to me in my function that o means order. Finally, the reduce function itself takes a second argument - the initial state. In my sample, I’m using 0. Before we’ve added up any sales tax, our total sales tax should be 0, right?

The function you pass in to .reduce() then executes for each item in the array and by our definition, it calculates the sales tax and adds (or accumulates) the result to the a object. When the .reduce() function has completed its course, it returns the value of a, and my code saves that in a new local variable calle3d totalSalesTax.

Pretty cool, eh?

Let me be clear that I said that .reduce() turns an array into a scalar, but that scalar can most anything you want including a new array.

That’ll do it for arrays. Now head back to Level Up Your JavaScript Game! or move on to the next topic on regular expressions.

Level Up Your JavaScript Game! - Asynchrony

See Level Up Your JavaScript Game! for related content.

Most any JavaScript application you touch now uses asynchrony, so it’s a critical concept although it’s not a simple one.

I usually start any discussion on asynchrony by clarifying the difference between asynchrony and concurrency. Concurrency is branching tasks out to separate threads. That’s not what we’re talking about here. We’re talking here about asynchrony which is using a single thread more efficiently by basically using the gaps where we were otherwise frozen waiting for a long process.

One of the tough things about asynchrony in JavaScript is all the options that have emerged over time. Options are a double-edged sword. It’s both good and bad to have 20 different ways to accomplish a task.

To level up in JavaScript asynchrony, I recommend you learn…

…to call a function that returns a promise.

This is the most basic thing to understand about promises. How to call a function that returns one and determine what happens when the promise resolves.

To review, calling a regular (synchronous) function goes…

let x = f(); |

And the problem is that if f takes a while, then the thread is blocked and you don’t get to be more efficient and do work in the meantime.

The solution is returning from f with a “place holder” - called a Promise - immediately and then “resolving” it when the work is done (or “rejecting” it if there’s an exception). Here’s what that looks like…

let x = f().then(() => { |

One more thing. When a promise is resolved, it can contain a payload, and in your .then() function you can simply define an argument list in your handler function to get that payload…

let x = f().then(payload => { |

Luckily, a lot of functions already return promises. If you want to read a file using the fs module in Node, for instance, you call fs.readFile() and what you get back is a promise. Again, it’s the simplest case for asynchrony, and here’s what that would look like…

const fs = require('fs'); |

…to write a function that passes on a promise.

If the simplest case for asynchrony is calling functions that return promises, then the next step is defining your own function which passes a promise on. Recall the example I used where we wanted to use fs.readFile. Well, what if we wanted to refactor our code and put that function call into our own function.

It’s important to realize that it’s rarely sensible to create a sychronous function which itself calls an asychronous function. If your function needs to do something internally that is asynchronous, then you very likely want to make your function itself asynchronous. How? By passing on a promise.

Let’s write that function for reading a file…

getFileText('myfile.txt').then(file => { |

Easy, eh? If fs.readFile returns a promise, then we can return that promise to our caller. By definition, if our function returns a promise, then it’s an asynchronous function.

…to write a function that creates and returns a promise.

But what if you want to create an asynchronous function that itself doesn’t necessarily call a function that returns a promise? That’s where we need to create a new promise from scratch.

As an example, let’s look at how we would use setTimeout to wait for 5 seconds and then return a promise. The setTimeout function in JavaScript (both in the browser and in Node) is indeed asynchronous, but it does not return a promise. Instead it takes a callback. This is an extremely common pattern in JavaScript. If you have a function that needs to call another function that wants a callback, then you need to either keep with the callback pattern (no thank you) or essentially transform that callback pattern into a promise pattern. Let’s go…

waitFive().then(() => { |

See how the first statement in the waitFive function is a return. That lets you know that function is going to come back with an answer immediately. Within the new Promise() call we pass in a handler - a function that takes 2 arguments: resolve and reject. In the body of our handler, resolve and reject are not static variables - they’re functions, and we call them when we’re done, either because things went well or they didn’t. It’s just super neat that we’re able to call them from inside of a callback. This is possible due to the near magic of JavaScript closure.

…to chain promises and catch exceptions.

You should be sure you understand how promise chaining is done. Chaining is a huge advantage to the promise pattern and it’s great for orchestrating global timing concerns in your application - i.e. first I want this to happen and then this and then this.

Here’s what a chain looks like…

f() |

…where each of those handlers that we’re passing to the .then() functions can have payloads.

There’s some wizardry that the .then() function will do for us as well. It will coerce the return value of each handler function so that it returns a promise every time! Watch this…

f() |

Pay close attention to what’s happening here. The first .then() is returning a string, but we’re able to hang another .then() off of it. Why? Because .then() coerced "foo" into a promise with a payload of "foo". This is the special sauce that allows us to chain.

There’s a shortcoming with promises here by the way. Let me set it up…

f() |

The unfortunate remedy to this problem is…

let v1; |

That’s a bit hacky, but it’s a problem that’s solved very elegantly by async/await coming up.

…to save a promise so you can check with it at any point and see if it’s been resolved.

This is great for coordinating timing in a complex application.

This is a little trick that I use quite a bit, though I don’t think it’s very common. It’s quite cool though and I don’t see any drawbacks.

let ready = f(); |

What I’m doing is saving the result of my function call to a variable and then calling .then() on it any time I want throughout my codebase.

You might wonder why this is necessary. Wouldn’t the first call be the only one that needs to “wait” for the promise? Actually, no. If you’re creating code that must not run until f() is done, then you need to wait for it. It’s very likely that subsequent references to the promise happen when the promise is already resolved, but that’s fine - your handler code will simply run immediately. This just assures that that thing (f() in this case) has been done first.

…to write an asynchronous function using async instead of creating a promise and calling it using await instead of .then().

The async/await pattern is one that some clever folks at Microsoft came up with some years ago in C#. It was and is so great, that it’s made its way into other languages like JavaScript. It’s a standard feature in the most recent versions of Node.js, so it’s ready for you out of the box.

In JavaScript, async and await still use promises. They just make it feel good.

For defining the asynchronous function, instead of…

function f() { |

…you do…

async function f() { |

And the angels rejoice! That’s way more understandable code.

Likewise, on the calling side, instead of…

f().then(result => { |

…you do…

let result = await f(); |

Yay! How great is that.

It seems odd at first, but the statements that come after the line with await do not execute until after f() comes back with its answer. I like to mentally envision those statements as being inside of a callback or a .then() so I understand what’s happening.

As I eluded to before, this solves that nasty little problem we had with the promise calling pattern…

let value1 = await f1(); |

Notice that I was a bit more verbose in that I defined f2. I didn’t have to, but the code is far more readable and more importantly, value1 is available not only inside of f2, but also between the function calls and after both.

Very cool.

…to understand the difference between each of the following lines of code.

let x = f; |

The differences may not be obvious at first.

The first line makes x to be the asynchonous function that f is. After the first line executes, you would be able to call x().

The second executes f() and sets y equal to the resulting promise. After the second line executes, you would be able to use y.then() or await y to do something after f() resolves.

The third executes f() and sets z equal to the payload of the promise returned by f().

Let me finally add one random tidbit, and that is that you should understand that the async operator can be added to a fat arrow function just as well as a normal function. So you may write something like…

setTimeout(async () => { |

You can’t use await except inside of a function marked with async.

If you find yourself trying to call await but you’re not in an async function, you could do something like this…

(async () => { |

That simply declares and invokes a function that’s marked as async. It’s a bit odd, but it works a treat.

That’ll do it for asynchrony. Now head back to Level Up Your JavaScript Game! or move on to the next topic on arrays.

Level Up Your JavaScript Game!

A fellow developer recently expressed a sentiment I’ve heard and felt many times myself.

“There are a lot of JavaScript concepts I know, but I don’t think I could code them live in front of you right now.”

It’s one thing to understand the concept of a Promise or destructuring in JavaScript, but it’s quite another to be able to pull the code out of your shiver without a web search or a copy/paste.

There are so many concepts like this for me as a developer. They’re my gaps - the pieces I know are missing. I know they won’t take long to fill, but it’s just a matter of finding and making the time. My strategy is to…

Record them

As I become aware of these gaps, I write them on my task list. I may not get to them right away, and that’s fine. When I have a spare hour though, I turn to these items in my task list and then off I go, learning something new.Write into permanent memory storage

Computers can save things permanently with a single write. For me, it takes 4 or 5 writes. For example, a long time ago, I wanted to learn how to write a super basic web server in Node.js - from memory. So I looked it up and found something like this…var html = require('http');

html.createServer((req,res) => {

res.end('hi')

}).listen(3000)I found it, tried to memorize it, tried to write it from memory, failed, looked it up, and tried again as many times as it took until I could. Now I have it. I can whip it up in a hurry if I’m trying to show basic Node concepts to someone.

In counseling my friend on what JavaScript concepts would be beneficial to practice, I decided to compose this rollup blog post called Level Up Your JavaScript Game! to share more broadly.

There are 5 things I recommend you not only grok generally, but know deeply and can whip up on request…

The World's Quickest API

Sometimes you just need a quick API. Am I right?

I was working on a project recently and needed just that. I needed an API, and I didn’t want to spend a lot of time on it.

One of my strategies for doing this in days of old was to write up some code-first C# entities, reverse engineer the code to create an Entity Framework model, and serve it using OData. It was great and all that stuff is still around… still supported… still getting improved and released, so you could go that way, but that’s not how I made my last “instant API”.

My last one was even easier.

I found a node package called json-server that takes a JSON file and turns it into an API. Done. Period. End of story. A few minutes composing a JSON file if you don’t have one already and then a few lines of code to turn it into an API.

I also often use a node package called localtunnel that opens a local port up to the internet. Now I spend a few minutes writing a JSON file and 20 seconds opening a port and I have myself an API that I can share with the world.

For example. Let’s say I want to write an app for dog walkers.

Here’s some dog data…

{ |

Now let’s turn that into an API stat! I’m going to be thorough with my instructions in case you are new to things like this.

I’ll assume you have Node.js installed.

Create yourself a new folder, navigate to it, and run npm init -y. That creates you a package.json file. Then run touch index.js to create a file to start writing code in.

Now install json-server by running npm i json-server

The

iis short forinstall. As of npm version 5, the--saveargument is not necessary to add this new dependency to thepackage.jsonfile. That happens by default.

Finally, launch that project in your IDE of choice. Mine is VS Code, so I would launch this new project by running code .

Edit the index.js file and add the following code…

const jsonServer = require('json-server') |

Let me describe what’s going on in those few lines of code.

The first line brings in our json-server package.

The second line creates a new server much like you would do if you were using Express.

Lines 3 and 4 inject some middleware, and the rest spins up the server on port 1337.

Note that line 4 points to data.json. This is where your data goes. You can make this simpler by simply specifying a JavaScript object there like this…

server.use(jsonServer.router({dogs: {name"Rover"}})) |

But I discovered that if you use this method, then the data is simply kept in memory and changes are not persisted to a file. If you specify a JSON file, then that file is actually updated with changes and persisted for subsequent runs of the process.

So that’s pretty much all there is to it. You run that using node . and you get a note that the API is running on 1337. Then you can use CURL or Postman or simply your browser to start requesting data with REST calls.

Use http://localhost:1337/dogs to get a list of all dogs.

Use http://localhost:1337/dogs/1 to fetch just the first dog.

Or to create a new dog, use CURL with something like curl localhost:1337/dogs -X POST -d '{ "id":4, "name":"Bob", ...}

Now you have a new API running on localhost, but what if you want to tell the world about it. Or what if you are working on a project with a few developer friends and you want them to have access. You could push your project to the cloud and then point them there, but even easier is to just point them to your machine using a tunneler like ngrok or Local Tunnel. I usually use the latter just because it’s free and easy.

To install Local Tunnel, run npm i -g localtunnel.

To open up port 1337 to the world use lt -p 1337 -s dogsapi and then point your developer friend that’s working on the UI to fetch dogs using http://dogsapi.localtunnel.me/dogs.

Be kind though. You set your API up in about 4 minutes and your UI dev probably hasn’t gotten XCode running yet. :)

Deploying TypeScript Projects to Azure from GitHub Using Continuous Deployment

I’m working on a fun project called Waterbug. You can peek or play at github.com/codefoster/waterbug.

Waterbug is an app that collects data as you row on a WaterRower and visualizes it in an Angular 2.0 app.

It’s a fun app because it uses a lot of modern stuff. Modern stuff is usually the fun stuff, and that’s why it’s always nice to be working on a greenfield project.

So, like I mentioned, one of the components of this app uses Angular 2.0. Angular is itself written in TypeScript, and you’re strongly encouraged to write your Angular 2.0 apps using TypeScript. You don’t have to, but at least in my opinion, you’d be crazy not to.

TypeScript is awesome.

TypeScript makes everything more terse, more elegant, and easier to read, and it allows your tooling (Visual Studio Code is my editor of choice) to reason about your code and thus help you out immensely.

The important thing to remember about TypeScript and the reason I think for it’s rapid uptake is that it’s not a different language that compiles to JavaScript. It’s a superset of JavaScript. That means you don’t throw any of your existing work away. You just start sprinkling in TypeScript where it benefits you. If you’re like me though, it won’t be long before you’re addicted to using it everywhere.

When you’re working on a TypeScript project, you write in .ts files and those get transpiled from .ts files to .js files.

Herein lies our first question.

Should we check those .js files (and also the .js.map files that are created by default) into our code repository (GitHub in my case)?

The answer is no.

The .js code is derivative and does not belong in source control. Source control is for source files. The .ts files are our source files in this case.

If you start checking your .js files into source control, you’re inevitably going to end up with .ts files and their associated .js files out of sync. Hair pulling will surely ensue.

I’ve gone one step further and determined that I don’t even want to look at my .js files in my editor.

In Visual Studio Code, I can go to File | Preferences | Workspace Settings, which opens (or creates if necessary) my projects .vscode\settings.json file. Then I can sprinkle in a little magic dust and tell Code that I’m not so concerned with .js and .js.map files and I’d just rather they not show up in my File Explorer pane or in my global search results.

Here’s the magic dust…

{ |

If, however, you don’t check your .js files into GitHub, then when you configure Azure to do continuous deployment from GitHub, it’s not going to pull in any .js files and that’s what your users’ browsers really need to make the site run.

So this is where some people say “Oh, blasted! I’ll just check my .js files in and call it done”.

True that works, but it also incurs technical debt. Don’t do it. It’s not worth it. Stick to your philosophical guns and don’t make choices like this. It may cost a little more up front to figure out the right way, but you’ll be glad later.

So, where and when should the .ts files get transpiled?

The answer is that they should get transpiled in Azure and it should happen each time there’s a deployment.

Now, let’s dig in and figure out how to do this.

If you do a little research, you’ll find that when you wire Azure up to look at GitHub, it does a pull of the code every time you push to the configured branch. Then it runs a default deployment script if you haven’t specified otherwise.

To run some code for each deployment, you simply customize this deployment script. You do that by adding two files to the root of your project: .deployment and deploy.cmd. You could just create these files manually, of course, but it’s better to generate them. That way you have the latest recommended default script and it specifically made for the type of application you’re running.

To generate the default deployment script, you first need to have the Azure Xplat CLI tool installed, which is a breeze. Just do npm install -g azure-cli. If you already have it and haven’t updated it for a while, then run npm up -g azure-cli.

After you have the azure-cli tool, you need to login to your Azure subscription. This is a lot easier than it used to be.

Simply type azure login. That will generate a little code for you and then ask you to go to a website, login, and enter your code. From that point forward, you’re able to access your Azure goodies from your command line. CLI FTW!

Once you get that, just go to the root of your website project (at the command line) and then run…

azure site deploymentscript --node |

This will create the .deployment and deploy.cmd files.

Okay, now we just have to customize the deploy.cmd file a bit.

If your deployment script looks like mine, then there’s a part that looks like this…

:: 3\. Install npm packages |

That script runs npm install to install your npm dependencies. It adds the --production flag to indicate that developer dependencies should be skipped since this is not a dev box - it’s the real deal!

Just after an npm install, you’re ready for the meat of the matter. It’s time to turn all of your .ts files into .js files.

To accomplish this, I added this just after step 3…

:: 4\. Compile TypeScript |

The first line is obviously a comment.

The echo shows what’s going on in the console so you can find it in the log files and such.

The last line calls :ExecuteCmd (which is a function that comes with the default deployment script) and asks it to run TypeScript’s commandline compiler (tsc) using node and pointing it to the deployment target. The deployment target is the /site/wwwroot directory that contains your site. The command explicitly uses the tsc command that’s in the deployment target’s node_modules\typescript\bin folder. That should be there because we have typescript defined as one of the projects dependencies in the package.json. Therefore the npm install from a few lines up should have installed typescript. Another strategy would be to install typescript globally, but I opted for this method.

And that’s really all there is to it. I like to jump over to my SCM site (

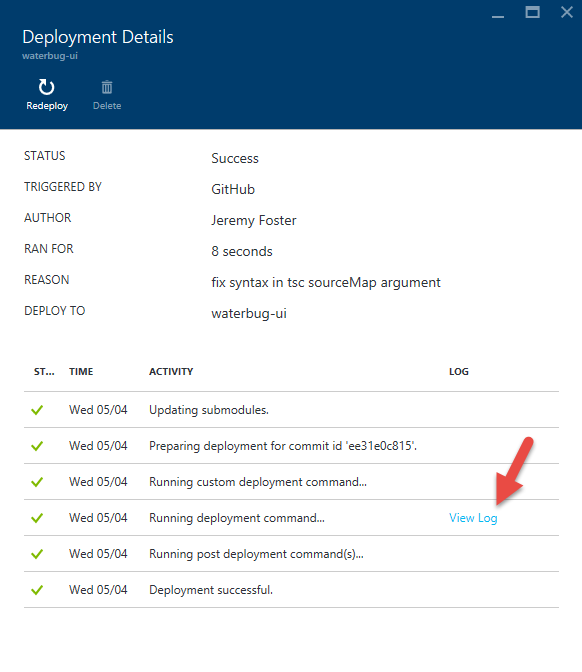

If you look in the list of deployments in your Azure portal, you can actually double-click on the latest deployment and then click on View Log to see the console output that was captured when this deployment script ran…

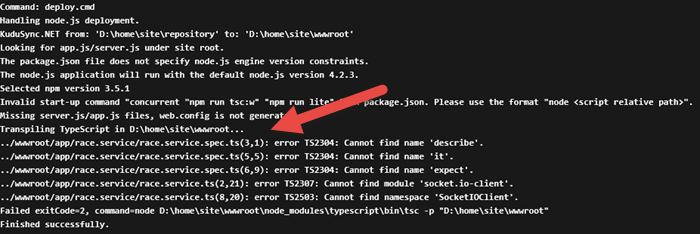

In the log, you can see our echo and that the transpilation process has occurred. Don’t worry about the errors that are thrown. Those are expected and didn’t stop the process from completing.

Building Things Using Fusion 360 and JavaScript

I like making things.

I used to mostly just make things that show up on the computer screen - software things. Lately, however, I’ve been re-inspired to make real things. Things out of wood and things out of plastic and metal and fabric and string.

The way I see it, we design things either manually or generatively.

By manual I mean that I conceive an idea then design and build it step by step. I - the human - am involved every step of the process. Imperative code is manual. Here’s some pseudocode to describe what I’m talking about…

// step 1 |

See what I mean?

I’m not arguing that this sort of code and likewise this sort of technique for building is not essential. It is. I am, however, going to propose that it’s often not altogether exciting or inspiring. The reason, IMO, is that the entire process is no greater than the individual or organization that implements it. An individual only has so many hours in the day and is even limited in ideas. An organization can grow rather large and put far more time and effort into a problem and obviously generate more extensive results. But the results are always linearly related to the effort input - not so exciting.

By generative I mean that instead of creating a thing, I create rules to make a thing. The rules may be non-deterministic and the results completely unexpected - even from one run to another. The results often end up looking very much like what we find in nature - the fractal patterns in leaves, the propagation of waves on the water, or the absolute beauty of ice crystals up close.

What’s exciting is when an individual or organization puts their time and effort into defining rules instead of defining steps. That is, after all, the way our own brains work, and in fact, that’s the way the rest of nature works too. It’s amazing and awesome and I would venture to say it’s even miraculous.

I think a lot of my ideas on the matter parallel and perhaps stem from Stephen Wolfram’s book A New Kind of Science.

Most of the book is about cellular automata. The simple way to understand these guys is to think back to Conway’s Game of Life. The game is basically a grid of cells that each have a finite number of states - often times two states: black and white. Initially, the cells in the grid are seeded with a value and then iterations are put into place that may change the state of the cells according to some rules.

The result is way more interesting than the explanation. The cell grid appears to come to life. The fascinating part is that the behavior of the system is usually not what the author intended - it’s something emergent. The creator is responsible for a) creating an initial state and b) creating some rules. The system handles the rest. It usually takes a lot of trial and error if the intention is to create something that serves some certain purpose.

Check out Wikipedia’s page on cellular automata, and specifically look at Gosper’s Glider Gun.

I don’t know about you, but I find that completely awesome.

Okay, so when are you going to get to the point of the blog post, codefoster?

Calm down. It’s called build up. :)

First, let me say that generating graphics in either 2D or 3D is nothing conceptually new. What I like about discovering and learning an API for CAD software, though, is that I can not only generate something that targets the screen, I can generate something that targets the 3D printer or the laser cutter. That’s all sorts of awesome!

The example I’m going to show you now is a simple one that I hope will just get your gears turning. You could, by the way, take that literally and generate some gears and get them turning.

If you don’t have Fusion 360, go to fusion360.autodesk.com and download it. If you’re a hobbyist, maker, student, startup type you can get it for free.

If you’re new to the program, let me suggest the learning material on their website. It’s great.

After you install Fusion 360, the first thing you need to do is launch the program. This API is attended. It requires that you open the program and launch the scripts. I have suggested to the team at Autodesk to research and consider implementing unattended scenarios as well.

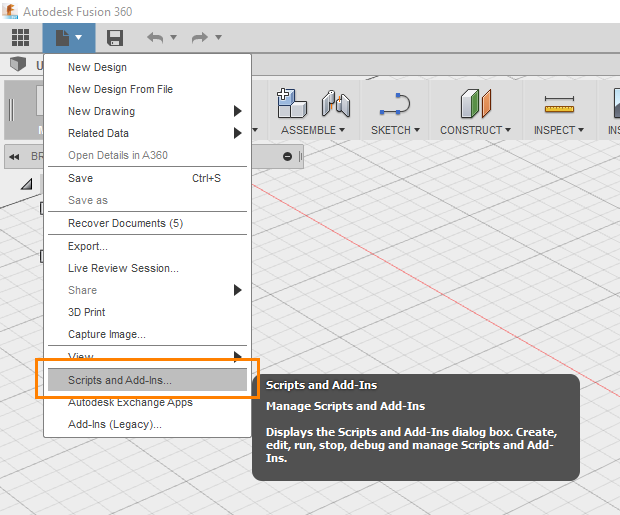

Now launch the Scripts and Add-ins… option from the File menu…

Don’t be confused by the Add-Ins (Legacy) option in the same File menu. That’s for an old system that you don’t want to use anymore.

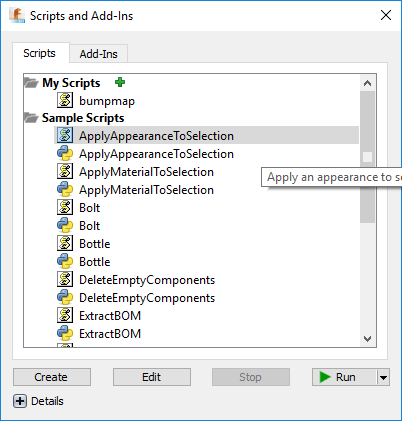

That should launch the Scripts and Add-Ins dialog…

There are two tabs - Scripts and Add-Ins. They’re the same thing except that Add-Ins can be run automatically when Fusion 360 starts and can provide commands that the user can see in their UI and invoke by hitting buttons. Add-Ins ask you to implement an interface of methods that get called at certain times. If you simply click the Create button on the Add-Ins page, it will make you a sample with most of that worked out for you already.

Let’s focus on the Scripts tab for now.

You’ll see a number of sample scripts in there. Some of them will have the JavaScript icon…  …and others will have the Python icon…

…and others will have the Python icon…

The Fusion 360 API supports 3 languages: C++, Python, and JavaScript.

Above those, you’ll see the My Scripts area that contains any scripts you have written or imported.

It’s not entirely clear at first how this works. Let me explain. If you click Create at the bottom, you’ll get a new script written in a strange folder location. It’s good because it gives you the right files (a .js file, an .html file, and a .manifest), but it’s bad because it’s in such an awkward location. The best thing to do in my opinion is to hit create and get the sample code files and then move the files and their containing folder to wherever you keep your code. Then you can hit the little green plus and add code from wherever you want.

One more nuance of this dialog is that if you click the Edit button, Fusion 360 will launch an IDE of its choice. I think this is weird and should be configurable. If I edit a JavaScript file it launches Brackets. I don’t use Brackets. I use Visual Studio Code. It doesn’t end up being that much trouble, but it’s weird.

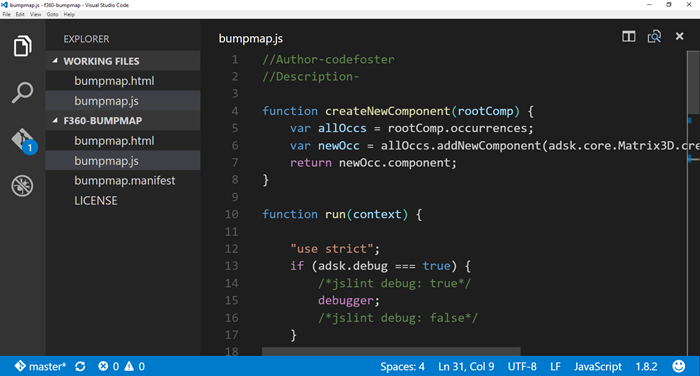

To edit my code, I just go to my command line to whatever directory I decided to put it in and I type…

code . |

That launches Code with this directory as the root. Here’s what I see…

There you can see the .html, .js, and .manifest files.

I’m not going to take the screen real estate to walk you entirely through the code. You can see it all on GitHub. But I’ll attempt to show you what it’s doing at a high level.

Here’s the code…

<style type="text/css">.gist {width:700px ;} |

Let’s break that down some.

The createNewComponent function is just something I made. That’s not a special function the API is expecting or anything. The run function is, however, a special function. That’s the entry point.

Essentially, I’m creating a 20x20 grid, prompting the user to select a body, and then doing a 2D loop to copy the selected body. The position is all done using a transformation that shifts each body into place and then offsets it a certain amount in the Z direction. In this case, I’m just using a random number, but I could very well be feeding data in to this and doing something with more meaning.

Watch this short video as I create a cube and then invoke this script on it…

So, here is where you just have to sit back and stare at the ceiling and think about what’s possible - about all the things you could generate with code.

My example was a basic, linear iterator. Perhaps, however, you want to create something more organic - more generative?

Check out this example by Autodesk’s own Mike Aubry (@Michael_Aubry) where he uses Python code to persuade Fusion 360 to build a spiral using the API.

That has a bit more polish than my gray cubes!

If you build something, make sure you toss a picture my way on Twitter or something. I’d love to see it.