Posts tagged with "iot"

Index of my Raspberry Pi Posts

I’ve been doing a lot of hacking on the Raspberry Pi, and I’ve written a few articles on the topic. I’ve assembled all of my posts here for easy access.

- Accidental Old Version of Node on the Raspberry Pi - I beat my head against a wall for a long time wondering why I wasn’t able to do basic GPIO on a Raspberry Pi using Node. Even after a fresh image and install, I was getting cryptic node error messages when I ran my basic blinky app. Lucky for me (and perhaps you) I got to the bottom of it and am going to document it here for posterity.

- The Most Basic Way to Access GPIO on a Raspberry Pi - I’m always looking for the lowest level understanding, because I hate not knowing how things work. On a Raspberry Pi, I’m able to write a Node app that changes GPIO, but how does that work? Turns out it’s pretty interesting. I’ll show you.

- Easy and Offline Connection to your Raspberry Pi - Getting a Raspberry Pi online is really easy if you have an HDMI monitor, keyboard, and mouse, but what about if you want to get connected to your Pi while you’re, say, flying on a plane?

- Wifi on the Command Line on a Raspberry Pi - How to configure wireless connections on your Raspberry Pi from the command line.

Help Me Design a Candy Dispenser

I’m going to keep much of my latest project under wraps for now, but I need your help designing one piece of it.

I’m looking to create a device that is small and light and will dispense one piece of candy at a time programmatically.

Here are the requirements…

- Light - no more than 1oz

- One at a time - it needs to dispense candy one piece at a time. If I have to constrain it to hold only round candy of a set size, then so be it, but ideally it would hold candy of varying size and shape. Best of all, it would hold Runts bananas.

- It has to operate on a 3.7V power supply

- It has to be an electro-mechanical assembly that I can control with an IoT device (an easy req to fulfill I think)

- It should hold some reasonable number of candy pieces - say at least 10.

So far I’ve thought of the following…

- An Archimedes screw that “pumps” candy up from the bottom of a hopper (like this)

- A wheel that sits horizontal and on each incremental rotation lets one candy piece at a time fall into a hole in the top and lets another fall out a hole at the bottom

- A screw that sits on a horizontal axis that is loaded with candy pieces (one per screw rotation… not in a hopper) and pushes one piece out at a time when rotated 360 degrees. A bit like snack vending machines.
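If the wheel idea wins, the control side is mostly arithmetic. Here's a rough sketch, assuming (hypothetically) that a stepper motor turns a wheel with evenly spaced pockets that each carry one piece. The 200-step motor and 8-pocket wheel are placeholder numbers, not parts I've picked.

```javascript
// Hypothetical control math for the rotating-wheel dispenser idea.
// ASSUMPTION: a stepper motor turns the wheel, and the wheel has
// evenly spaced pockets, each carrying one piece of candy.
function stepsPerPiece(stepsPerRevolution, pocketCount) {
  const valid =
    Number.isInteger(stepsPerRevolution) && stepsPerRevolution > 0 &&
    Number.isInteger(pocketCount) && pocketCount > 0;
  if (!valid) {
    throw new RangeError('expected positive integer step and pocket counts');
  }
  // If the pockets don't divide a revolution evenly, rounding would slowly
  // walk the pockets out of line with the top and bottom holes.
  if (stepsPerRevolution % pocketCount !== 0) {
    throw new RangeError('pocketCount must divide stepsPerRevolution evenly');
  }
  return stepsPerRevolution / pocketCount;
}

// Total steps to dispense `pieces` candies: one pocket advance per piece.
function stepsToDispense(pieces, stepsPerRevolution, pocketCount) {
  return pieces * stepsPerPiece(stepsPerRevolution, pocketCount);
}

// Example: a 200-step motor and an 8-pocket wheel.
console.log(stepsPerPiece(200, 8)); // 25
console.log(stepsToDispense(3, 200, 8)); // 75
```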

If you can come up with any other brilliant ideas, I’d be glad to hear them.

Thanks in advance.

3D Printed Tiny Sea Creatures

I hack in Microsoft’s Maker Garage whenever I can. The Maker Garage is a relatively new addition to The Garage, which you may already be familiar with. The Maker Garage is a space where Microsoft Employees can come build things - hardware things, electronic things, software things, any things.

I’m a recent convert to the hardware side. I studied a bit of both hardware and software in my undergraduate Computer Engineering course many years ago, but it’s been almost entirely software for me since then. This return to resistors, capacitors and NPN transistors is a lot of fun, and in my role as a Developer Evangelist at Microsoft, it’s excellent for engaging with beginners, young folks, and anyone looking for a bit of a change from the sometimes humdrum enterprise app engineering space (hand raised!).

My last project in the Maker Garage was not a typical Azure-backed, IoT project, however. This time the customer was my 3-year-old son. My wife notified me that we needed some random little figurines to complete a birthday gift, and why should we mail order figurines from across the globe when we can print them?! That’s right… no good reason.

There are a number of 3D printers in the Maker Garage, but the most recent addition is a Form 1+ stereolithographic printer by FormLabs.

I first learned about the Form 1 watching the Netflix special Print the Legend some weeks ago. If you have a Netflix subscription, I recommend the documentary.

The Form 1 does not use the more popular filament extrusion technique employed by most of the 3D printers you see in the headlines. It uses stereolithography. Rather than melting plastic filament and shooting it out of a nozzle, a stereolithographic printer builds your model in a pool of liquid resin. The resin is photo-reactive and cures when exposed to a UV laser. This means you can shoot a tiny spot in the resin and it will harden. That’s perfect for turning goo into a ‘thing’.

The ‘things’ I decided to print were sea creatures. My son likes them, and they’re easy to find on Thingiverse.

I found an octopus, a submarine (not a creature I understand), a crab, a ray, an angler fish, a seahorse, and a whale.

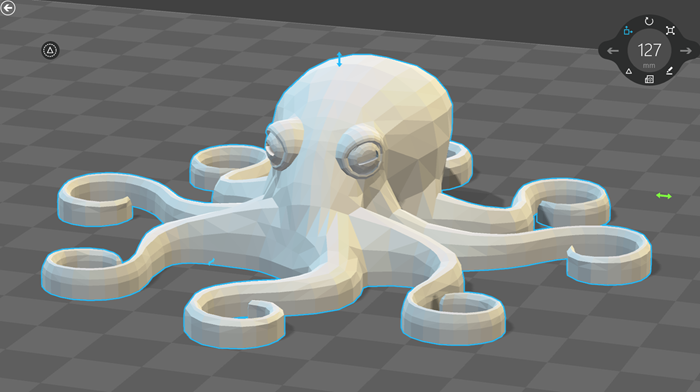

Here’s the model of the octopus opened in 3D Builder that comes with Windows - a very slick touch-smart 3D model viewer, editor, and printer driver…

Even if you didn’t know it, you could probably intuit that 3D models, like 2D vector graphics, can be scaled to most any size. Unlike 2D vector graphics, though, they don’t always maintain their fidelity. 2D vector graphics tend to fully describe shapes. A circle is described as a circle, so it renders fine whether you set its diameter to a millimeter or a kilometer.

3D models in .stl format - the common format for 3D printers - on the other hand are meshes. These meshes can lose their fidelity when you scale your model up. In my case here, though, I’m scaling these guys way down, so quality is not a problem.
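To put a number on the vector-versus-mesh point, here's a small sketch. It treats a mesh circle as a regular polygon with a fixed number of segments (a simplification: .stl files are triangle meshes, but their curved edges are approximated the same way) and computes how far each flat facet sags inside the true circle.

```javascript
// Largest gap between a true circle and a regular polygon inscribed in it,
// i.e. how far each flat facet sags inside the curve: r * (1 - cos(PI / n)).
function facetError(diameter, segments) {
  const radius = diameter / 2;
  return radius * (1 - Math.cos(Math.PI / segments));
}

// The same 32-segment circle at three sizes (all values in millimeters).
for (const diameter of [1, 10, 1000]) {
  console.log(`diameter ${diameter} mm: facets sag up to ${facetError(diameter, 32).toFixed(4)} mm`);
}
```

The segment count is baked into the file, so the error grows in proportion to the size: at 1 m the facets sag 1000 times further than at 1 mm. Shrinking a model hides its facets; enlarging it exposes them.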

I use Autodesk Fusion 360 for my modeling, but in this case I was able to import the .stl files directly into the software FormLabs ships with the printer. It’s the best printer driver software I’ve seen yet from a manufacturer and allowed me to scale, orient, layout, and print all seven of my characters with no trouble at all. I wish I had a screen shot of the layout just before I hit print, but I didn’t save that.

I scaled each character to somewhere between .75” and 1” on its longest axis.
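Sizing to a target length on the longest axis is just a uniform scale factor. Here's a sketch, assuming you already know the model's bounding box in millimeters (the FormLabs software handles this for you; this is not its code). The 80 x 60 x 50 mm box is a made-up example, and one inch is 25.4 mm.

```javascript
const MM_PER_INCH = 25.4;

// Uniform scale factor that makes the model's longest bounding-box axis
// equal `targetInches`. Scaling all three axes by the same factor keeps proportions.
function scaleToLongestAxis(boundingBoxMm, targetInches) {
  const longest = Math.max(boundingBoxMm.x, boundingBoxMm.y, boundingBoxMm.z);
  if (!(longest > 0)) {
    throw new RangeError('bounding box must have a positive size');
  }
  return (targetInches * MM_PER_INCH) / longest;
}

// Hypothetical model measuring 80 x 60 x 50 mm, sized for the .75" to 1" range.
const box = { x: 80, y: 60, z: 50 };
for (const inches of [0.75, 1]) {
  const factor = scaleToLongestAxis(box, inches);
  console.log(`${inches}": scale by ${(factor * 100).toFixed(1)}%`);
}
```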

You have a chance to choose the resolution of your print, and your decision has a linear impact on the print time. I chose .1mm layers at an estimated 1 hour print instead of upgrading to .05mm and adding another hour.
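The linear relationship comes from the layer count: the printer cures one layer at a time, so halving the layer height doubles the number of layers. Here's a quick sketch of the arithmetic, assuming (as a simplification) that each layer takes roughly the same time; the 1-hour baseline is the estimate I got at .1mm.

```javascript
// Layers needed for a part of the given height. The tiny epsilon absorbs
// floating-point noise, since 25.4 / 0.1 may not come out as exactly 254.
function layerCount(heightMm, layerHeightMm) {
  return Math.ceil(heightMm / layerHeightMm - 1e-9);
}

// Rescale a known print-time estimate to another layer height,
// assuming each layer takes roughly the same time to cure.
function estimateHours(baseHours, baseLayerMm, newLayerMm) {
  return baseHours * (baseLayerMm / newLayerMm);
}

console.log(layerCount(25.4, 0.1)); // 254 layers for a 1"-tall part
console.log(layerCount(25.4, 0.05)); // 508 layers
console.log(estimateHours(1, 0.1, 0.05)); // 2
```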

And here are my results.

I was impressed.

Take a look at a closeup of the whale…

You can see the layering. That’s quite fine, and as I mentioned, the layers could have been half as thick had I had more patience.

The piece that really impressed me was the tiny seahorse…

That’s just an itty bitty piece and it’s looking so smooth and detailed. Check out the small snout, dorsal fin, and tail. The same is true with the octopus legs.

One of my favorite things about the output of a stereolithographic printer is the transparent material. I much prefer a transparent, color-agnostic piece to the often random filament color that you end up with in community 3D printers. You can get tinted resin for stereolithographic printers too, but the results are still models that are at least translucent.

I hope you enjoy this report out. Feel free to add suggestions or questions in the comments section below.

Command Monkey

Alright, this is going to be fun. The process is going to be fun, and the end game is going to be even more so.

Let me paint a picture of the final product. I have a monkey toy on the table before me. I then hold my Microsoft Band up to my mouth and talk into it like Maxwell Smart. I say two simple words - “Monkey, dance!”

And in no time flat, the monkey toy obeys my command and is set into motion.

The whole thing reminds you of Tweet Monkey and it should. This is Tweet Monkey’s older and slightly more involved brother.

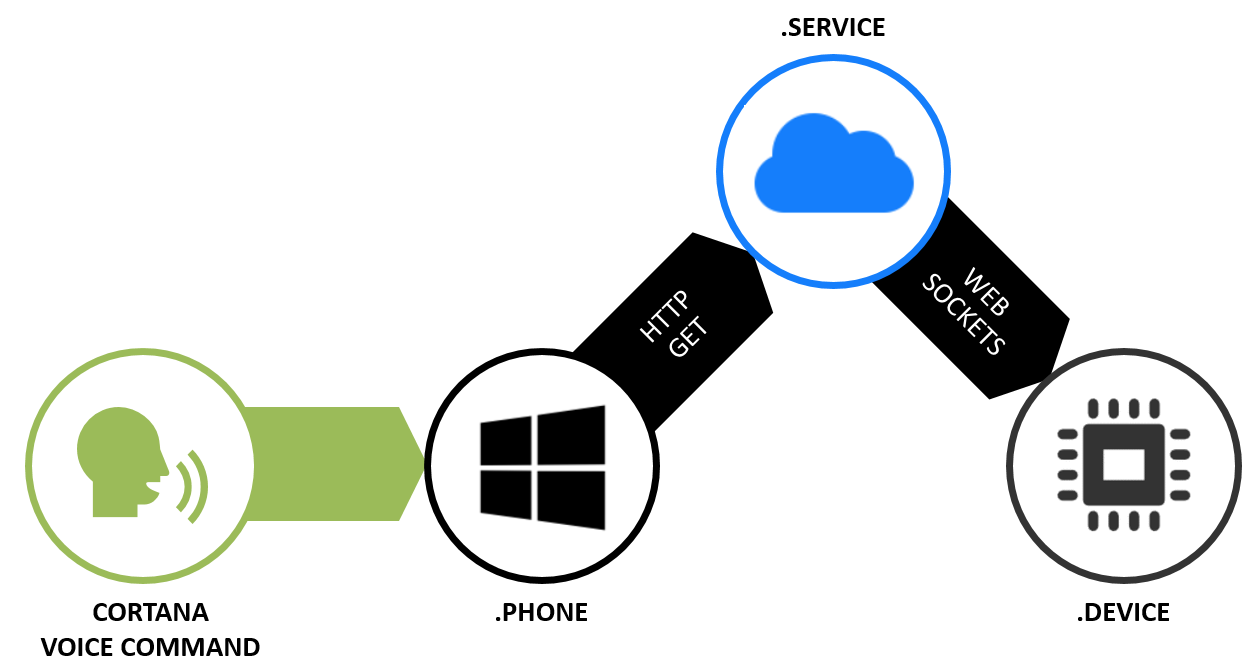

Where Tweet Monkey was a device to cloud scenario, Command Monkey is a Cortana to phone app to cloud to device scenario. Where Tweet Monkey relied on the Twitter Streaming API (which is very cool), Command Monkey involves our very own streaming API using web sockets.

I know it sounds like it’s going to be a lot of code, but it’s really not. You’ll see. Let me just say too that if for some reason you don’t have any interest in going through this step by step, then don’t. Just go grab the code on GitHub, because that’s how we roll.

I’m going to be using the free community version of Visual Studio and the Node.js Tools for Visual Studio. You could obviously use anything beyond an abacus to generate ASCII, so let’s not get opinionated here. Use what you love. It should go without saying that you’ll need Node.js installed to make this work. That can be found at nodejs.org.

I’m going to host my Node.js project in Azure and in fact, I’m going to get it there in a rather cool way. I’m going to use the cross platform CLI for Azure to create the site and then I’ll use git deployment to publish the app.

The architecture diagram is going to look something like this…

The part about speaking the command into a Microsoft Band just looks like special sauce, but you get that for free with a Band. The Band already knows how to talk to Cortana on your phone.

You will need to teach Cortana to talk to your app, so let’s do that first. Let’s build a Windows Phone app.

Building the Phone App

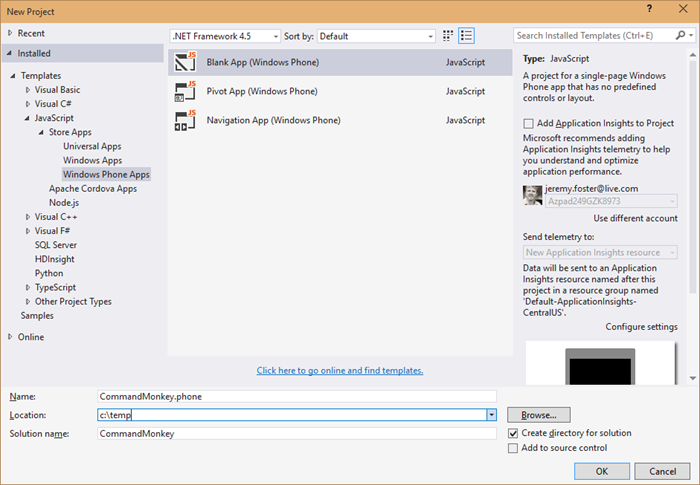

In Visual Studio, hit File | New | Project and create a blank Windows Phone App using JavaScript called CommandMonkey.phone. Call the solution just CommandMonkey. Like this…

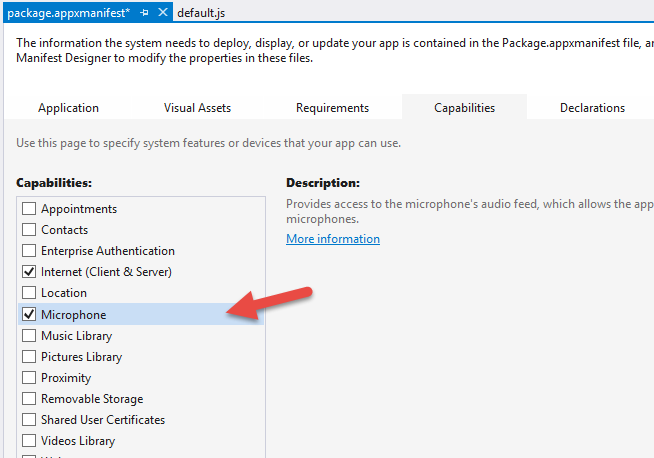

In order to customize Cortana, we need to define a voice command and that requires the Microphone capability. To add that, double click on your package.appxmanifest, go to the Capabilities tab, and check Microphone…

Now we need to create a Voice Command Definition (VCD) file. This file defines to Cortana how to handle the launching of our app when the user talks to her. Here’s what you should use…


The interesting bits are lines 6 and 9-14. The combination of the prefix with the command name means that we’ll be able to say “Monkey dance” and Cortana will understand that she should invoke the Command Monkey app and use “dance” for the command.

You’ll notice that I have a second command in the phrase list for the command - chatter. I don’t currently have a mechanical monkey capable of both dancing and chattering, but you can imagine a device capable of doing more than one action and so the capability is already stubbed out.

Next we need to register this VCD file in our phone app. When we do this and then install the app on a phone, Cortana will then have awareness of the voice capabilities of this app. She’ll even show it to the user when they ask Cortana “What can I say?” To do that, open the js/default.js file and add this to the beginning of the onactivated function…

var sf = Windows.Storage.StorageFile;

And there’s one more step to completing the Cortana integration. We need to actually do something when our application is invoked using this voice command.

Still in the default.js, add this after the if statement that is looking for the activation.ActivationKind.launch

else if (args.detail.kind === activation.ActivationKind.voiceCommand) {

Now let’s talk about what that does.

The if statement we hung that else if off of is checking to see just how the app was launched. If the user clicked the tile on the start screen, then the value will be activation.ActivationKind.launch. But if they activated it using Cortana then the value will be activation.ActivationKind.voiceCommand, so this is simply how we handle that case.

The way we handle it is to read the parsed semantic interpretation from the event argument and then send whatever command the user spoke to the CommandMonkey.azurewebsites.net website.
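Here's a plain-JavaScript sketch of that branching, pulled into functions so it can run outside the phone. The `ActivationKind` values and the `args` object are stand-ins for the real WinRT objects. The property path `result.semanticInterpretation.properties.command` assumes the VCD phrase list is labeled `command`, and the `?command=` query-string shape of the `/api/command` request is also an assumption; check both against your own VCD and service.

```javascript
// Stand-in for activation.ActivationKind (the values here are placeholders).
const ActivationKind = Object.freeze({ launch: 'launch', voiceCommand: 'voiceCommand' });

// Returns the spoken command for a voice activation, or null for a normal tile launch.
// ASSUMPTION: the VCD phrase list is labeled "command", so the recognized
// word shows up under semanticInterpretation.properties.command.
function getSpokenCommand(args) {
  if (args.detail.kind !== ActivationKind.voiceCommand) return null;
  const values = args.detail.result.semanticInterpretation.properties.command;
  return values && values.length > 0 ? values[0] : null;
}

// Builds the request the phone app would send to the web service.
// ASSUMPTION: the command travels as a query-string parameter.
function commandUrl(host, command) {
  return `http://${host}/api/command?command=${encodeURIComponent(command)}`;
}

// Usage with a mocked voice activation.
const voiceArgs = {
  detail: {
    kind: ActivationKind.voiceCommand,
    result: { semanticInterpretation: { properties: { command: ['dance'] } } },
  },
};
console.log(commandUrl('CommandMonkey.azurewebsites.net', getSpokenCommand(voiceArgs)));
```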

How did that website get created you might ask? I’m glad you did, because we’ll look at that next. As for the phone project, that’s it. We’re done. It’s not fancy, and in fact if you run it, you’ll see the default “Content goes here” on a black screen, but remember, we’re keeping this simple.

Building the Node.js Web Service

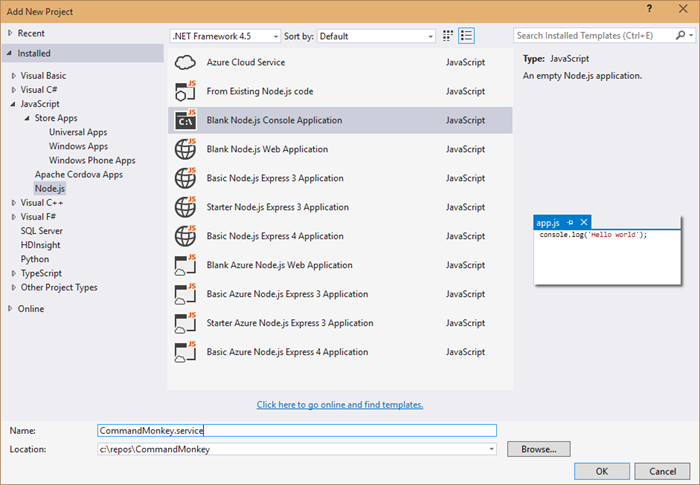

Visual Studio is able to hold multiple projects in a single solution. So far we have a single solution (called CommandMonkey) with a Windows Phone project (called CommandMonkey.phone).

Now we’re adding the web service.

Find the solution node in Visual Studio’s Solution Explorer and right click on it and choose Add | New Project…

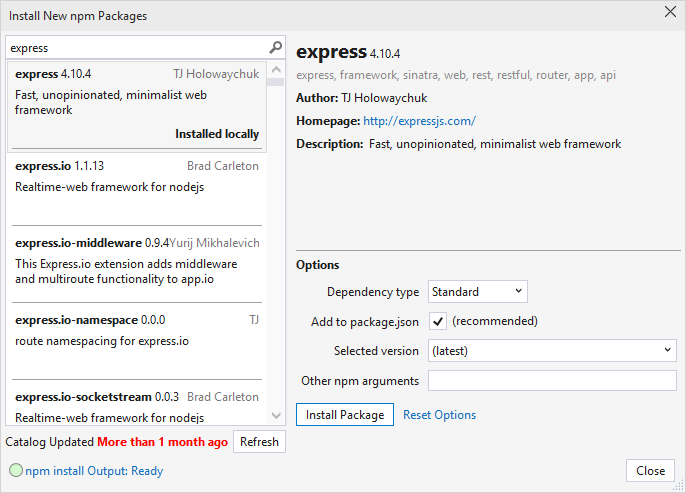

Now install the express and socket.io Node modules. You can either do it the graphical way or the command line way.

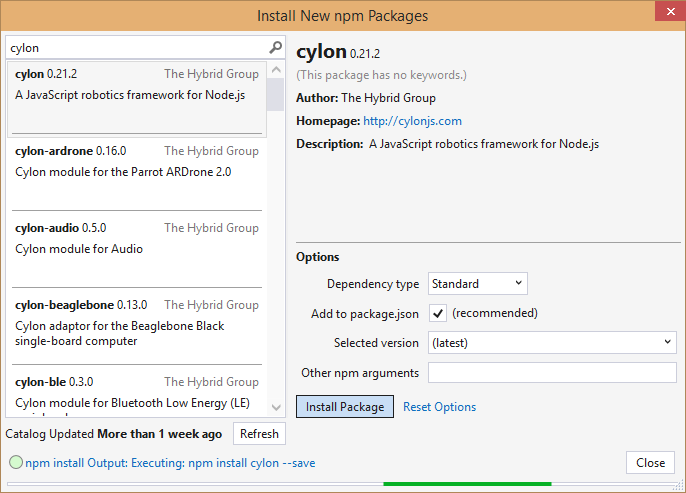

The graphical way is to right click on npm in the .service project and choose Install New npm Packages… Then search for and install express and socket.io. For each leave the Add to package.json checked so they’ll be a part of your project’s package.json.

Now the command line way. Navigate to the root of this project at a command line or in PowerShell and enter npm install express socket.io --save

Now open up the app.js and paste this in…

var http = require('http');

This is not a lot of code for what it’s doing. This is…

- creating a web server

- setting up web sockets using socket.io

- setting up a handler for when clients call the ‘setTarget’ event

- defining a route to /api/command which calls the “target” client with the specified command

That’s what I love about JavaScript and Node.js. The code is short enough to really be able to see the essence of what’s going on.
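Since the app.js listing above is cut off, here's a dependency-free sketch of the same idea that runs with plain Node. It is not the original code: instead of express and socket.io, it uses a hypothetical `TargetRegistry` class and an `EventEmitter` standing in for the device's socket, so the "remember one target, forward every command to it" pattern is visible on its own. It assumes the command arrives as a `command` query-string parameter.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for the server's "target" bookkeeping.
class TargetRegistry {
  constructor() {
    this.target = null; // socket of the device that should receive commands
  }

  // Mirrors the 'setTarget' handler: the most recent caller becomes the target.
  setTarget(socket) {
    this.target = socket;
  }

  // Forwards a command to the target; returns false if no device has registered.
  sendCommand(command) {
    if (!this.target) return false;
    this.target.emit('command', command);
    return true;
  }
}

// Mirrors the /api/command route and returns an HTTP status code.
// ASSUMPTION: the command arrives as ?command=<name>.
function handleCommandRequest(path, registry) {
  const url = new URL(path, 'http://localhost');
  if (url.pathname !== '/api/command') return 404;
  const command = url.searchParams.get('command');
  if (!command) return 400;
  return registry.sendCommand(command) ? 200 : 503;
}

// Usage: a fake device socket registers itself, then a request arrives.
const registry = new TargetRegistry();
const deviceSocket = new EventEmitter();
deviceSocket.on('command', (cmd) => console.log(`device received: ${cmd}`));

console.log(handleCommandRequest('/api/command?command=dance', registry)); // 503: no target yet
registry.setTarget(deviceSocket);
console.log(handleCommandRequest('/api/command?command=dance', registry)); // logs the command, then 200
```

Swapping the single `target` field for a `Set` of sockets would let several devices listen at once.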

Okay, the service is done, but it’s still local. We need to get this published.

We’re going to use Azure Websites feature called git deployment.

There’s something interesting about our project though. The git repository would exist at the solution level and include all of our code, but the folder that contains the Node.js project that we actually want published to Azure is in a subdirectory called CommandMonkey.service. So we need to create a “deployment file”. To do that just create a file at the root of the project called .deployment and use this as the contents…

[config]
project = CommandMonkey.service

And in typical fashion, we’re going to have a few files that we have no interest in checking in to source control, so make yourself a .gitignore file (again at the root of the CommandMonkey solution) and use this as the content…

node_modules

Now git commit the project using…

git init

Now you need to create an Azure website. Note, you need to already have your account configured via the Azure xplat-cli in order to do this. I’ll consider that task outside the scope of this article and trust you can find out how to do that with a little internet searching.

azure site create CommandMonkey --git

And of course, you can’t use CommandMonkey, because I’ve already used that one, but you can come up with your own name.

The --git parameter, by the way, tells the create command to also set up git deployment on the remote website and will also create a git remote in the local repository so that you’ll be able to execute the next line.

To publish the website to azure, just use…

git push azure master

…and that will push all of your code to a repository in Azure and will then fire off a process to deploy the site for you out of the CommandMonkey.service directory which you specified earlier.

There’s one thing you need to do in the Azure portal to make this work. If anyone knows how to do this from the xplat-cli, be sure to drop me a comment below. I’d love to know. You need to turn on web sockets. Just go to your website in the portal, go to the configuration tab, and find the option to turn on web sockets. Easy.

Whew, done with that. Not so hard. Now it’s time to write the Node.js app that’s going to represent the device.

Building the Device Code

As is often the case, the device project is the simplest project in the solution. It does, after all, only have one job - listen for socket messages and then turn the relay pin high for a couple seconds. Let’s go.

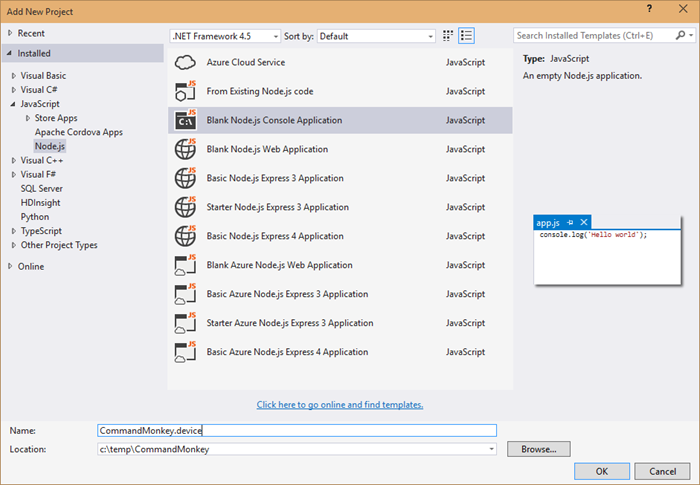

In Visual Studio, right click on the solution and Add | New Project… and add another blank Node.js app. This time call it CommandMonkey.device…

Using the technique above for installing npm packages, install the socket.io-client npm package, along with cylon, which the device code also requires.

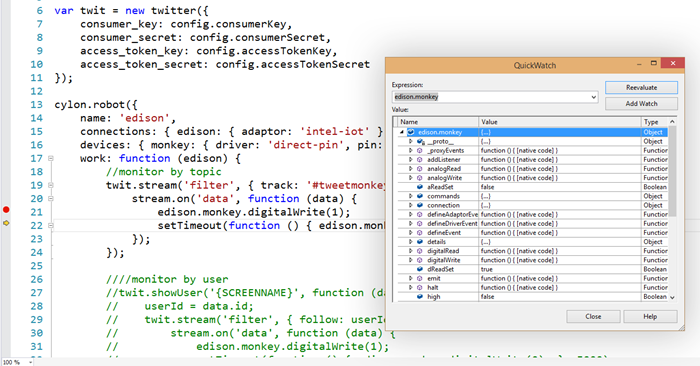

var cylon = require('cylon');

You can see that this code is connecting to our web service in Azure - CommandMonkey.azurewebsites.net.

It also requires and initializes the Cylon library so it can talk to the hardware in an easy, expressive, and modern way.

Cylon’s work method plays the role of a ready method, so that’s where we invoke the setTarget event. This socket event asks the server to save this socket as the “target” socket. That just means that when anyone triggers messages on the server, this is the device that’s going to pick them up. You may want to create this as an array and thus allow for multiple devices to be targets, but I’m keeping it simple here.

Finally, it handles the ‘command’ event that the server is going to pass it and simply raises the digital pin for 2 seconds.
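The device listing is cut off too, so here's a minimal sketch of just the register-then-pulse behavior. The `pin` object with a `digitalWrite(value)` method is a hypothetical stand-in for whatever pin API your hardware library exposes, and `socket` is anything with `emit` and `on` (a connected socket.io-client socket has both; here an `EventEmitter` plays the part). The `schedule` parameter defaults to `setTimeout` and is injectable so the logic can be tested without waiting.

```javascript
const { EventEmitter } = require('events');

const PULSE_MS = 2000; // the post raises the pin for 2 seconds

// Drive the pin high, then schedule it to go low again after durationMs.
// `pin` is a hypothetical object exposing digitalWrite(1 | 0).
function pulsePin(pin, durationMs = PULSE_MS, schedule = setTimeout) {
  pin.digitalWrite(1);
  schedule(() => pin.digitalWrite(0), durationMs);
}

// Ask the server to treat this socket as the target, then pulse the pin
// whenever a 'command' event arrives. A richer device could switch on the
// command name (e.g. "dance" vs. "chatter").
function startDevice(socket, pin, schedule = setTimeout) {
  socket.emit('setTarget');
  socket.on('command', (command) => {
    console.log(`command received: ${command}`);
    pulsePin(pin, PULSE_MS, schedule);
  });
}

// Usage with stand-ins: an EventEmitter plays the socket, and the "pin" just logs.
const fakeSocket = new EventEmitter();
const fakePin = { digitalWrite: (value) => console.log(`pin -> ${value}`) };
startDevice(fakeSocket, fakePin);
fakeSocket.emit('command', 'dance'); // pin -> 1 now, pin -> 0 about two seconds later
```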

To make this work, you need to deploy this project to your device. I use an Intel Edison, but this will work on any SoC (System on a Chip) that will run Linux. I am not going to repeat how to setup the Edison or deploy to it. You can find that in my series of Intel Edison posts indexed at codefoster.com/edison.

Once you get the code deployed to the device, you then run the CommandMonkey.phone project on your phone emulator or on your device. I run mine directly on my device so I can talk to my Microsoft Band.

And that should pretty much do it. If I haven’t made any gross errors in relaying this and you haven’t made any errors typing or pasting it in, then your scenario should work like mine. That is… you hold the action button on your Microsoft Band and say…

“Monkey, dance!”

…and a very short time later you get a message with the command on the screen.

I hope your mind is awhir like mine is with all the ideas for things you could do with this. We have real time device to device communication going on, and users really get excited when they utter a command and half a second later something is happening in front of them.

Go. Make.

I recorded a CodeChat episode with my colleague Jason Short (@infinitecodex) about Command Monkey. Here you go…

Making

I’m going to wander philosophically for a little bit about the very recent yet surprisingly welcome divergence of my job role.

Though the functions are much the same, the differences feel pronounced.

The primary job of a technology evangelist is to know technology, connect with people, and inspire.

That’s my definition at least.

I work hard to understand the concepts behind Microsoft technologies - our platforms, frameworks, applications, and libraries.

Then, I work to create genuine engagements with folks online and in person. The key word there is genuine. I’m not here to blow smoke. There’s no room in the industry for shills.

Then, I do my best to relay the bits about bits that will really turn people’s cranks. There really are a lot of developer concepts that make me go “whoa… that’s completely awesome.” I’m thinking about the first time I saw zen coding, the first time I saw SignalR, or the first time I wrote a Node.js app.

So what’s changing? Well, nothing and everything.

I’ll focus on the everything.

Think about three years ago. I mean architecturally speaking. Pretty different, right? The cloud as we know it was still nascent, and the Internet of Things concept was not really on most people’s minds. Fundamentally, app domains were narrow. Your total app was that code running on your client’s device, and perhaps included some cloud data and authentication.

We were still talking about the 3 screens that each person was going to have connected to the internet - not the 47 different gadgets, sensors, and other things.

Right now, we’re in an ambiguous time. Blogs and tweets abound with decent speculation about what the imminent future of technology will look like, but I feel like a few bombs have dropped - 3D printing… boom!, cloud-based platform as a service options… boom!, and an unlimited number of device form factors… boom! - and everyone’s still trying to get the ringing out of their ears and adjust to their new surroundings.

That’s where I’m at. I’m resurrecting formulas I studied in my electronics degree decades in the past. I’m doing Azure training on new features weekly it seems. I’m trying to keep up.

When I on-boarded at Microsoft, they said it’s going to feel like drinking from a fire hose. It hasn’t stopped and someone turned the hose up on me. And don’t think I’m saying I don’t LOVE IT.

One unavoidable component of this modern evolution is what you might call the Maker Movement. It’s not new, but I think it has new steam. It could just be me, but I don’t think so.

What gives the maker movement its appeal? It’s a subjective question, but for me, it’s just a perfect definition of what we have been doing anyway - we’ve been building things. We’ve been making. Today, however, the convergence of a number of technology categories has enabled someone with the maker gene to step into a few categories.

I can do some design, make a site, a cross-platform app, an electronic device, and an enclosure. If I’m ambitious (which I am), I can build a UAV and strap it on, then fly it around the neighborhood. Then I can upload the design, the code, and the end video all to Instructables, and get good feelings from giving back to the community.

And all of these things have something in common. It’s making. It’s essentially taking chaos and turning it into order. The meaning of the order is determined by the producer and interpreted by the consumer, but it’s usually order (about half of the YouTube videos out there notwithstanding :)

It’s all pretty inspiring. I’m thinking again about those “Awesome!” moments. I’m thinking about when my colleague Bret Stateham and I pulled a creation out of the laser cutter in the Microsoft Maker Garage the other day and then proceeded to look at each other and go “Awesome!”. I’m thinking about the first time I hooked into the elegance of Twitter’s Streaming API.

Putting a raw piece of plywood into a laser cutter and pulling out the intended shape is certainly the ordering of chaos, and so is writing code. It’s all just arranging atoms and bits into patterns that communicate something to the consumer.

Whether it’s code, wood, plastic, DC motors, or solder, it’s all media for making. It’s all awesome!

Using Visual Studio to Write Node for Devices

In previous blog posts, I explained how to setup your Intel Edison and how to start writing code for it. And in case you got here directly, I created a full index of my Edison posts to help you find what you need.

In this post, we’ll take a look at writing code for it using Visual Studio. Visual Studio, once you add the free Node.js Tools for Visual Studio plugin, happens to be pretty great at working with Node.js projects. It runs node behind the scenes, so it offers the following…

- Editing

- Intellisense

- Profiling

- npm

- TypeScript

- Debugging (local, remote, and Azure!)

That’s an impressive list, and we’ll take a look at a few of these features in this post.

Closer to the end of this post, I’ll tell you about my edref project. “edref” stands for Edison Reference project and is intended to get you started quickly with an Intel Edison project in Visual Studio. If you want to skip all the rhetoric and just download the reference project, I’ll understand.

Visual Studio

In case you missed the announcement in November, Visual Studio Community edition is now free. That’s huge. The Community edition is essentially the existing Professional edition, and facilitates a huge array of development scenarios. VS is intuitive, intelligent, and has a lot of Microsoft and community support behind it.

Installing Visual Studio Community 2013

To download Visual Studio, start at visualstudio.com, but know that visualstudio.com sort of has two meanings right now. Visual Studio is the IDE, but it’s also the ALM tools for taking projects of any size from conception to completion with teams of any size. So click the download button under Visual Studio the IDE and (at least at the time of this writing) you’ll get a direct link to the Visual Studio Community 2013 package.

Installation is pretty straightforward, and I won’t include a screenshot series, but one decision you will have to make is which features of the product to install. Do you want to play with LightSwitch? Windows Phone? Just check the boxes.

The next version of Visual Studio is Visual Studio 2015 and there’s pretty good support for it already (including a working version of the Node.js Tools), so if you want to be a trailblazer at work, go for it.

The Node.js Tools for Visual Studio

Installing the Tools

The Node.js Tools are found at nodejstools.codeplex.com. Go there and click the gigantic, unmistakable DOWNLOAD button. Again, the installation is presumably self-explanatory.

Creating a New Project

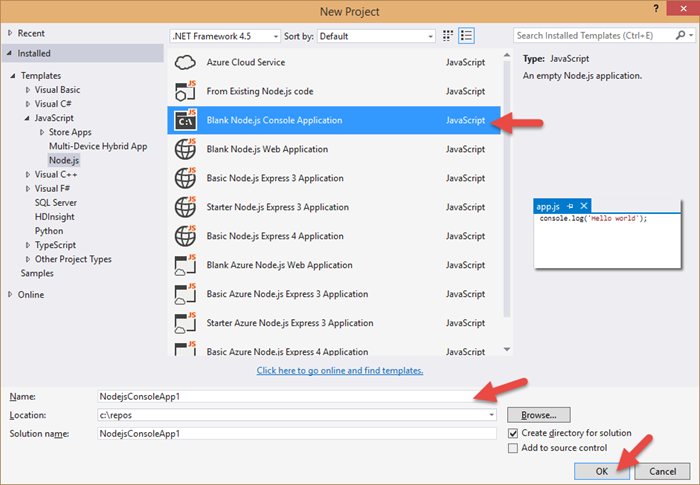

Creating a project using Node.js in Visual Studio begins like any other project type - with the File | New Project dialog…

There are a number of projects to choose from. For what we’re dealing with - writing Node.js for devices - the Blank Node.js Console Application will suffice. The rest are web applications for creating node apps on the server.

As per usual, give your project a name and location and OK away.

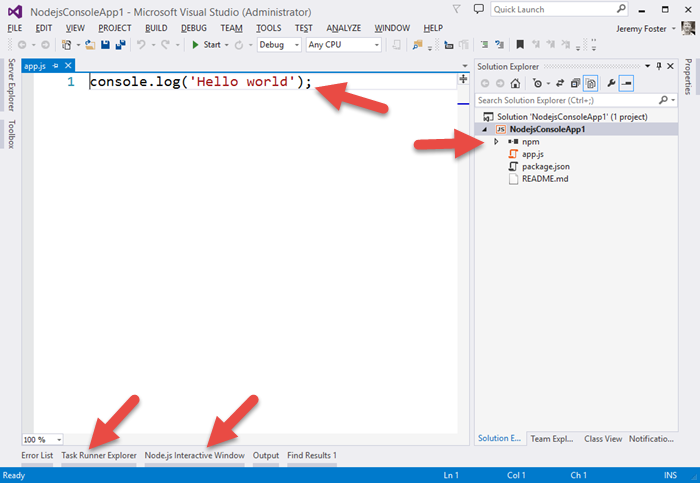

Now, once Visual Studio has done our bidding, let’s take a look at what we’ve got…

Let me point out a few things.

First of all, perhaps the simplest Hello World app in the world :) Next, notice the npm folder in the project. This gives you a graphical way of managing your npm packages. Some people like to do things the graphical way. I personally never use this, but for some people it’s just the thing. It actually works pretty slickly, too, showing you all of your global modules, all of the modules that are installed, and visually differentiating the packages that have been added to your package.json file.

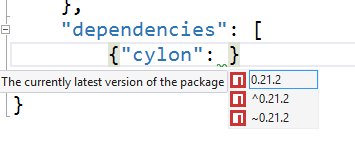

If you begin typing a dependency directly into the package.json, it gives you some Intellisense with an under-the-covers search of the npm repository, and it even helps you with the version…

I like that. I never type my dependencies in directly, but if I did, I’d certainly come to rely on it.

You can also use the npm dialog to install packages.

Again, nice, but I still keep a CLI open and execute npm install mypackage --save on my own. And of course, in that case, all of the installed packages are recognized by the tools, so I do enjoy having the visual indication that my package has been installed.

By the way, if you want to create a new Visual Studio Node.js project from an existing Node.js project that you have in your file system (perhaps recently cloned with git?) then check out my post Open an Existing Node.js Project in Visual Studio.

Using gulp for Deployment

We saw in the last post in this series how to wirelessly deploy your code to your device. You can keep doing it that way if you want, but there’s an easier way. Deploying your code is essentially a build task, and that’s what grunt and gulp are for.

NTVS also gives you a little bit of help with grunt and gulp. It’s hilarious that most people still say “grunt and gulp” whenever they talk about JavaScript task running frameworks. They do the exact same job, and I don’t imagine too many folks actually use grunt _and_ gulp. Rather, most likely use grunt if they have to and gulp if they can. gulp is better hands down. grunt tasks are defined through large JSON-style configuration objects, while gulp tasks are plain JavaScript code. So, instead of composing gigantic configuration blocks to attempt to define what sort of tasks you need to run, you can stay in code and do whatever the heck you want or need. And gulp is supposedly way faster too, although I haven’t done any of my own tests.

Adding some gulp tasks to your app is easy. You just add a gulpfile.js to the project and add the gulp npm module both globally and at the project level.

The edref project has a little bit of gulp built in to deploy your code to your Edison device. Here’s that gulpfile.js content so we can talk about what gulp tasks look like…

/// <vs BeforeBuild='jshint' AfterBuild='deploy' />

Notice the actual gulp tasks (where I put the <———— indicators). Those define what tasks are configured to run.

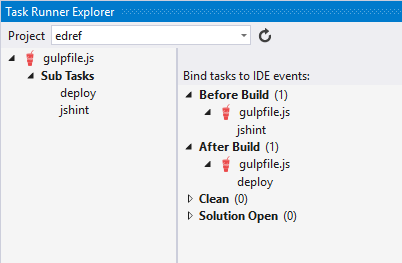

Now this next part I love. Visual Studio looks at these tasks in the gulpfile.js and adds them to a new pane called the Task Runner Explorer…

So jshint and deploy under Sub Tasks exist because they are defined in the gulpfile.js code.

Any time you need (or just want), you can right click one of those bad boys and hit Run and just run your tasks ad hoc individually. But also notice that under Before Build I have determined that the gulpfile.js/jshint task should run, and After Build the gulpfile.js/deploy task should run. That means that I can change a line of code, hit CTRL+SHIFT+B to build my project, and know that the targeted Edison (whether it’s on my desk or installed in a wall or gadget absolutely anywhere!) is going to contain the latest version of my project. That’s pretty awesome.
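To make the binding concrete, here's a small sketch that reads a `/// <vs ... />` comment like the first line of the gulpfile and reports which task is bound to which build event. It only illustrates the format as it appears in my gulpfile; `parseBindings` is a hypothetical helper, not how the Task Runner Explorer actually parses the comment.

```javascript
// Hypothetical parser for a binding comment such as
// "/// <vs BeforeBuild='jshint' AfterBuild='deploy' />".
// Returns an object mapping build events to task names.
function parseBindings(line) {
  const match = line.match(/^\/\/\/\s*<vs\s+(.*?)\s*\/>/);
  if (!match) return {}; // not a binding comment
  const bindings = {};
  const attr = /(\w+)='([^']*)'/g;
  let m;
  while ((m = attr.exec(match[1])) !== null) {
    bindings[m[1]] = m[2]; // e.g. BeforeBuild -> 'jshint'
  }
  return bindings;
}

console.log(parseBindings("/// <vs BeforeBuild='jshint' AfterBuild='deploy' />"));
// { BeforeBuild: 'jshint', AfterBuild: 'deploy' }
```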

I’m working on another task as we speak that will fire off the node process for that app so your device will not only get its code, but start cranking on it as well.

Remote Debugging

Another scenario unlocked for us using the NTVS tools is debugging - even remote debugging. I’m thrilled about this one.

Note that you can also check out the official documentation for the remote debugging feature of the NTVS tools.

I did a little research online and found that most people working with devices are resorting to the classic console.log method of debugging. When you’re writing Wiring code for an Arduino, for example, you use a serial debug library that allows you to send messages back to the console in your Arduino IDE. You can then add something like…

Serial.println("Made it here!");

This reminds me of JavaScript development circa 1999 and by no means turns my crank.

What does turn my crank is running my project, perhaps watching a couple of things happen on my gadget, then hitting a breakpoint in my code in Visual Studio and being able to hover over any variables to see what their value is and hit F5 to continue. That’s an awesome dev scenario!

Here’s how to do it.

Write or open a Node.js project targeting your device.

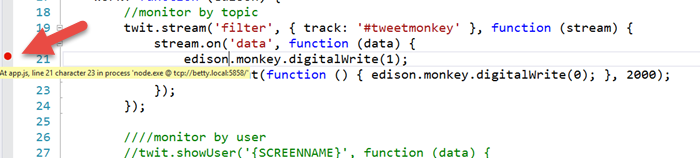

You can clone http://github.com/codefoster/tweetmonkey if you’re looking for inspiration. You can see more about my good friend TweetMonkey at codefoster.com/tweetmonkey, but in short, he taps into Twitter’s streaming API and waits for tweets with the hashtag #tweetmonkey. When he sees one, he comes to life, nods his head, squeaks, and clangs his cymbals. My 3-year-old loves it :)

Make sure you have a RemoteDebug.js deployed to your device

I like to include the RemoteDebug.js file in my project and check it into source so it’s ready to go for anyone that clones the project.

If you have the NTVS tools installed, you have this file. To see where it is, go to Tools | Node.js Tools | Remote Debugging Proxy | Open Containing Folder. Copy that file to your project or deploy it directly to your device. By the way, the gulp task that I include with the edref project (see later) copies all .js files, so if you put the RemoteDebug.js file in your project, it will get deployed automatically.
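Because the deploy task copies every .js file, RemoteDebug.js rides along as soon as it's in the project. Here's a hypothetical sketch of that selection rule using Node's built-in `path` module; `filesToDeploy` and the file list are made up for illustration, and skipping node_modules is my assumption for the sketch, so check the edref gulpfile for what it actually copies.

```javascript
const path = require('path');

// Hypothetical selection rule: deploy every .js file in the project,
// skipping anything under node_modules (an assumption for this sketch).
function filesToDeploy(projectFiles) {
  return projectFiles.filter((file) => {
    const parts = file.split(/[\\/]/);
    return path.extname(file).toLowerCase() === '.js' && !parts.includes('node_modules');
  });
}

const files = ['app.js', 'RemoteDebug.js', 'package.json', 'lib/helpers.js', 'node_modules/cylon/index.js'];
console.log(filesToDeploy(files));
// [ 'app.js', 'RemoteDebug.js', 'lib/helpers.js' ]
```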

Execute your node app

You normally execute your app by remoting to your device (using something like ssh root@mydevice.local), changing to your project folder, and then executing the line…

node app.js

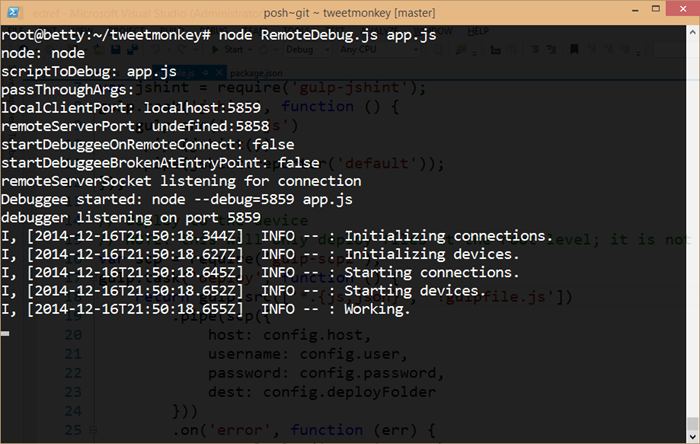

For remote debugging, you do it like this…

node RemoteDebug.js app.js

…and you should get some feedback like…

So you can see that the RemoteDebug.js file is launching a proxy for us using port 5858. And it introduces a term that was new to me - debuggee. I think I like it.
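If Visual Studio later can’t find your node process, one quick way to rule out the network is to check from the host PC that the proxy’s port answers at all. Here’s a minimal sketch using Node’s built-in net module; the isPortOpen name and the 2-second timeout are my own choices, and devicename.local is a placeholder for your device’s name.

```javascript
const net = require('net');

// Resolves to true if a TCP connection to host:port succeeds within timeoutMs,
// and to false on refusal, error, or timeout. It only shows that something is
// listening on the port; it doesn't speak the debugger protocol.
function isPortOpen(host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (result) => {
      socket.destroy();
      resolve(result);
    };
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once('connect', () => finish(true));
    socket.on('error', () => finish(false));
  });
}

// Example, run on the host PC (replace devicename with your device's name):
// isPortOpen('devicename.local', 5858).then((open) => console.log(open));
```

If this resolves to false while RemoteDebug.js is running on the device, the problem is name resolution or the network rather than Visual Studio.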

With that going, we’re ready for the next step.

Attach to Process from Visual Studio

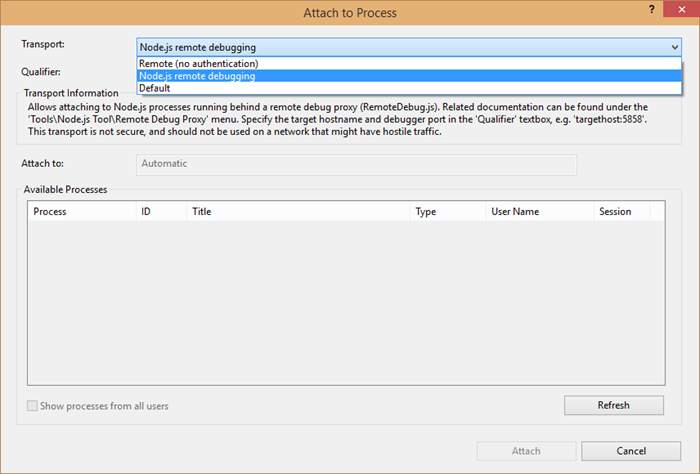

In Visual Studio, go to the Debug menu, choose Attach to Process…, and change the Transport to Node.js remote debugging.

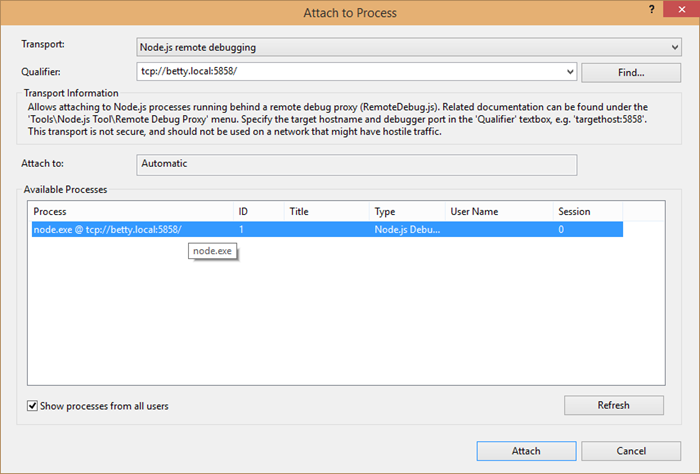

Now, in the Qualifier field, enter tcp://devicename.local:5858 and check the Show processes from all users box. You should see your node process appear in the Available Processes.

Attach.

Now set a breakpoint.

And in the case of the app we’re working with here (tweetmonkey), send a tweet with the hashtag #tweetmonkey and you should break at your breakpoint. As the French say, “Formidable!”

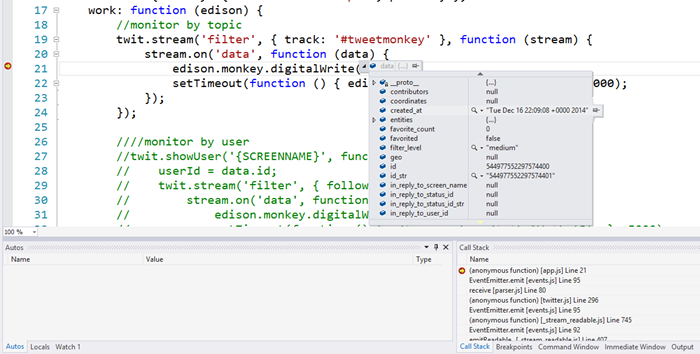

And, of course, in typical VS fashion, while broken, you can hover over JavaScript objects and check out all of their property values.

And you can open QuickWatch and enter any dynamic expressions you want and browse the results.
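Clicking in the margin isn’t the only way to get a breakpoint. JavaScript also has a debugger statement that pauses execution on that line whenever a debugger is attached and is ignored otherwise, which can be handy for code that only runs on the device. A minimal sketch; onTweet and the shape of the tweet object are hypothetical, not TweetMonkey’s actual code.

```javascript
// Hypothetical tweet handler standing in for your app's real callback.
function onTweet(tweet) {
  // With a debugger attached (for example Visual Studio through the remote
  // debugging proxy), execution should pause on this line. With no
  // debugger attached, the statement does nothing.
  debugger;
  return /#tweetmonkey\b/i.test(tweet.text);
}

console.log(onTweet({ text: 'Dance, monkey! #tweetmonkey' }));
```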

So, now you’re cruising!

The edref Project

There’s one last thing that I said I’d talk about, and that’s my edref project. There are a few things to do to set up a good, working Node.js environment in Visual Studio that will get you the kind of rich development environment I’ve outlined. It’s not a lot, but there’s no sense in doing it from scratch every time, so I’ve created a project called edref on GitHub that you can clone to get started right away. Feel free to fork it and submit pull requests if you have ideas for how to improve it. I personally use this project for starting new hardware hacks, so when I find more efficient ways to do something, I’ll update the GitHub project.

The one thing I’d like to point out about the project is that the gulpfile.js is all set up to deploy your project to a remote device. You just have to fill out the config.js file with your particulars.

Conclusion

You should have what you need to not only write code for your Intel Edison, but write it in Visual Studio style! If you have any questions about this, please let me know in the comments. Consider this a living blog post, in that I’ll add edits here as I learn more about this fun device.

Happy hardware hacking!

The Intel Edison

I am playing with the Intel Edison a lot these days. I’m a big proponent of it, because I’m a big proponent of both power and simplicity. This device has enough power for whatever maker, robotic, or gadgeteer project I can conceive of, and it’s all set up to easily run JavaScript via Node via Linux. This means I can use higher-level libraries like Cylon.js. This means if I want to turn a motor, it’s easy. If I want to read accelerometer data from an ADXL377, it’s easy. If I want to include a Node module for storing temperature and humidity data in Azure, it’s super duper easy. I like easy - just not at the cost of power.

I’ll stop being wordy, though, and just index the posts that I’ve created (and am still creating) for the Intel Edison. Here they are.

Getting Started with the Intel Edison should help you pull it out of the box, mount the device to the dev board, flash the latest OS to the device, and get your toes wet writing code.

Writing JavaScript for the Intel Edison intends to pick up where the former left off and should help you get started writing Node.js apps using Cylon.js for the device.

Using Visual Studio to Write Node for Devices will bring a bit of awesome to coding for devices. You’ll have a rich development environment including remote debugging so you can actually step through breakpoints and watch your gadget react!

Flashing an LED when you get a Tweet (up next) will use what we’ve learned so far along with a Node.js module to hook into Twitter’s streaming API to turn your LED on for a couple of seconds when you get a tweet.

Tweet Monkey is actually similar to the flashing LED sample I was going to do, but more fun. Would you rather see an LED flash or a monkey clanging cymbals?! The real value in this tutorial is the JavaScript that combines calling in to the Twitter Streaming API with using the Cylon library to talk to the monkey.

Command Monkey is Tweet Monkey’s older brother. Command Monkey is a fun and full-featured scenario where Cortana on the Windows Phone is used to tell the monkey to dance. This tutorial covers a pretty wide scope. You’ll learn to integrate Cortana, write a Windows Phone app using JavaScript, write a Node.js service on Azure, and communicate down to the device (the monkey in our case) using web sockets. It’s surprisingly little code overall.

Network IoT Devices (queued) is all about getting devices to form local or extremely broad networks that add up to huge logical apps and scenarios.

You can actually check out posts in progress before I’ve polished them and called them done. Please do feel free to engage in the comments and offer feedback, advice, or questions.

Writing JavaScript for an Intel Edison

In my last article about the Intel Edison, I showed you how to take yours out of the box and get it set up. Consider this article a follow-on. I’m going to pick up pretty much where the last one ended.

We actually wrote a little bit of code in the setup article, so you got a taste. Now we’re going to learn…

- how to use the power of Node.js to bring in libraries and command our device

- how to deploy code to the device wirelessly (zoing!)

- how to use the excellent Cylon library to make our code elegant and expressive

Let’s start off in this article without employing the help of Visual Studio, and then my next article will capture the many productivity benefits of VS. It’s always nice to start at the base and climb up to awesome tooling. That way, we’re thankful for the conveniences it brings. :)

Using Node.js

Installing Node.js

I’m assuming your host PC is Windows. I’m running the technical preview of Windows 10 and it’s all working great. I like to live on the edge.

The easiest way to install Node.js is by simply clicking the big green button you’ll find at nodejs.org…

You’ll see in the installation wizard that you’re actually getting the NPM utility installed along with the Node.js engine. The installation should take care of a lot of busy work for you, such as adding node and npm to your path so you can call them from the CLI. My CLI of choice, BTW, is PowerShell. To be clear, I’m talking here about installing Node.js on your host PC. Node and NPM should already be installed on the device if you flashed it according to the instructions in the last post.

After you have Node.js installed, we’ll walk through a few steps to create a new node project on your host PC. When we’re done with that, we’ll turn our attention to getting that code over to the Edison and running it there.

Creating the Folder and File Structure

Node.js projects have a pretty well-defined, convention-based folder and file structure. They’re very simple too - all of the metadata is in one spot. I don’t know where you keep your development projects, but I keep all of mine under c:\repos and I’ll start there. Watch the following video as I create a new project folder, generate an app package description file (package.json), and then create my initial app.js file…

As you can see, npm init makes the creation of our package.json file very easy. The package.json file describes your node project and has a few purposes. The two most prominent that I can think of are a) it provides the metadata necessary in case we end up publishing this package to the npm registry and b) it defines the project dependencies, so that when the project is copied somewhere else, a single command is all that’s necessary to go out and fetch all of the packages the app needs to work.

Writing the Code

Now, in keeping with a simple workflow at first, let’s open app.js in Notepad++ and fill out the following…

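var mraa = require('mraa'); //require the mraa module
var led = new mraa.Gpio(13); //create a pin object for pin 13
led.dir(mraa.DIR_OUT); //set the pin's direction to out
led.write(1); //raise the pin's logic level to 1 (LED on)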

This code depends on the mraa module, but if you followed my setup guide and flashed the Edison with the latest image, then you already have it. The mraa module exposes the C libraries for interacting with the Edison’s hardware (the GPIO pins, the I2C bus, etc.) to Python and to JavaScript.

The code simply creates a new pin out of pin 13. Pin 13 is the one that conveniently has an LED on the dev board, so we don’t even have to plug anything in to see it. It then sets the direction of that pin to out. And finally, it raises the logic level of the pin by writing a value of 1.

Deploying Wirelessly

We saw in the last guide how to SSH directly to our device. If you gave your device the name “betty” like I did, then you’ve been provided a DNS name of betty.local which represents the IP address that your device was assigned on the network. If you’re not sure whether the device and its DNS name are working, just run ping betty.local from the CLI (replacing ‘betty’ with the name of your device, of course). I’ve actually started pinging to discover the IP address and then using the IP address explicitly for SSH and SCP, because it takes a little time for the alias to resolve, but hitting the IP address directly is snappy.
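The resolve-once-then-use-the-IP trick can be scripted with Node’s built-in dns module. dns.lookup goes through the operating system’s resolver, so if ping betty.local works on your host PC, this should too. A minimal sketch; deviceHostname and resolveDevice are hypothetical helper names.

```javascript
const dns = require('dns').promises;

// Turns a device name like "betty" into its mDNS host name "betty.local".
// Anything that already contains a dot (a full host name or an IP address)
// is passed through unchanged.
function deviceHostname(name) {
  return name.includes('.') ? name : `${name}.local`;
}

// Resolves the device once through the operating system's resolver and
// returns its IPv4 address, which you can then reuse for ssh and scp.
async function resolveDevice(name) {
  const { address } = await dns.lookup(deviceHostname(name), { family: 4 });
  return address;
}

// Example: resolveDevice('betty').then((ip) => console.log(`ssh root@${ip}`));
```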

First, we’ll get our code copied to the device, and then we’ll SSH to the device in order to execute the code. It is also possible to simply execute the node app.js command remotely with SSH, but it’s fun to see what’s actually happening.
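If you’d like to script that copy-then-run round trip from the host PC, here’s a minimal sketch. It assumes ssh and scp are on your PATH and that the project folder already exists on the device; the user, host, and remote folder in config are placeholders, and scpArgs, sshRunArgs, and deploy are hypothetical helpers, not part of any library.

```javascript
const { execFileSync } = require('child_process');

// Placeholder deployment settings; replace with your device's details.
const config = {
  user: 'root',
  host: 'betty.local',
  remoteDir: '/home/root/hellonode',
};

// Arguments for: scp <files...> user@host:remoteDir
function scpArgs(files, { user, host, remoteDir }) {
  return [...files, `${user}@${host}:${remoteDir}`];
}

// Arguments for: ssh user@host "cd remoteDir && node entry"
// The remote command runs in the device's shell, so keep remoteDir free of spaces.
function sshRunArgs(entry, { user, host, remoteDir }) {
  return [`${user}@${host}`, `cd ${remoteDir} && node ${entry}`];
}

// Copies the files, then runs the entry script on the device, streaming its
// output to this terminal. Throws if either command exits with an error.
function deploy(files, entry, cfg = config) {
  execFileSync('scp', scpArgs(files, cfg), { stdio: 'inherit' });
  execFileSync('ssh', sshRunArgs(entry, cfg), { stdio: 'inherit' });
}

// Usage: deploy(['app.js'], 'app.js');
```

Building the argument lists separately from running them keeps the command shapes easy to check before anything touches the device.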

In this video, I’m going to SSH to the device, make a directory for our hellonode app, then jump back to my host machine, copy the files to the device (and specifically to that project folder) using SCP, and then back to the device to execute the app. The workflow can be simplified a bit, but I’m not arguing at this point. It’s pretty wonderful actually.

Notice the syntax of the SCP command. It wants scp, followed by the local file(s) to copy, followed by the destination in the form user@host:path.

You didn’t see anything happen there, but I did. My LED came on.

There are a few things that are cool about this. It’s cool that the mraa library preexists and makes communicating with the device pins so easy from a high-level language like JavaScript. Also, it’s great that we’re remoting and remote deploying our project wirelessly. This means that we can build something like an intelligent camera device, install it in a bird’s nest, and then remotely upgrade our software without disturbing the birds. That’s just the first example that came to mind.

Alright, now let’s make our development really clean and easy by employing the CylonJS library.

Using CylonJS

CylonJS can be found at cylonjs.com. You won’t even need to go there to get the code, because we’re going to use NPM, but you may want to go check it out to get familiar with the project and eventually to research the documentation for whatever device and driver you’re using.

Let’s run through the steps to do something similar to our hellonode app above, but use the Cylon library.

In this video we will: create a project folder, create our app.js file with some sample code from cylonjs.com that will cause pin 13 to blink, generate a package.json file, install cylon.js via NPM using the --save parameter to add it as a dependency in our package.json file, deploy to the device, and finally SSH to the device and execute the node program. You’ll actually see a video of the device with the LED blinking. Just a quick warning - not only are we holding off on Visual Studio at this point, but I’ve also decided to use a CLI text editor for the hilarity factor as well as the convenience of showing everything in one window. I actually really like using the command line, but GUIs are great too. As long as I don’t have to remove my hands from my keyboard, I’m a happy programmer.

EDIT: Thanks to Roberto Cervantes in the comments for pointing out a step that I should have called special attention to. In the following video, notice that after I scp the files to the device and then ssh to the device, I execute the command npm install. This looks into the package.json file that we just deployed to see what my project’s dependencies are, it goes out to the internet (to the NPM repository) to fetch the packages, and then it installs them. If you skip this step, your app will have no idea what require('cylon') means.

A few things to note about this video…

- My edit app.js command won’t work for you, because edit is a special command line text editor that I use. I’m going to go ahead and assume you have your own favorite way to edit a text file. :)

- Note that installing the cylon-intel-iot module also took care of the modules it depends on. We never had to add the cylon module as a dependency ourselves. That happened implicitly, because the cylon-intel-iot module knew that it needed it. That’s a cool thing about node modules - they each know exactly what they depend on, and a single install command recursively takes care of business.

- We copied over only the app.js and the package.json. We did not copy over the node_modules folder - you’ll notice that I deleted it before deploying. This is the right way to deploy a project. You don’t check node_modules into source control, and you don’t copy it over in a deployment. Instead, you rely on the dependencies defined in your package.json file. On the deployment server, you execute npm install, and if anything doesn’t get installed correctly, then you need to add it to your package.json file.
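To check for that “didn’t get installed” case on the device, a small script can read the dependencies listed in package.json and report any that Node can’t resolve. A minimal sketch; findMissingDependencies and readPackage are hypothetical helpers, and the resolver is a parameter so the logic can be tried without a real node_modules folder. Save it in the project folder, since require.resolve looks modules up relative to the script’s own location.

```javascript
const fs = require('fs');
const path = require('path');

// Reads and parses package.json from the given project folder.
function readPackage(dir) {
  return JSON.parse(fs.readFileSync(path.join(dir, 'package.json'), 'utf8'));
}

// Returns the names of dependencies declared in package.json that `resolve`
// cannot locate. By default that's Node's own require.resolve, which throws
// when a module can't be found.
function findMissingDependencies(pkg, resolve = require.resolve) {
  return Object.keys(pkg.dependencies || {}).filter((name) => {
    try {
      resolve(name);
      return false;
    } catch (err) {
      return true;
    }
  });
}

// Usage on the device, from the project folder after `npm install`:
// console.log(findMissingDependencies(readPackage(__dirname)));
```

An empty array means every declared dependency was found; any names it prints are the ones to look at.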

And now a bit about the CylonJS code. Here it is…

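var Cylon = require('cylon');
Cylon.robot({
  connections: {
    edison: { adaptor: 'intel-iot' }
  },
  devices: {
    led: { driver: 'led', pin: 13 }
  },
  work: function(my) {
    every((1).second(), my.led.toggle);
  }
}).start();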

Just like in our simpler hellonode app, we are doing a require right off the bat here. We are not, however, requiring the mraa library. Instead we’re requiring the cylon module. The cylon-intel-iot module will be invoked from within cylon and it will make calls to the mraa library. We’ve risen up one layer of abstraction. The big benefit here is that we’re no longer writing device-specific code. We could theoretically switch our Edison out for a Spark and, aside from swapping the adaptor, it would just work.

The code block is, I admit, unnecessarily heavy if you’re just blinking a light, but ponder for a minute the elegance of this compared to the inevitable mass of imperative code that would occur were we doing anything more complicated.

There are a couple of Cylon terms that I should explicitly call out…

A Cylon robot is the device - the Edison or Beaglebone or Sphero or Tessel or any of the other 32 or so devices that Cylon already has adaptors for. You define your robot with a connection, and the connection uses an adaptor (in the example it’s intel-iot).

A device in Cylon parlance is more like a component attached to the robot. In our example above, the pin is a component and so it’s registered as a device. The important part of the device declaration is the driver. A driver is the interface that Cylon will use to interact with it. When the led driver is used, then the thing we’re calling led will have functions like .turnOn() and .turnOff(). This means we only ever speak to our components in sensible language - you tell a servo motor .clockwise() and you capture a button press with .on('push',function(){}). Sensible is good in my book.

Besides declaring the connections and devices, we declare a function that is to run when the robot is finished with its setup. In this case, we’re using the built-in every function provided by the Cylon library to toggle the led every second. Easy peasy.
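To make the driver idea a bit more concrete, here’s a toy sketch of what a driver layer does: it wraps a raw pin that only knows how to write 0 or 1 and hands you sensible verbs instead. This is not Cylon’s implementation or API (real Cylon drivers do a lot more); makeLed and the pin’s write() interface are assumptions for illustration, loosely modeled on the mraa pin that we wrote a 1 to earlier.

```javascript
// A toy "led driver": sensible verbs on top of a raw pin that only
// understands write(0) and write(1). Illustrative only, not Cylon's code.
function makeLed(pin) {
  let isOn = false;
  const set = (on) => {
    isOn = on;
    pin.write(on ? 1 : 0);
  };
  return {
    turnOn: () => set(true),
    turnOff: () => set(false),
    toggle: () => set(!isOn),
    isOn: () => isOn,
  };
}

// A fake pin that records writes, standing in for real hardware.
const writes = [];
const fakePin = { write: (value) => writes.push(value) };

const led = makeLed(fakePin);
led.turnOn();
led.toggle();
led.toggle();
console.log(writes); // [ 1, 0, 1 ]
```

Because these methods don’t depend on this, you can hand led.toggle straight to a timer, which is the pattern the every call relies on when it toggles the led every second.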

Well, folks, there you have it. This is the point in my learning that I felt like the gate was open and I was running free and wild. Oh, the myriad of devices to communicate with. Oh, the plethora of components. Oh, the possibilities.

I have a few more posts to write on this, but I’m keeping them all separate so I can get them out the door. All of these articles are indexed at codefoster.com/edison.

Please feel free to comment below if you have any trouble here. There’s a good chance I ran into the trouble you’re finding, and if I didn’t I’m willing to bang my head against the wall a bit with you - just a bit.

Setting up an Intel Edison

This is a guide to getting started with the Intel Edison.

Intel has published a guide for getting started with this device as well, but I wanted to get everything into one place, tell it from my perspective, and smooth over a couple of the bumps I hit on the way. Let me know with a comment below if you have any questions.

I like to keep things simple, so I’m going to help you get started with the Edison as easily as possible.

In a subsequent post, I’m going to show you how you can very, very easily start writing JavaScript to control your Edison. You won’t have to deal with Wiring code, you won’t have to install the Arduino IDE (argh!), and you won’t have to install Intel’s attempt at an IDE - Intel XDK IoT Edition. You’ll be able to use Visual Studio, deploy to the device wirelessly, and then have plenty of time when it’s done to jump in the air and click your heels together.

In another post, I’m going to show you how to use Azure’s Event Hub and Service Bus (via a framework called NitrogenJS) to not only do cool things on one Edison, but to do cool (likely cooler actually) things on multiple Edisons, other devices, webpages, computers, etc.

Introducing the Edison

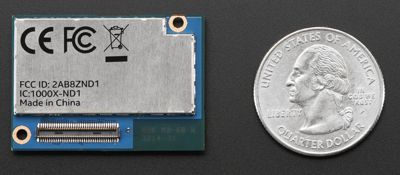

The Edison is tiny. It’s the size of an SD card. Despite its size, though, it has built-in WiFi and Bluetooth LE and enough processor and memory oomph to get the job done.

The design of the Edison is such that it’s pretty easy to implement a quasi-production solution. It’s not always easy getting a full Arduino board into your project, but the Edison is almost sure to fit.

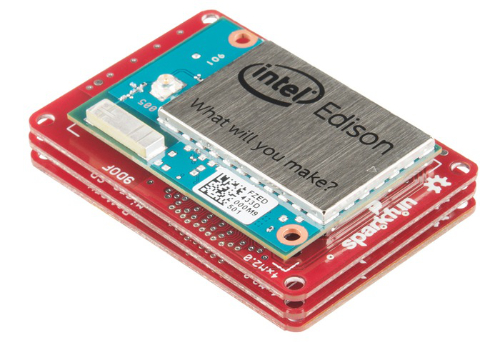

I’m not the only one that’s excited about this System on a Chip (SoC) either. Sparkfun.com is too. They made a great video introducing the technical specs of the Edison and showcasing their very cool line of modules that snap right onto the Edison’s body. Here you can see a few of those modules piled up to produce a very capable solution…

That’s the kind of compact solution I’m talking about. The only problem is that at the time of writing, the modules are only available for pre-order.

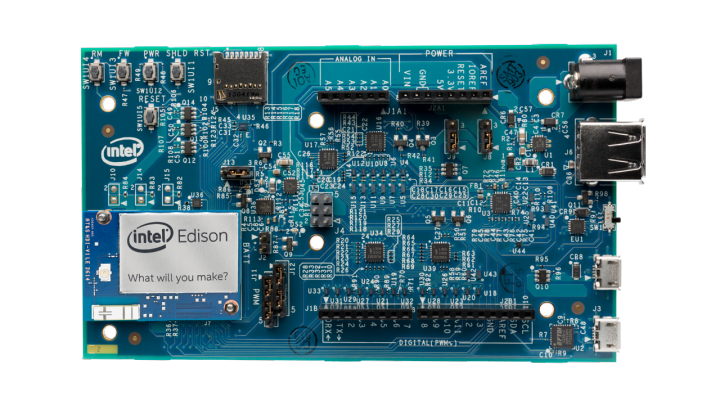

Not to worry though, there are a couple of other ways to interface with the Edison and get working on your project. The recommended way is by using the Arduino dev board. This is what the Edison looks like when it’s snapped to the dev board.

This dev board breaks out all of the functionality packed into the Edison and makes life easy. It gives you USB headers, a power plug, GPIO (general purpose input/output) pins, a few buttons, and a micro-SD card slot.

There’s another dev board available for the Edison - a mini board - but it’s less used because it requires soldering and doesn’t offer as many breakouts. If you’re looking for compact, you can just wait for the Sparkfun modules I mentioned. Here’s the Edison mounted on its mini board…

In this guide, I’ll take you end to end through getting the Edison set up on a Windows machine. I am not prepared to detail instructions for Mac/Linux, but Intel did a good job of that in their guide anyway. I’m going to cover the physical setup of the device and the installation of the drivers (on your host PC running Windows), then walk you through flashing it with Intel’s custom Yocto Linux image and training it to connect to Wifi. When all is said and done, we’ll never have to plug the Edison into our host PC via USB again. We’ll be able to wirelessly deploy software and otherwise communicate with the device. Finally, we’ll write a simple bit of code, but in a follow-up post, we’ll get crazy with software, because that’s what we do.

Physical Setup

Physically setting up the Edison once you’ve pulled it out of the box is pretty straightforward. I’ll assume you’re starting with the Arduino dev board.

Add the plastic standoffs to the dev board if you’d like. They’re good for keeping Edison’s sensitive underbelly from touching anything conductive and creating a dreaded short.

Next snap the Edison chip onto the board. You can put the tiny nuts onto the posts to hold the chip on, but I choose to rely on the solid friction fit instead.

Flashing

Before your Edison is going to do anything exciting, you’ll need to connect to it and flash it with the latest version of the Yocto Linux distribution that is provided by Intel. At first, it has no idea how to connect to a Wifi hotspot, so we’ll have to establish a serial connection. It’s quite easy actually.

Intel’s guide does a decent job of walking you through these steps, but again, I’m going to do it on my own to add my own perspective and lessons learned.

The guide asks you to plug in both USB cables. That will work, but it helps to understand why. One of the USB micro plugs (the one closest to the power plug and the larger USB port) is a host plug that will a) power the Edison via USB and b) cause a certain directory from its file system to appear in Windows Explorer as a drive. The other USB micro plug (the one closest to the edge of the board) is a serial connection that will allow you to establish a 115200 baud serial connection before Wifi is set up.

What I prefer to do is power the Edison with a barrel connector, and then just use one USB cable plugged in to whichever header I need. This works well especially since one of them is only needed for one step upon initial setup - to flash the device. I have a USB to barrel connector, so I can simply plug my Edison into a USB battery pack and emphasize that it’s completely wireless.

Do make sure the itty bitty switch next to the USB micro plugs is switched toward the USB micro plugs.

Download and install FTDI drivers. First, you need to download and install the FTDI drivers which allow your host computer to communicate with the USB header on the Edison. The file you download (“CDM…”) can be directly executed, but **I had to run it in compatibility mode** since I’m running Windows 10. I won’t insult your intelligence by telling you how to hit Next, Next, Finish.

Download Intel Edison Drivers. Now you need drivers for RNDIS, CDC, and DFU. It sounds hard, but it’s not. Go to Intel’s Edison software downloads page and look for the “Windows Driver setup”. This downloads a file called IntelEdisonDriverSetup1.0.0.exe. Execute the file and complete the installation. The download goofed up for me in IE11 on Windows 10, so if you run into that, go to the link at the bottom of the page that says it’s for “older versions”. The download for that same file is there and it’s not an older version.

Note: In case you want to know, RNDIS (Remote Network Driver Interface Specification) is for a virtual Ethernet link over USB, CDC (Communications Device Class) is the USB standard for communication devices such as virtual serial ports, and DFU (Device Firmware Upgrade) is functionality for updating firmware on devices.

**Copy flash files over.** With these two driver packs installed, you should have a new Windows drive letter in Explorer called Edison. This is good, because that’s where you copy the files that will be used to flash the device. Go back to the Intel Edison software downloads page and locate and download “Edison Yocto complete image”. Save it locally and unzip it. Now make sure the Edison drive in Windows Explorer is completely empty and then copy the entire contents of the zip file you just downloaded into that drive.

Connect to the device using serial. Since we’re finished copying files to the device and ready to connect to it over serial, we need to switch the USB cable from the inner port to the outer port. Do make sure you have the USB to barrel connector in place so you get the reassuring power light on the board.

The Intel guide walks you through using PuTTY (a Windows client for doing things like telnet, SSH, and serial connections). I’m a command line guy, so I use a slightly different approach using PowerShell which I’ll present. You can choose which you like better.

Go to the PuTTY download page, download plink and save the resulting plink.exe file into some local directory. I use c:\bin. Now you’re ready to use PowerShell to connect to a serial port. Very cool.

Note: plink in PowerShell does something goofy with the backspace key. It works, but it renders ?[J each time it’s pressed. If you know a way around this, let me know.

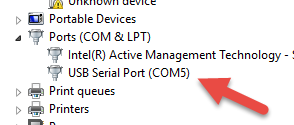

Before you connect, you have to find the COM port for the Edison. Go to Device Manager and expand Ports (COM & LPT). Now look for USB Serial Port (COMx), where x is a number. Mine is COM5.

Here’s the line I use to connect (actually, I put a plink function in my $profile to make it even easier… ask me if you want to see how)…

. c:\bin\plink.exe -serial COM5 -sercfg 115200,8,1,n,N

…if you saved your plink.exe into a different location or if your COM port is different then change accordingly.

Execute that line and then hit Enter a couple of times. You should find yourself at a root@edison:~# prompt. That’s good news. You’re on the device!

Initiate the flash. To flash your Edison using the files you copied into the drive, you simply type reboot ota and hit Enter. Watch your device do a whole bunch of stuff that you don’t have time to understand, and rejoice when it finishes and returns you to the login prompt. Your login is root and you don’t have a password. You should be sitting at root@edison:~# again.

Set up wireless. You have a full Linux distribution flashed to your Edison now, and it’s ready to talk Wifi. You just have to configure a couple of things. Namely, you have to a) give your Edison a password (technically, provide a password for the root account), b) make sure your host PC is on the same network as your Edison, and c) provide your SSID and password. The first point is one thing the Intel guide skips - the need to set a password on the root account. If you don’t do it, you won’t be able to SSH to the device over Wifi. To initiate, execute configure_edison --setup. You’re prompted for a device name. I like to name mine (mine are called Eddie and Betty) so they’re easier to tell apart in terminal windows. I provide easy passwords for mine, because I’m not exactly worried about getting hacked. Next, you’ll be prompted to set up Wifi. Follow along, choose the right hotspot, and enter the Wifi password when prompted.

Connect to the device using SSH over TCP/IP via the Wifi. Let’s get rid of this silly hard link and serial connection and join the modern era with a Wifi connection. Disconnect from the plink connection using CTRL+C. You can pull that USB cable out of your Edison’s dev board too. Your Edison is now untethered! Well, except for power, but a battery could take care of that.

Now again, I’ll diverge from the Intel guide here so I can stick with the command line. I use Cygwin on my box to allow me to SSH via PowerShell. I like it a lot. EDIT: Thanks to @palermo4 for pointing out that it’s not Cygwin that’s giving me this functionality, but rather my install of GitHub for Windows and the fact that I subsequently added GitHub’s bin folder to my path in Windows. Simply install GitHub for Windows, then go to your Environment Variables in Windows, edit the PATH variable, and append GitHub’s bin directory to the end. On my system, it’s at C:\Users\jerfost\AppData\Local\GitHub\PortableGit_7eaa994416ae7b397b2628033ac45f8ff6ac2010\bin; I’m guessing yours is different :) After adding this, test it by typing SSH<Enter> in PowerShell and see if you get something besides an error.

You can use this approach, or you can go back to the Intel guide and use the PuTTY approach.

Before you can SSH to your device, you have to figure out what it got for an IP address. But the Edison has done something nice for you there. It created name.local as a DNS entry, so to connect to an Edison with the name eddie, you can simply use eddie.local. Mine is resolving to 192.168.1.9. If this doesn’t work for you, you can always log in to your router and find out what IP address was assigned to it, or you can go back to the serial connection, type ifconfig at the prompt, and look for the wlan0 interface to see what address was assigned.

From my PowerShell prompt, I can just type ssh root@eddie.local. You’re connected to your Edison over Wifi now, and you’re so very happy!

Ready to Write Code!

From here, you’re ready to write some code. I’m going to write an extensive blog post on the topic, but for now, let’s get at least a taste.

First, make sure you’re SSH’ed to the device. So run that same ssh root@eddie.local command and sign in with your password.

Hello World! First things first. Let’s greet the world using JavaScript (via NodeJS). Type node and hit Enter and you should be at a > prompt. You’re in NodeJS… on your Edison. Man, that was easy.

Now type console.log('Hello World'); and hit Enter. There you have it.

Hit CTRL+C twice to get back to your Linux prompt.

Blink the light, already! You haven’t gotten started with an IoT device until you’ve blinked an LED, so let’s get to it. Still SSH’ed to your device, execute each of these lines (each at the prompt) followed by Enter…

node //enter NodeJS again

And this is full-on JavaScript, so you can go crazy with it (recommended). Try this…

var state = 0; //create a variable for saving the state of the LED

The light should be flashing on and off, but your state of bliss should be sustained high!
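When you’re ready to move that REPL experiment into a file, the same idea might look something like this. It’s a minimal sketch that assumes the mraa calls Intel’s image provides (new mraa.Gpio(13), dir(mraa.DIR_OUT), and write()); when mraa isn’t available, say on your host PC, it falls back to a fake pin that just logs, so you can run the logic anywhere. The 500 ms interval and the openLedPin and blink names are my own choices.

```javascript
// Try the real hardware library; fall back to a logging fake on machines without it.
function openLedPin(pinNumber) {
  try {
    const mraa = require('mraa');
    const gpio = new mraa.Gpio(pinNumber);
    gpio.dir(mraa.DIR_OUT);
    return gpio;
  } catch (err) {
    return { write: (value) => console.log(`pin ${pinNumber} <- ${value}`) };
  }
}

// Toggles the pin every intervalMs. Returns a function that stops blinking
// and leaves the LED off.
function blink(pin, intervalMs = 500) {
  let state = 0;
  const timer = setInterval(() => {
    state = state ? 0 : 1;
    pin.write(state);
  }, intervalMs);
  return () => {
    clearInterval(timer);
    pin.write(0);
  };
}

// Usage: blink for five seconds, then stop.
// const stop = blink(openLedPin(13));
// setTimeout(stop, 5000);
```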

It’s obviously not sustainable to write our code at the prompt, so stay tuned for my next guide on writing code for your Edison.

Have fun!

I had one gripe with the Microsoft Band... Now I have zero.

For the most part, I’ve been really enjoying the Microsoft Band that I bought the day they went on sale.

Like the rest of the world, I learned about the landing of some new Microsoft Health apps in the various app stores on Wed, Oct 29, and like the rest of the world, I was thrilled to hear the next morning that the Band was available for sale. Serendipitously, I had broken my last watch (of 8 years!) only weeks before, and was holding out on buying a new one in case we released something. Then we did, so I was ready to buy.

Since my purchase, I have been continuously pleased to have certain bits of information on my wrist. I’ve never really had any useful information on my wrist before. Sure, I had a Casio Data Bank watch in the 80s like any good geek.

But I’m talking about useful information. The difference between a helpful computer and a truly useful computer, in my opinion, has always been connectivity. A computer without connectivity is a glorified calculator. So there’s a big difference between my Data Bank and my new Band.

But I had one gripe.

My Band was not glanceable. When I glanced at my Band, I saw a black screen. I assumed this was a concession that just had to be made for battery life, and I was okay with it, but I was regularly a bit disappointed that I had something on my wrist but couldn’t glance at it and note the time. It was not just disappointing, but somewhat disorienting, since glancing was a strong habit from the last 8 years with a normal watch.

There was one more caveat to my gripe. I didn’t have any way that I could find to see the date. Seeing the current date is a major use case for me. When I sign something at a register, I don’t have time to actually think about what the current date is, I like to simply have a quick look at my watch. The Band, however, didn’t have this information for me - it was just a black screen.

Sure, I found watch mode in the settings, but immediately made the wrong assumption. I assumed that watch mode was going to keep the screen on full all the time. Intuitively, that would be an unacceptable battery drain, and likely a bit of a distraction. Even if the screen were to remain on all the time, it wouldn’t solve the second part of my gripe - information about today’s date.

Recently I actually gave watch mode a try and am absolutely thrilled to realize that both of my problems are solved.

Certainly keeping certain pixels white 24/7 will have some impact on battery life, but my intuition tells me it won’t be much, and my colleague Tobiah Marks tells me he’s been using watch mode and still gets at least 1.5 days. I don’t have any trouble, as I initially thought I would, finding time to charge my Band, so this is absolutely fine with me.

Watch mode actually works a bit like the much-loved glance feature on Microsoft’s Lumia phones - it blacks the screen and shows the time in white. In the case of the Band, it also shows the date. Now I have the time and date at a glance all the time and that makes me super happy. When I wake the Band up with a power button press, I still see the date on the main screen in place of the steps. In essence, then, watch mode has told my Band that I use my device a bit more like a watch than a fitness band. Which is correct in my case. I’m thrilled to have all of the fitness features, but primarily I want a watch that makes me feel like I’m on the Starship Enterprise.

Beam me up, Cortana.